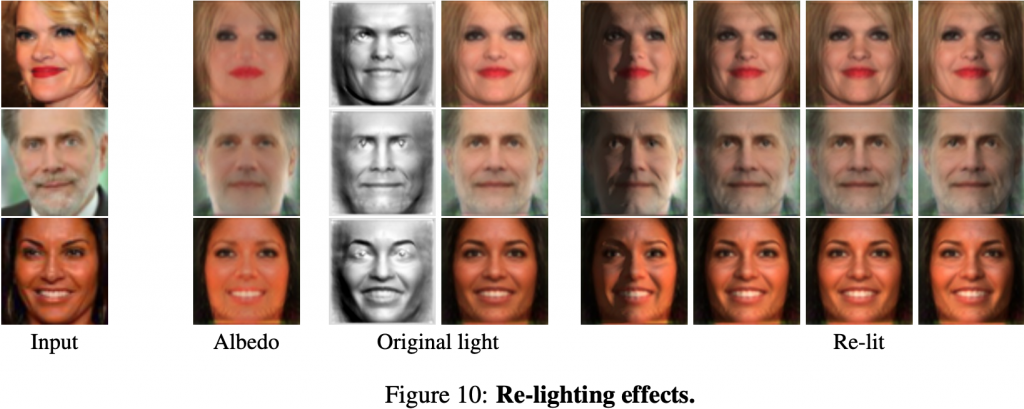

Images used for research in creating 3D reconstructions. (Source: University of Oxford research)

10 Best Ranked AI Papers Cover NLP, 3D Objects, Multi-Agent Interaction, among Other Topics

A story on syncedreview.com highlighted 10 award-winning, peer-reviewed AI research papers from among the more than 6,656 published in 2020. They cover a range of areas, from statistical modeling to natural language processing (NLP) to unsupervised learning of 3D objects. The number of AI research papers has grown by 300% in the past two decades, according to the Stanford AI Index. Here are links and summaries of five of the papers:

AAAI 2020 Outstanding Paper Award

WinoGrande: An Adversarial Winograd Schema Challenge at Scale

Authors: Keisuke Sakaguchi, Ronan Le Bras, Chandra Bhagavatula, Yejin Choi

Institution(s): Allen Institute for Artificial Intelligence, University of Washington

Abstract: The Winograd Schema Challenge (WSC) (Levesque, Davis, and Morgenstern 2011), a benchmark for commonsense reasoning, is a set of 273 expert-crafted pronoun resolution problems originally designed to be unsolvable for statistical models that rely on selectional preferences or word associations. However, recent advances in neural language models have already reached around 90% accuracy on variants of WSC. This raises an important question of whether these models have truly acquired robust commonsense capabilities or whether they rely on spurious biases in the datasets that lead to an overestimation of the true capabilities of machine commonsense.

CVPR 2020 Best Paper Award

Unsupervised Learning of Probably Symmetric Deformable 3D Objects from Images in the Wild

Authors: Shangzhe Wu, Christian Rupprecht, Andrea Vedaldi

Institution(s): University of Oxford

Abstract: We propose a method to learn 3D deformable object categories from raw single-view images, without external supervision. The method is based on an autoencoder that factors each input image into depth, albedo, viewpoint and illumination. In order to disentangle these components without supervision, we use the fact that many object categories have, at least in principle, a symmetric structure. We show that reasoning about illumination allows us to exploit the underlying object symmetry even if the appearance is not symmetric due to shading.

ACL 2020 Best Overall Paper

Beyond Accuracy: Behavioral Testing of NLP Models with CheckList

Authors: Marco Tulio Ribeiro, Tongshuang Wu, Carlos Guestrin, Sameer Singh

Institution(s): Microsoft Research, University of Washington, University of California-Irvine

Abstract: Although measuring held-out accuracy has been the primary approach to evaluate generalization, it often overestimates the performance of NLP models, while alternative approaches for evaluating models either focus on individual tasks or on specific behaviors. Inspired by principles of behavioral testing in software engineering, we introduce CheckList, a task-agnostic methodology for testing NLP models. CheckList includes a matrix of general linguistic capabilities and test types that facilitate comprehensive test ideation, as well as a software tool to generate a large and diverse number of test cases quickly.
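To make the idea of behavioral testing concrete, here is a minimal Python sketch of two test types in the spirit of the paper: a minimum functionality test (simple cases with a known expected label) and an invariance test (a label-preserving change should not alter the prediction). It does not use the CheckList tool or its API. The classifier `toy_sentiment_model`, the template helper `expand`, and the two test functions are hypothetical names invented for illustration.

```python
from itertools import product


def toy_sentiment_model(text):
    """Hypothetical stand-in classifier that only counts a few cue words."""
    positive = {"great", "good", "love"}
    negative = {"bad", "terrible", "hate"}
    words = text.lower().replace(".", "").split()
    score = sum(w in positive for w in words) - sum(w in negative for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def expand(template, **slots):
    """Fill a template with every combination of slot values."""
    keys = list(slots)
    combos = product(*(slots[k] for k in keys))
    return [template.format(**dict(zip(keys, combo))) for combo in combos]


def minimum_functionality_test(model, cases, expected):
    """Return the cases whose prediction differs from the expected label."""
    return [case for case in cases if model(case) != expected]


def invariance_test(model, groups):
    """Each group holds variants of one input; return groups with mixed predictions."""
    return [group for group in groups if len({model(t) for t in group}) > 1]


# Capability: negation. The cue-word model ignores "not", so every case fails.
negation_cases = expand(
    "The {thing} is not {adj}.", thing=["food", "service"], adj=["good", "great"]
)
negation_failures = minimum_functionality_test(
    toy_sentiment_model, negation_cases, expected="negative"
)

# Capability: robustness to names. Swapping the name should not change the label.
name_groups = [expand("{name} said the food was great.", name=["Maria", "John"])]
name_failures = invariance_test(toy_sentiment_model, name_groups)

print(f"negation failures: {len(negation_failures)} of {len(negation_cases)}")
print(f"name invariance failures: {len(name_failures)} of {len(name_groups)}")
```

A model like this could score well on a held-out set that rarely contains negation, yet fail every templated negation case, which is the kind of gap the abstract says accuracy alone can hide.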

ICML 2020 Outstanding Paper Awards

Efficiently Sampling Functions From Gaussian Process Posteriors

Authors: James Wilson, Slava Borovitskiy, Alexander Terenin, Peter Mostowsky, Marc Deisenroth

Institution(s): Imperial College London, St. Petersburg State University, St. Petersburg Department of Steklov Mathematical Institute of Russian Academy of Sciences, University College London

Abstract: Gaussian processes are the gold standard for many real-world modeling problems, especially in cases where a model’s success hinges upon its ability to faithfully represent predictive uncertainty. These problems typically exist as parts of larger frameworks, wherein quantities of interest are ultimately defined by integrating over posterior distributions. These quantities are frequently intractable, motivating the use of Monte Carlo methods. Despite substantial progress in scaling up Gaussian processes to large training sets, methods for accurately generating draws from their posterior distributions still scale cubically in the number of test locations. We identify a decomposition of Gaussian processes that naturally lends itself to scalable sampling by separating out the prior from the data.
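One common way to write a posterior draw as a prior draw plus a data-dependent correction is Matheron's rule: (f | y)(x*) = f(x*) + K(x*, X) (K(X, X) + σ²I)⁻¹ (y − f(X) − ε), with ε drawn from N(0, σ²I). The Python sketch below illustrates that decomposition under stated assumptions and is not the authors' implementation. The RBF kernel, the function names, and the hand-rolled Cholesky solver are all hypothetical choices. The prior is drawn exactly over all points here, so the sketch shows the separation of prior and data but not the scaling benefit the abstract describes.

```python
import math
import random


def rbf(a, b, lengthscale=1.0):
    """Squared-exponential kernel on scalars (an assumed kernel choice)."""
    return math.exp(-0.5 * ((a - b) / lengthscale) ** 2)


def gram(xs, zs):
    return [[rbf(x, z) for z in zs] for x in xs]


def cholesky(A, jitter=1e-10):
    """Lower-triangular L with L L^T = A + jitter * I."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(A[i][i] + jitter - s)
            else:
                L[i][j] = (A[i][j] - s) / L[j][j]
    return L


def chol_solve(L, b):
    """Solve (L L^T) x = b by forward then backward substitution."""
    n = len(b)
    z = [0.0] * n
    for i in range(n):
        z[i] = (b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (z[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def sample_prior(points, rng):
    """Exact joint draw from the zero-mean GP prior at the given points."""
    L = cholesky(gram(points, points), jitter=1e-8)
    u = [rng.gauss(0.0, 1.0) for _ in points]
    return [sum(L[i][k] * u[k] for k in range(i + 1)) for i in range(len(points))]


def pathwise_update(x_train, y_train, x_test, f_train, f_test, eps, noise_var):
    """Correct a prior draw (f_train, f_test) with the data via Matheron's rule."""
    K = gram(x_train, x_train)
    for i in range(len(x_train)):
        K[i][i] += noise_var
    residual = [y - f - e for y, f, e in zip(y_train, f_train, eps)]
    weights = chol_solve(cholesky(K), residual)
    K_star = gram(x_test, x_train)
    return [f + sum(k * w for k, w in zip(row, weights))
            for f, row in zip(f_test, K_star)]


def posterior_sample(x_train, y_train, x_test, noise_var, rng):
    """Prior draw first, then the data-dependent update."""
    n = len(x_train)
    f = sample_prior(list(x_train) + list(x_test), rng)
    eps = [rng.gauss(0.0, math.sqrt(noise_var)) for _ in range(n)]
    return pathwise_update(x_train, y_train, x_test, f[:n], f[n:], eps, noise_var)


draw = posterior_sample([0.0, 1.0, 2.5], [0.3, -1.2, 0.8], [0.5, 1.5], 0.01,
                        random.Random(0))
print(draw)
```

Because the data enter only through `pathwise_update`, the prior draw could in principle be swapped for a cheaper approximate one without touching the correction step.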

Generative Pretraining from Pixels

Authors: Mark Chen, Alec Radford, Rewon Child, Jeffrey K Wu, Heewoo Jun, David Luan, Ilya Sutskever

Institution(s): OpenAI

Abstract: Inspired by progress in unsupervised representation learning for natural language, we examine whether similar models can learn useful representations for images. We train a sequence Transformer to auto-regressively predict pixels, without incorporating knowledge of the 2D input structure. Despite training on low-resolution ImageNet without labels, we find that a GPT-2 scale model learns strong image representations as measured by linear probing, fine-tuning, and low-data classification. On CIFAR-10, we achieve 96.3% accuracy with a linear probe, outperforming a supervised Wide ResNet, and 99.0% accuracy with full fine-tuning, matching the top supervised pre-trained models. An even larger model trained on a mixture of ImageNet and web images is competitive with self-supervised benchmarks on ImageNet, achieving 72.0% top-1 accuracy on a linear probe of our features.
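The core setup in the abstract, treating an image as a flat pixel sequence and predicting each pixel from the ones before it, can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the paper trains a Transformer that conditions on all previous pixels, whereas the hypothetical `fit_bigram` model below conditions on just the previous pixel and uses add-one smoothing. All function names are invented for this example.

```python
import math
from collections import Counter, defaultdict


def flatten(image):
    """Unroll a 2D image (list of rows) into a raster-order pixel sequence."""
    return [pixel for row in image for pixel in row]


def fit_bigram(sequences, num_values):
    """Toy autoregressive model: p(pixel | previous pixel), add-one smoothed.

    A stand-in for the paper's Transformer; None marks the start of a sequence.
    """
    counts = defaultdict(Counter)
    for seq in sequences:
        prev = None
        for pixel in seq:
            counts[prev][pixel] += 1
            prev = pixel

    def prob(prev, pixel):
        c = counts[prev]
        return (c[pixel] + 1) / (sum(c.values()) + num_values)

    return prob


def average_nll(seq, prob):
    """Average negative log-likelihood of a sequence, one factor per pixel."""
    total = 0.0
    prev = None
    for pixel in seq:
        total -= math.log(prob(prev, pixel))
        prev = pixel
    return total / len(seq)


# Two-value "images": train on vertical stripes, then score seen vs. unseen patterns.
stripes = [[0, 1], [0, 1]]
solid = [[1, 1], [1, 1]]
prob = fit_bigram([flatten(stripes)], num_values=2)

print("stripes NLL:", average_nll(flatten(stripes), prob))
print("solid NLL:  ", average_nll(flatten(solid), prob))
```

The model never sees row or column positions, only the sequence, which mirrors the abstract's point about not incorporating knowledge of the 2D input structure.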

Five additional impactful papers are listed in the article, which gives a deeper look at what is on the AI horizon.

Read more at syncedreview.com
