Intel Eyes the Future with Backpack to Assist Blind and Visually Impaired People

After reading about a new visual aid system and then watching a video of it in action, I can say it is an incredible new tool. Part AR, part VR, part Alexa-style voice assistant and more, the system makes the most of the latest tech for people who are blind or sight-impaired.

The story comes from businesswire.com, and it highlights a brand-new approach to visual assistance. According to the World Health Organization, approximately 285 million people worldwide have sight difficulties.

Intel is showcasing an AI-powered backpack with a full suite of voice-driven visual assistance features. Artificial intelligence (AI) developer Jagadish K. Mahendran and his team designed the voice-activated backpack to help visually impaired people navigate and perceive the world around them. It detects common challenges such as traffic signs, hanging obstacles, crosswalks, moving objects and changes in elevation, all while running on a low-power, interactive device. It tells the user where obstacles are by clock position, for example "one o'clock."
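Announcing a direction as "one o'clock" means mapping the obstacle's position onto a clock face, with 12 o'clock straight ahead. The source does not describe how Mahendran's code does this, so the following is only a minimal Python sketch under stated assumptions: it assumes the camera reports an obstacle's sideways offset `x` (positive to the user's right) and forward distance `z` in the same units, and the function names `bearing_degrees` and `clock_position` are hypothetical.

```python
import math


def bearing_degrees(x: float, z: float) -> float:
    """Horizontal angle to an obstacle, in degrees.

    Assumption (hypothetical convention): x is the sideways offset, positive to
    the user's right, and z is the forward distance, in the same units.
    0 degrees is straight ahead; positive angles are to the right.
    """
    return math.degrees(math.atan2(x, z))


def clock_position(bearing: float) -> str:
    """Round a bearing to the nearest clock hour, with 12 o'clock straight ahead."""
    # Each hour on a clock face covers 360 / 12 = 30 degrees.
    hour = round(bearing / 30) % 12
    # Hour 0 on the modular scale is announced as 12 o'clock.
    return f"{hour or 12} o'clock"


if __name__ == "__main__":
    # Hypothetical obstacle 1.0 m ahead and 0.6 m to the right (about 31 degrees).
    angle = bearing_degrees(0.6, 1.0)
    print(f"Obstacle at {clock_position(angle)}")  # prints "Obstacle at 1 o'clock"
```

Rounding to whole hours trades precision for brevity, which matters when several objects are announced in a row.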

The story also comes with a bit of irony, as the inventor explains:

“Last year when I met up with a visually impaired friend, I was struck by the irony that while I have been teaching robots to see, there are many people who cannot see and need help. This motivated me to build the visual assistance system with OpenCV’s Artificial Intelligence Kit with Depth (OAK-D), powered by Intel.”

–Jagadish K. Mahendran, Institute for Artificial Intelligence, University of Georgia

We are all familiar with traditional visual aids such as a seeing-eye dog or the beeping white cane with a red tip that helps guide people with limited sight. Intel's backpack-and-vest system, however, takes an entirely different approach. Mahendran has made the code open source so that any company can use it to build a similar system.


The technology is exciting for anyone with a visual impairment, though it will take some getting used to. In the video, the voice announcements are sometimes hard to catch because the synthesized voice calls out several objects repeatedly, which could be distracting.

How It Operates

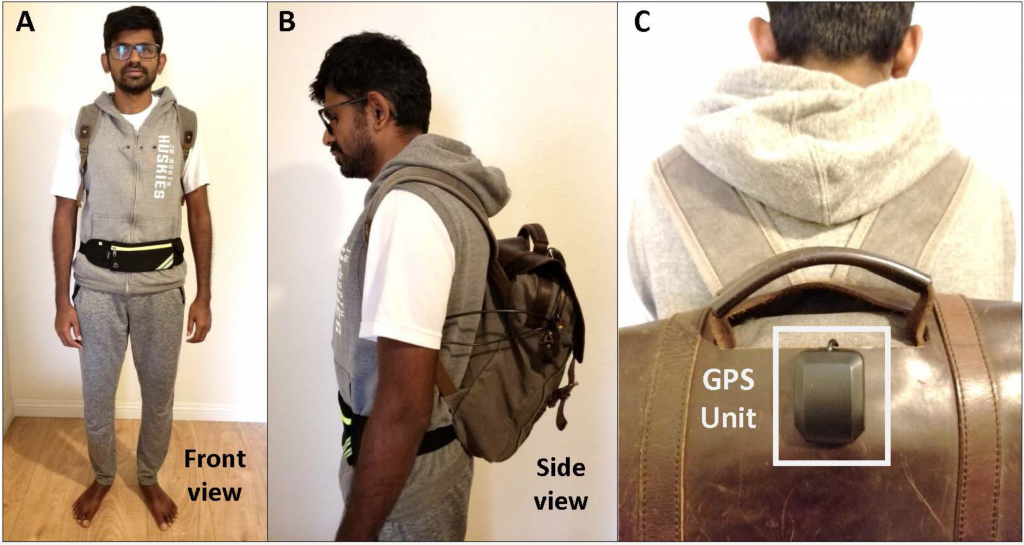

The system is housed inside a small backpack containing a host computing unit, such as a laptop. A vest conceals the camera, and a fanny pack holds a pocket-size battery pack that provides approximately eight hours of use. The Luxonis OAK-D spatial AI camera can be attached to either the vest or the fanny pack and is connected to the computing unit in the backpack. When it is mounted inside the vest, three tiny holes in the fabric serve as viewports for the camera.

“Our mission at Luxonis is to enable engineers to build things that matter while helping them to quickly harness the power of Intel AI technology,” said Brandon Gilles, founder and chief executive officer, Luxonis. “So, it is incredibly satisfying to see something as valuable and remarkable as the AI-powered backpack built using OAK-D in such a short period of time.”

OpenCV’s Artificial Intelligence Kit with Depth (OAK-D) may open new doors for blind and visually impaired people who want to navigate the world.

Read more at businesswire.com.
