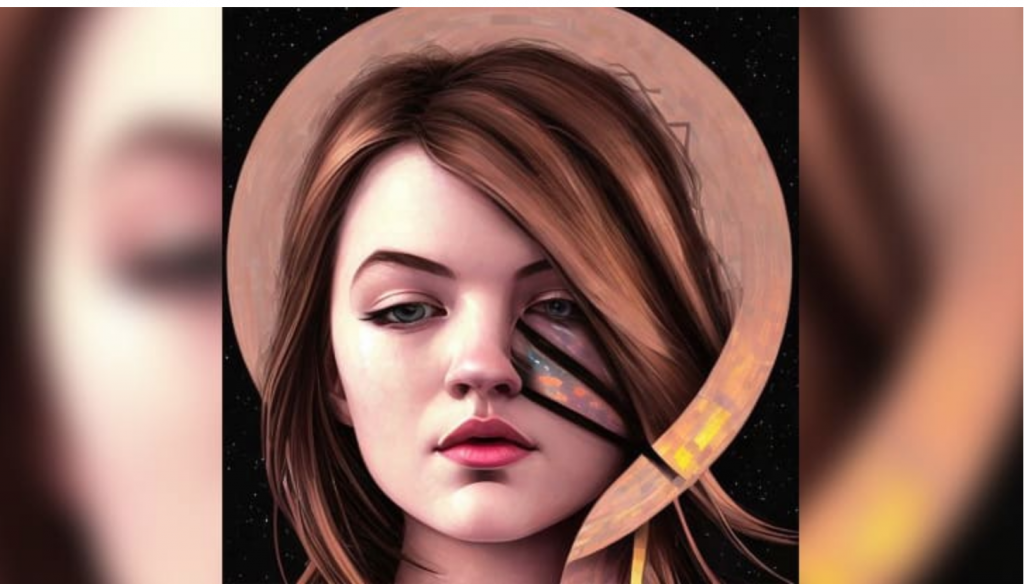

CNN’s Zoe Sottile generated this image by submitting selfies to Lensa’s “Magic Avatars” function. (Source: Lensa)

Users Warn about Use of Lensa for Image Manipulation, Especially for Teens

Do you Lensa? It seems lots of folks around the world have quickly taken to the new AI app, especially on Facebook. It makes anyone look like a fairy-tale character of sorts.

Zoe Sottile of cnn.com has broken down some of the app's features and raised a few of the warnings that come with using Lensa.

Creating a ‘Magic Avatar’

The pictures making the rounds online are products of Lensa’s “Magic Avatars” function. To try it out, you’ll need to first download the Lensa app on your phone. A yearlong subscription to the app, which also provides photo editing services, costs $35.99. But you can use the app for a weeklong free trial if you want to test it out before committing. Generating the magic avatars requires an additional fee. As long as you have a subscription or free trial, you can get 50 avatars for $3.99, 100 for $5.99, or 200 for $7.99.

Even AI can learn to love capitalism, I suppose. Lensa is a product of Prisma, which first gained popularity in 2016 with a feature that let users transform their selfies into images in the style of famous artists.

Concern over Sexualization of Photos

One of the problems Sottile encountered in the app has also been described by other women online. Even though all the images she uploaded showed her fully clothed and were mostly close-ups of her face, the app returned several images with implied or actual nudity.

“In one of the most disorienting images, it looked like a version of my face was on a naked body,” Sottile wrote. “In several photos, it looked like I was naked but with a blanket strategically placed, or the image just cut off to hide anything explicit. And many of the images, even where I was fully clothed, featured a sultry facial expression, significant cleavage, and skimpy clothing which did not match the photos I had submitted. This was surprising, but I’m not the only woman who experienced it. Olivia Snow, a research fellow at UCLA’s center for critical internet inquiry and professional dominatrix, told CNN the app returned nude images in her likeness even when she submitted pictures of herself as a child, an experience she documented for WIRED.”

Digital Artists Complain of Duplication

Lensa’s technology relies on a deep learning model called Stable Diffusion, according to its privacy policy. Stable Diffusion was trained on a massive collection of digital art and other images scraped from the internet, drawn from a dataset called LAION-5B. Artists cannot opt in or opt out of having their art included in the dataset and thus used to train the algorithm. Some artists complain that Stable Diffusion relies on their artwork to generate its images, yet they are neither credited nor compensated. Earlier this year, CNN reported on several artists who were upset to find that their work had been used without their consent or payment to train the neural network behind Stable Diffusion.

Lensa’s owner, Prisma, commented:

“‘Whilst both humans and AI learn about artistic styles in semi-similar ways, there are some fundamental differences: AI is capable of rapidly analyzing and learning from large sets of data, but it does not have the same level of attention and appreciation for art as a human being,’ wrote the company on Twitter on December 6.”

All in all, it may be a fun app if you need a little pick-me-up online, but users warn women to be cautious about how these apps use their images. Revenge porn is one possible result, Sottile warned.

Read more at cnn.com