Home devices such as Amazon’s Echo can record everyday conversations. (Source: Amazon)

Voice Device Users Seek Security through Adversarial Attack Strategy

The headline asks, “Is technology spying on you?” The answer is, of course, yes. You need not have any doubt about that. So the next question is: do you care if you are spied on or listened to? If you do, there is a new approach that might help keep you and your life a little more secure and private.

Matthew Hutson, a freelance science journalist in New York City, has a piece at science.org about a new technology called Neural Voice Camouflage. It generates custom audio noise in the background as you talk, confusing the artificial intelligence (AI) that transcribes our recorded voices.

Hutson lists several ways you might be eavesdropped on. Big Brother is listening. Companies use “bossware” to listen to their employees when they’re near their computers. Multiple “spyware” apps can record phone calls. And home devices such as Amazon’s Echo can record everyday conversations.

You see, we are probably not nearly as private as we wish we were, on many levels. The new system uses an “adversarial attack.” The strategy employs machine learning, in which algorithms find patterns in data, to tweak sounds in a way that causes an AI, but not people, to mistake them for something else. Essentially, you use one AI to fool another.
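To make the idea concrete, here is a toy sketch of an adversarial perturbation. It is not the Neural Voice Camouflage system, which works on live audio with a neural network. It assumes a made-up linear "detector" (the weights, bias, and feature values are invented for illustration) and nudges each input value by a small, bounded amount in the direction that lowers the detector's score the most.

```python
# Toy illustration only: a hypothetical linear detector, not a real speech model.

def score(weights, bias, features):
    """Detector output: positive means 'recognized', negative means 'missed'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, features, epsilon):
    """Shift every feature by at most epsilon to push the score down.

    For a linear model, the gradient of the score with respect to the
    input is just the weight vector, so stepping against sign(weight)
    is the most damaging change for a given maximum per-feature shift.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Invented numbers, chosen so the effect is easy to see.
weights = [0.5, -1.0, 0.25]
bias = 0.0
clean = [1.0, 0.2, 0.4]

noisy = perturb(weights, clean, epsilon=0.3)

if __name__ == "__main__":
    print("clean score:", score(weights, bias, clean))
    print("noisy score:", score(weights, bias, noisy))
```

No single value moves by more than 0.3, yet the detector's score changes sign. The real system faces a much harder version of the same problem, because it has to fool a far more complex model while staying unobtrusive to human listeners.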

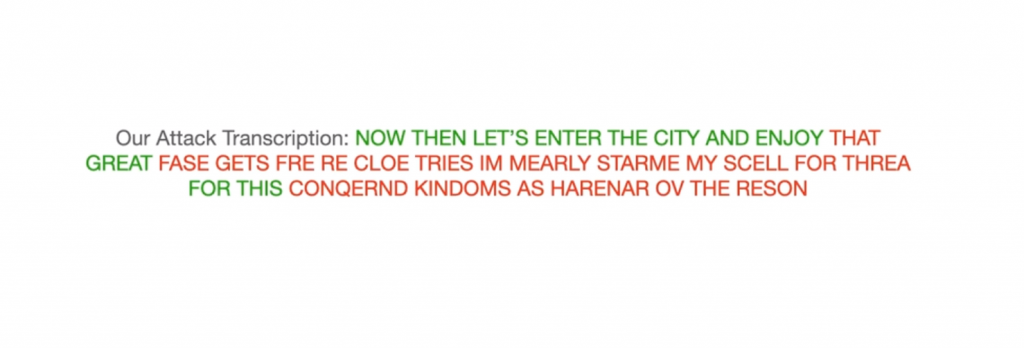

The article goes on to explain how this type of problem was addressed and how well the solution appears to work. It’s not 100% yet, but it’s getting close. Below is a sample of what a printout of a spoken sentence looks like once it is camouflaged.

The article also includes a voice track that gives you a better sense of what an adversarial attack sounds like.

The scientists overlaid the output of their system onto recorded speech as it was being fed directly into one of the automatic speech recognition (ASR) systems that eavesdroppers might use to transcribe it. The system increased the ASR software’s word error rate from 11.3% to 80.2%.
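For readers unfamiliar with the metric, word error rate is conventionally the number of word substitutions, deletions, and insertions needed to turn the transcript into the reference sentence, divided by the number of words in the reference. The sketch below shows that standard calculation. The function name and the simple lowercase-and-split normalization are assumptions made for illustration, and the article does not say how the researchers' own scoring was implemented.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from transcript
            insertion = curr[j - 1] + 1  # extra word in transcript
            curr.append(min(substitution, deletion, insertion))
        prev = curr

    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One wrong word out of three.
    print(word_error_rate("the cat sat", "the bat sat"))
```

Because insertions count as errors, the rate can exceed 100% when a transcript contains many extra words.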

“I’m nearly starved myself, for this conquering kingdoms is hard work,” for example, was transcribed as “im mearly starme my scell for threa for this conqernd kindoms as harenar ov the reson”

So you get the idea. It scrambles what the machine transcribes, so any printout of your conversations comes out garbled.

The work was presented in a paper last month at the International Conference on Learning Representations, which peer reviews manuscript submissions.

Even when the ASR system was trained to transcribe speech perturbed by Neural Voice Camouflage (a technique eavesdroppers could use), its error rate remained 52.5%. In general, the hardest words to disrupt were short ones, such as “the,” but these are the least revealing parts of a conversation.

Audio camouflage is much needed, says Jay Stanley, a senior policy analyst at the American Civil Liberties Union.

“All of us are susceptible to having our innocent speech misinterpreted by security algorithms.” Maintaining privacy is hard work, he says. Or rather it’s harenar ov the reson.

See, it really does work. Even for the ACLU.

Read more at science.org
