Companies Sign Open Letter Against Weapon Systems

On July 18, an international coalition of prominent AI researchers, joined by leading corporate and nonprofit organizations in the field, signed an open letter sponsored by the Future of Life Institute opposing the development of lethal autonomous weapons systems (LAWS). The letter states that “the decision to take a human life should never be delegated to a machine,” and its signatories pledge to “neither participate in nor support the development, manufacture, trade or use of lethal autonomous weapons.”

Founded in 2014 by some of the leading thinkers in the tech scene, including Max Tegmark, who has written at length on the topic of LAWS, the Future of Life Institute is a Boston-based think tank and research group focused on mitigating existential risks to humanity. It boasts an impressive advisory board of tech mavens and philosophers. Chief among the risks it studies are AI-related threats to human welfare, such as LAWS and negatively aligned superintelligent AI.

Adopted at the 27th International Joint Conference on Artificial Intelligence (IJCAI) in Stockholm, the open letter is the latest in a series of actions in recent years by the international community to pressure the world’s governments and most powerful tech players to ratify restrictions on LAWS before a major corporate or government power develops them. Such a development could create the conditions for an arms race in self-governing weapons with the ability to kill at horrifying scale.

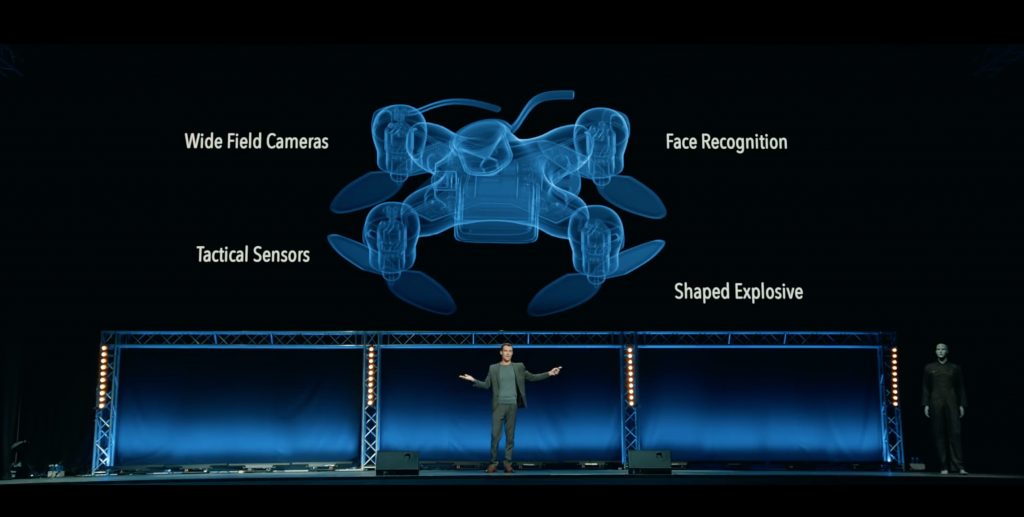

Above: Published in November by a Future of Life Institute initiative, the short film “Slaughterbots” is a horrifying piece of speculative sci-fi that peeks into a near future in which autonomous weapons are hijacked by ideologically motivated terrorists to carry out mass murder beyond human control.

The letter describes the technology as “dangerously destabilizing for every country and individual” and argues that the international community should move proactively to prevent the proliferation of potentially uncontrollable self-operating weapons, noting that “stigmatizing and preventing such an arms race should be a high priority for national and global security.”

The pledge’s signatories include 222 organizations and 2,833 people, an impressive who’s who of AI research and development in the corporate, nonprofit and university spheres. Google DeepMind, the XPRIZE Foundation, the Swedish AI Society and the European Association for AI are among the supporters, as are professors and researchers at the world’s premier universities.

According to a statement by Max Tegmark, the pledge represents a shift “from talk to action, implementing a policy that politicians have thus far failed to put into effect.” Describing AI as having “huge potential to help the world” if properly employed, Tegmark compares the technology to biological research, likening LAWS and other abuses of AI to the misuse of biology to create bioweapons: “AI weapons that autonomously decide to kill people are as disgusting and destabilizing as bioweapons, and should be dealt with in the same way.”

Marines take cover near General Dynamics’ armed MUTT robot in 2016. While presently under human control, such armed military drones could in the near future be given complete autonomy, removing human conscience and judgement from the weighty decision to kill. Image via U.S. Marine Corps.

At previous IJCAI sessions in 2015 and 2017, researchers signed similar open letters against the development of LAWS, including the widely covered 2017 letter endorsed by Elon Musk and other AI luminaries calling on the United Nations to ban autonomous weapons. Due in part to such pressure from the international research community, the U.N. has held preliminary hearings on the topic and will next meet in August to discuss LAWS and potentially enact international regulation of the weapons. To date, 26 U.N. member nations have called for an outright ban on the development of such technology.

The full text of the pledge, also available here with a full list of signatories, is below:

“Artificial intelligence (AI) is poised to play an increasing role in military systems. There is an urgent opportunity and necessity for citizens, policymakers, and leaders to distinguish between acceptable and unacceptable uses of AI.

In this light, we the undersigned agree that the decision to take a human life should never be delegated to a machine. There is a moral component to this position, that we should not allow machines to make life-taking decisions for which others – or nobody – will be culpable. There is also a powerful pragmatic argument: lethal autonomous weapons, selecting and engaging targets without human intervention, would be dangerously destabilizing for every country and individual. Thousands of AI researchers agree that by removing the risk, attributability, and difficulty of taking human lives, lethal autonomous weapons could become powerful instruments of violence and oppression, especially when linked to surveillance and data systems. Moreover, lethal autonomous weapons have characteristics quite different from nuclear, chemical and biological weapons, and the unilateral actions of a single group could too easily spark an arms race that the international community lacks the technical tools and global governance systems to manage. Stigmatizing and preventing such an arms race should be a high priority for national and global security.

We, the undersigned, call upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons. These currently being absent, we opt to hold ourselves to a high standard: we will neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons. We ask that technology companies and organizations, as well as leaders, policymakers, and other individuals, join us in this pledge.”
