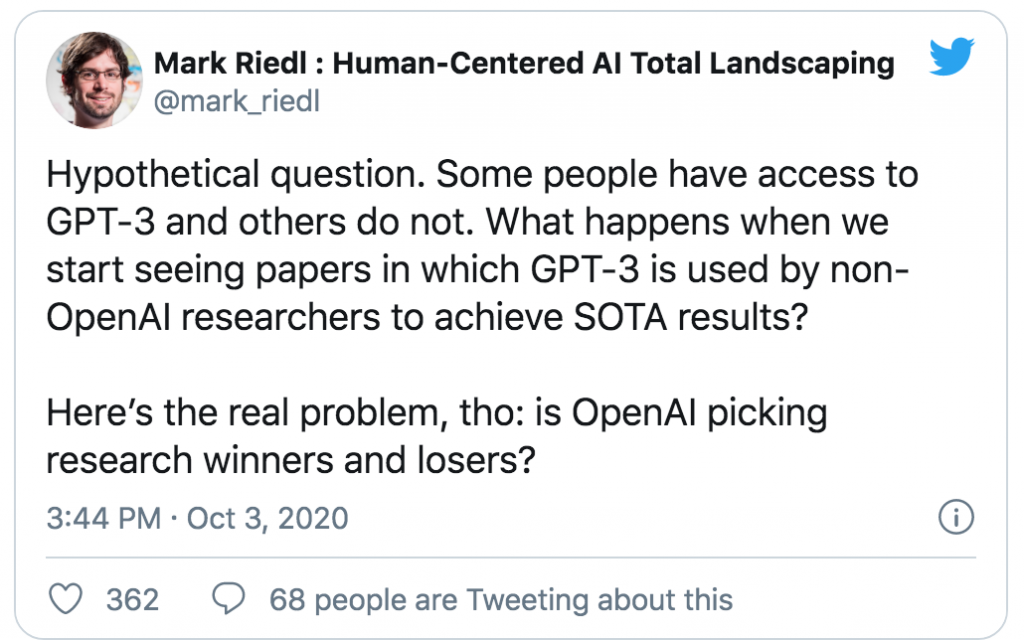

Researchers are calling out AI tech giants for lack of transparency.

The Drawback of Limited Access to Powerful GPT-3 Concerns Researchers Seeking to Duplicate Results

Successful science is replicable science. That is, when you run an experiment and write a paper about the results, one of your peers should be able to repeat that experiment and get the same results. An eye-opening article this week from technologyreview.com reports that AI scientists and their companies are not behaving like researchers in other sciences. That has people concerned, and the people concerned are AI scientists.

AI moves quickly from research labs to real-world applications, with a direct impact on people’s lives. But machine-learning models that work well in the lab can fail during public use—with potentially dangerous consequences. Replication by different researchers in different settings would expose problems sooner, making AI stronger for everyone.

The author of the article, William Douglas Heaven, writes:

Last month Nature published a damning response written by 31 scientists to a study from Google Health that the journal published earlier this year. Google described successful trials of an AI that found signs of breast cancer in medical images. But according to its critics, the Google team provided so little information about its code and how it was tested that the study amounted to nothing more than a promotion of proprietary tech.

“We couldn’t take it anymore,” says Benjamin Haibe-Kains, the lead author of the response, who studies computational genomics at the University of Toronto. “It’s not about this study in particular—it’s a trend we’ve been witnessing for multiple years now that has started to really bother us.”

Haibe-Kains and his colleagues joined the ranks of numerous scientists complaining about the lack of transparency in AI research.

“When we saw that paper from Google, we realized that it was yet another example of a very high-profile journal publishing a very exciting study that has nothing to do with science,” he says. “It’s more an advertisement for cool technology. We can’t really do anything with it.”

That divide could make it harder for both sides of this issue to replicate and verify results.

What’s stopping AI replication from happening as it should is a lack of access to three things: code, data, and hardware. According to the 2020 State of AI report, a well-vetted annual analysis of the field by investors Nathan Benaich and Ian Hogarth, only 15% of AI studies share their code. Industry researchers are bigger offenders than those affiliated with universities. In particular, the report calls out OpenAI and DeepMind for keeping code under wraps.

A chart from the State of AI Report 2020 shows how few companies reveal the code used so that researchers can analyze it.

This story delves into the issue and cites several examples. The growing gap in access to AI tools, both data and hardware, makes the problem difficult to solve. Proprietary data, such as the information Facebook collects on its users, and sensitive data, like private medical records, complicate finding solutions. Tech giants are carrying out more and more research on enormous, expensive clusters of computers that few universities or smaller companies have the resources to access.

read more at technologyreview.com
