According to some providers, AI has improved mental health therapy. Other providers reject the use of AI in this field entirely.

AI, Chatbots Are Becoming First Line of Defense in Mental Health Treatment

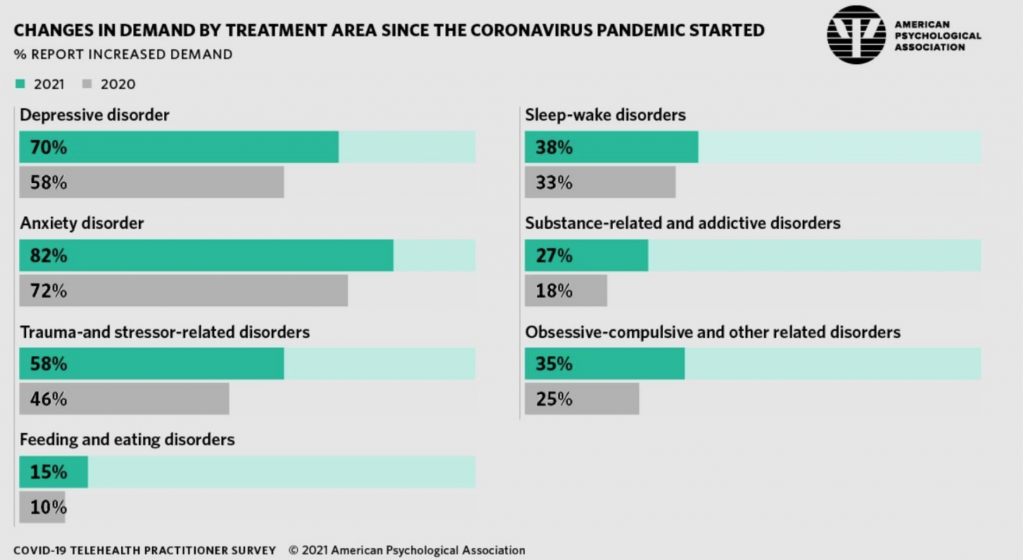

One of the major stories directly related to the global pandemic is its effect on the mental health of the world’s population. One solution that has moved to the forefront of this mental health crisis is the use of AI and chatbots to address depression and other mental health disorders.

According to interviews with mental health professionals on wired.com, this may not be the best answer for many people because the algorithms are flawed. Wired reporter Pragya Agarwal writes:

“Emotional AI algorithms, even when trained on large and diverse data sets, reduce facial and tonal expressions to emotion without considering the social and cultural context of the person and the situation. While, for instance, algorithms can recognize and report that a person is crying, it is not always possible to accurately deduce the reason and meaning behind the tears. Similarly, a scowling face doesn’t necessarily imply an angry person, but that’s the conclusion an algorithm will likely reach. Why? We all adapt our emotional displays according to our social and cultural norms so that our expressions are not always a true reflection of our inner states. Often people do “emotion work” to disguise their real emotions, and how they express their emotions is likely to be a learned response, rather than a spontaneous expression. For example, women often modify their emotions more than men, especially the ones that have negative values ascribed to them such as anger, because they are expected to.”

AI technologies could also worsen gender and racial inequalities. For example, a 2019 UNESCO report noted that the gendering of AI technologies with “feminine” voice-assistant systems reinforced stereotypes of emotional passiveness and servitude in women.

The amount of training and study required to become a psychiatrist or psychologist is substantial, to say nothing of the life experiences that person goes through as a human interacting with other humans, which help form their opinions. It is a tricky path for AI to tread, according to Agarwal, who is a behavior and data scientist and the author of HYSTERICAL: Exploding the Myth of Gendered Emotions.

Another article on forbes.com raises the level of concern.

If you take a look at social media, you will see people who are proclaiming ChatGPT and generative AI as the best thing since sliced bread. Some suggest that this is sentient AI. Others worry that people are getting ahead of themselves and seeing what they want to see: they have a shiny new toy that they believe works perfectly, but that’s far from reality.

Those in AI ethics and law fields are seriously worried about this burgeoning trend, and rightfully so.

Generative AI

The Forbes article by Lance Eliot takes a skeptical look at smartphone mental health apps and generative AI, which composes text as though it were written by a human.

All you need to do is enter a prompt, such as the sentence “Tell me about Abraham Lincoln,” and generative AI will provide you with an essay about Lincoln. This is commonly classified as generative AI that performs text-to-text output, or what some prefer to call text-to-essay output. You might have heard about other modes of generative AI, such as text-to-art and text-to-video.

Generative AI can also give mistaken answers to the person it interacts with, and that person may have no idea that the information they are receiving is wrong, whether factually, ethically, or even legally.

Both articles linked below are worth the time to read for anyone following this use of AI. The technology may or may not be 100% reliable, and when it concerns mental health, that is too big a risk for many medical providers to take.

read more at wired.com

or at forbes.com
