People are creating the DALL-E of porn with Stable Diffusion—and the company behind the tool wants no part of it. (Source: Vice.com)

Stability AI Image Generator Used to Create Figures Some Describe as Porn

Uh-oh. We suppose it was bound to happen, even if the company that invented the tool never intended it to be used in this manner. As a matter of fact, its makers begged the users of this free program not to abuse it.

By “it,” we mean using an open-source program to generate pornographic images. Samantha Cole of vice.com explains what Stable Diffusion is and what it has to do with this explosion of graphic porn. What’s even harder to process is that many of these questionable generated images can be, and are, considered art, not smut.

Recently, after an invite-only testing period, artificial intelligence company Stability AI released its text-to-image generation model, called Stable Diffusion, into the world as open access. Like DALL-E Mini or Midjourney, Stable Diffusion is capable of creating vivid, even photorealistic images from simple text prompts using neural networks. Unlike DALL-E Mini or Midjourney, whose creators have implemented limits on what kind of images they can produce, Stable Diffusion’s model can be downloaded and tweaked by users to generate whatever they want. Inevitably, many of them are generating porn.

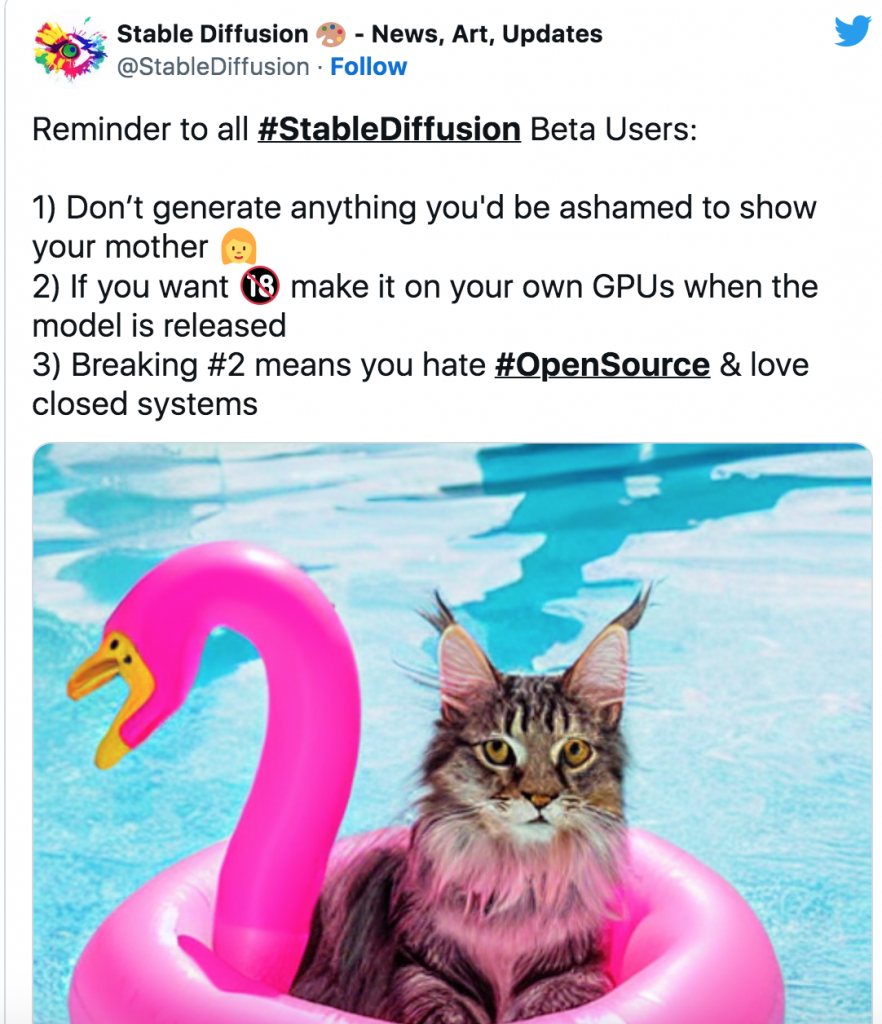

Pornographic images have been popular at least as far back as ancient Greece, and perhaps further. So the fact that some users have taken up the latest tools to make them is just that: the latest way to create art. And yes, some of the images will strike some people as smutty. The company knew this well in advance and politely asked its users to respect Stability AI’s wishes and moral guidance.

Take a look at their tweeted request.

Now here is a quote from the creator of one server where these images are shared:

“The truth is, I created this server because while some of the images are simply pornographic, and that’s fine, I found many of the images to be incredibly artistic and beautiful in a way you just don’t get in that many places,” they told Motherboard. “I don’t like the pervasive, overwhelming prudishness and fear of erotic art that’s out there, I started this server because I believe that these models can change our perception of things like nudity, taboo, and what’s fair by giving everyone the ability to communicate with images.”

The article includes links if you want to see what the naughty art looks like. Here is a related piece by the same author: Frankenstein’s Monster: Images of Sexual Abuse Are Fueling Algorithmic Porn.

Cole’s article covers a lot of technical information that a layperson is unlikely to be well versed in. For instance:

Stable Diffusion uses a dataset called LAION-Aesthetics. According to Techcrunch, Mostaque funded the creation of LAION 5B, the open source, 250-terabyte dataset containing 5.6 billion images scraped from the internet (LAION is short for Large-scale Artificial Intelligence Open Network, the name of a nonprofit AI organization). LAION-400M, the predecessor to LAION 5B, notoriously contained abhorrent content; a 2021 preprint study of the dataset found that it was full of “troublesome and explicit images and text pairs of rape, pornography, malign stereotypes, racist and ethnic slurs, and other extremely problematic content.” The dataset is so bad that the Google Research team that made Imagen, another powerful text-to-image diffusion model, refused to release its model to the public because it used LAION-400M and couldn’t guarantee that it wouldn’t produce harmful stereotypes and representations.

Well, as the old saying goes: where there’s a will, there’s a way.

If you have an interest in the technical side, we highly recommend this piece. It will entertain and inform you about a new open-source tool that sits at the cutting edge of this month’s high-tech AI toys.

Remember, some of this is NSFW (Not Safe For Work), and lots of it can be found on Reddit.

read more at vice.com
