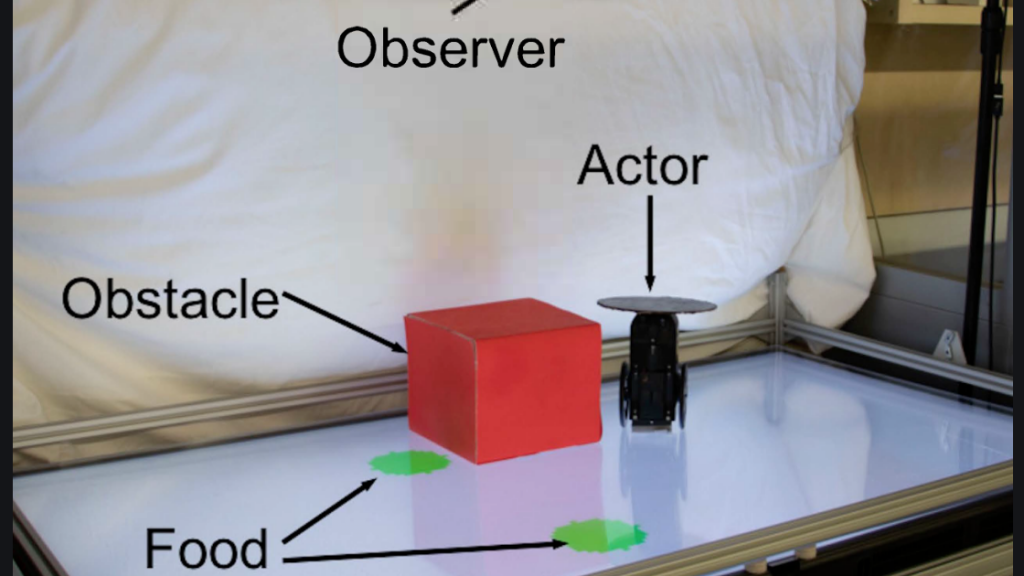

To predict the motion of the robot ‘actor,’ the A.I. ‘observer’ only needs to see the first moment of the set-up. (Source: Creative Machines Lab/Columbia Engineering)

Robots Develop Ability to Predict Behaviors, Indicating Step Towards Empathy

The robot in the Lost In Space television show was programmed to protect and interact with humans, warning each week, “Danger, Will Robinson!” While it lacked emotion itself, it had the ability to detect it in humans, from happiness and loneliness to anger.

Now, in the 21st century, AI researchers are working to teach algorithms to predict not only human emotions but also behaviors, in an effort to teach empathy, according to a story on Inverse.com.

“Using only visual data and no pre-programmed logic, researchers trained an AI to accurately predict the final action of a secondary robot ‘actor.’ At its peak, the AI could accurately predict the robot actor’s final action (after only seeing its starting point) with 98.5 percent accuracy across four movement patterns.”

Researchers say this development is important because it’s evidence of the AI having “theory of mind,” a cognitive trait in humans and primates that facilitates social communication ranging from play hiding to lying and deception. Studying its development in robots could help researchers teach them to be more human-like.

“Our findings begin to demonstrate how robots can see the world from another robot’s perspective,” wrote Boyuan Chen, lead author of the study and computer science Ph.D. student at Columbia, in a statement. “The ability of the observer to put itself in its partner’s shoes, so to speak, and understand, without being guided, whether its partner could or could not see the green circle from its vantage point, is perhaps a primitive form of empathy.”

The paper was published Monday in the journal Scientific Reports.

What sets the research apart is that the AI doesn’t just predict the next frame of an action (e.g., if one video frame shows a soccer ball being kicked, it predicts that the next frame will show the ball in the air). Instead, it can predict the final frame of a sequence of actions (e.g., the opposing goalie intercepting the shot at the last moment).

“This is akin to asking a person to predict ‘how the movie will end’ based on the opening scene,” explain the authors in the paper.

A video shows the AI/robot problem-solving exercise. Researchers say it followed its training and found its way around the test quite easily. However, the program taught itself a little more than the scientists had planned and also scored on a task that it had not been pre-trained on. When the robot performed one or a mix of the four movement patterns, the AI was able to accurately predict its actions 98.5 percent of the time.

Hod Lipson is a co-author on the study and a professor of robotics and innovation at Columbia University. In a statement, he explains that relying on visual data alone is another way to bring this AI closer to human cognition.

“We humans also think visually sometimes,” explains Lipson. “We frequently imagine the future in our mind’s eyes.”

As the researchers continue exploring the limits of AI cognition and how such abilities may represent elementary forms of human-like social intelligence, Lipson said it will be important to keep ethical concerns in mind.

“We recognize that robots aren’t going to remain passive instruction-following machines for long,” Lipson says. “Like other forms of advanced AI, we hope that policymakers can help keep this kind of technology in check, so that we can all benefit.”

The videos of Boston Dynamics robots, including a door-opening dog, give an idea of how human-like robots can behave with programming. Imagine if they were capable of predicting what their users want and responding accordingly without being asked.

read more at inverse.com
