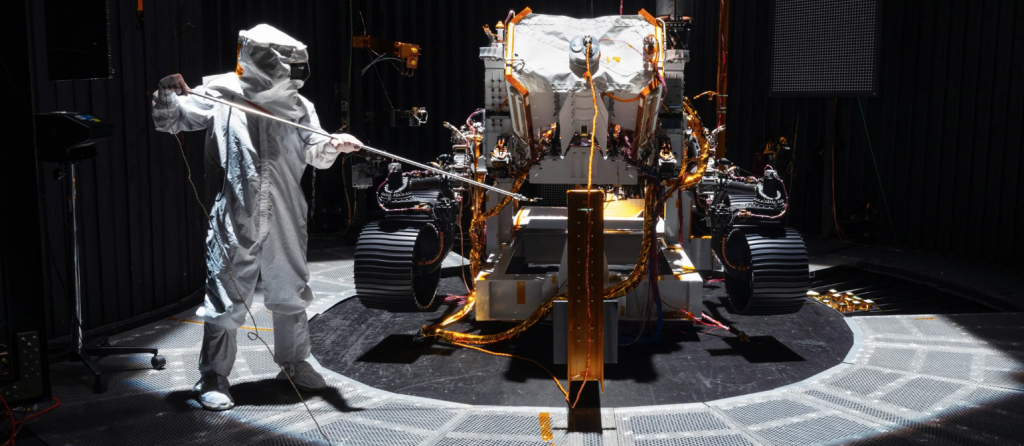

The Perseverance rover from NASA will rely on two computers, paired with stereo vision and visual odometry for navigation. (Source: NASA)

Perseverance Could Soon Be Paying off in NASA’s Upcoming Mars Mission

In an article on wired.com, Daniel Oberhaus writes about this month’s upcoming NASA launch, in which the latest Mars rover, Perseverance, will set off on a first-of-its-kind mission to the Red Planet. Its job is to collect and store geological samples so they can eventually be returned to Earth.

Perseverance will spend its days exploring the Jezero Crater, the site of an ancient Martian river delta, collecting samples in the search for evidence of extraterrestrial life.

Significantly more autonomous than any of NASA’s previous four rovers, Perseverance is designed to be a “self-driving car on Mars.” It will navigate using an array of sensors feeding data to machine vision algorithms. While terrestrial autonomous vehicles are equipped with the best computers money can buy, the main computer on Perseverance is “about as fast as a high-end PC from 1997,” according to the Wired story. Perseverance can handle autonomous driving only because NASA gave it a second computer that acts as a robotic driver:

“On previous rovers, the navigation software had to share limited computing resources with all the other systems. So to get from one point to another, the rover would take a picture to get a sense of its surroundings, drive a little, and then stop for a few minutes to figure out its next move. But since Perseverance can offload many of its visual navigation processes to a dedicated computer, it won’t have to take this stop-and-go approach to Martian exploration. Instead, its main computer can figure out how to get Perseverance where it’s supposed to go, and its machine vision computer can make sure it doesn’t hit any rocks on the way.”

One of the bigger issues for NASA in exploring Mars is the time lag between when a radio signal is sent from Earth and when it arrives. A radio signal traveling at the speed of light can take as long as 22 minutes to get from Earth to the Red Planet, depending on where the two planets are in their orbits. A lot can go wrong in 22 minutes, especially since space radiation can shut computer systems down at any time.

The long delay makes it impossible to control a rover in real-time, and waiting nearly an hour for a command to make a round trip between Mars and the Earth isn’t practical either. Perseverance has a packed schedule—it needs to drop off a small helicopter for flight tests, then collect dozens of rock samples and find a place on the surface to store them. (A later mission will bring the cache back to Earth so it can be studied for signs of life.)
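The delay figures above follow from dividing distance by the speed of light. The short sketch below works through that arithmetic. The two distances are approximate values for the closest and farthest Earth–Mars separations and are assumptions added for illustration, not numbers from the Wired story.

```python
# Speed of light in vacuum, in kilometers per second (exact by definition).
SPEED_OF_LIGHT_KM_S = 299_792.458

# Approximate Earth-Mars separations (assumed for illustration only).
CLOSEST_KM = 54.6e6    # roughly the closest approach
FARTHEST_KM = 401e6    # roughly the greatest separation


def one_way_delay_minutes(distance_km: float) -> float:
    """Time for a radio signal to cover distance_km, in minutes."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60


def round_trip_delay_minutes(distance_km: float) -> float:
    """Time to send a command and hear back, ignoring processing time."""
    return 2 * one_way_delay_minutes(distance_km)


if __name__ == "__main__":
    for label, km in (("closest", CLOSEST_KM), ("farthest", FARTHEST_KM)):
        print(
            f"{label}: one way {one_way_delay_minutes(km):.1f} min, "
            f"round trip {round_trip_delay_minutes(km):.1f} min"
        )
```

At the farthest separation the one-way delay comes out to roughly 22 minutes, which is why joystick-style control from Earth is not an option.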

Navigating Mars

Terrestrial autonomous vehicles typically use lidar systems, but NASA had concerns about how reliable lidar would be on a space mission. Instead, Perseverance will use stereo vision and visual odometry to figure out where it is on Mars. Stereo vision combines two images from a “left camera” and a “right camera” to create a 3D picture of the rover’s surroundings, while visual odometry software analyzes images separated in time to estimate how far the rover has moved.
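To make the two ideas concrete, here is a minimal sketch, not the rover’s actual software. It assumes an idealized, rectified stereo pair, where depth follows the textbook relation depth = focal length × baseline / disparity, and it approximates visual odometry as the average shift of stationary landmarks between two frames, assuming the rover moves without rotating. The function names, camera parameters, and landmark coordinates are all hypothetical.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by both cameras of a rectified stereo pair.

    focal_px:     focal length in pixels (hypothetical value in the demo)
    baseline_m:   distance between left and right cameras, in meters
    disparity_px: horizontal pixel shift of the point between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def estimate_translation(points_before, points_after):
    """Estimate rover motion from matched 3D landmarks in two frames.

    Simplifying assumption: the landmarks are stationary and the rover only
    translates (no rotation). The landmarks then appear to shift by the
    opposite of the rover's motion, so we negate the average shift.
    """
    if not points_before or len(points_before) != len(points_after):
        raise ValueError("need the same non-zero number of points in both frames")
    count = len(points_before)
    sums = [0.0, 0.0, 0.0]
    for before, after in zip(points_before, points_after):
        for axis in range(3):
            sums[axis] += after[axis] - before[axis]
    return tuple(-total / count for total in sums)


if __name__ == "__main__":
    # Hypothetical camera: 1000 px focal length, 0.4 m baseline, 20 px disparity.
    print("depth (m):", depth_from_disparity(1000.0, 0.4, 20.0))

    # Two rocks that each appear 1 m closer after the rover drives forward.
    earlier = [(1.0, 0.0, 5.0), (-1.0, 0.0, 6.0)]
    later = [(1.0, 0.0, 4.0), (-1.0, 0.0, 5.0)]
    print("rover motion (m):", estimate_translation(earlier, later))
```

Real visual odometry also has to match features between images, reject bad matches, and solve for rotation; the sketch leaves those steps out to keep the core geometry visible.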

“We were concerned about the mechanical reliability of Lidar for a space mission,” says Larry Matthies, a senior research scientist and supervisor of the computer vision group at NASA’s Jet Propulsion Laboratory. “We started using stereo vision for 3D perception at JPL decades ago when Lidars were far less mature, and it’s worked out pretty well.”

Oberhaus’s article describes the upgraded chips used for the mission, which reduce the chance of radiation causing problems. He also elaborates on the algorithms designed to keep the rover’s autonomous driving adaptable to changes in the Martian terrain.

“The more complicated the system is, the more types of decisions it could make,” says Philip Twu, a robotics system engineer at NASA’s Jet Propulsion Laboratory. “Making sure you’ve covered every possible scenario that the rover might run into has been very challenging. But it’s by doing a lot of really hands-on tests like this that we find quirks in the algorithm.”

read more at wired.com
