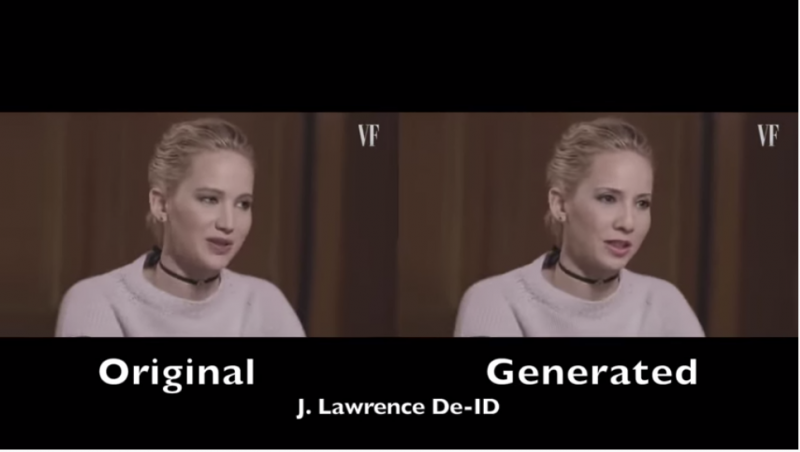

Facebook Develops Tool for Fighting against Deepfake Photos

For every amazing development in AI, an equal and opposite development with negative consequences seems to occur. One major example is how bad actors have gained the ability to digitally alter images to produce any desired effect. Most commonly, the technology superimposes the faces of well-known actresses or actors onto the original participants in pornographic videos. Many companies already have technology that can reveal computer-generated still images.

But now Facebook says it has developed technology that works in real time on videos and live feeds.

The Verge covered the social media giant’s latest claim. Facebook remains embroiled in a multibillion-dollar lawsuit over its facial recognition practices, but that hasn’t stopped its AI research division from developing technology to combat the misdeeds of which the company is accused. According to VentureBeat, Facebook AI Research (FAIR) has developed a state-of-the-art “de-identification” system that works on video, including live video. It uses machine learning to alter key facial features of a video subject in real time, tricking a facial recognition system into misidentifying the subject.

You can see an example of it in action in this YouTube video, which, because it’s de-listed, cannot be embedded elsewhere.

“Face recognition can lead to loss of privacy and face replacement technology may be misused to create misleading videos,” reads the paper explaining the company’s approach, as cited by VentureBeat. “Recent world events concerning the advances in, and abuse of face recognition technology invoke the need to understand methods that successfully deal with de-identification. Our contribution is the only one suitable for video, including live video, and presents quality that far surpasses the literature methods.”

Read more at theverge.com
