New Technologies Debuted at Annual Developers Conference

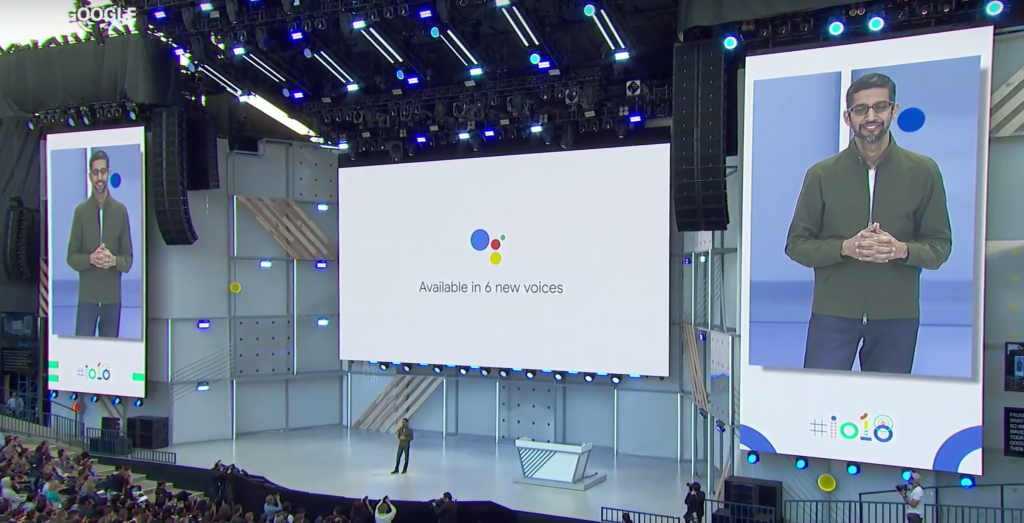

At the keynote for Google’s I/O conference in Mountain View, CA, this week, the company announced a host of new AI-driven technologies, raising the bar for Google’s competitors and placing the tech giant at the forefront of consumer AI technology.

The keynote focused mostly on mobile technology for Google’s Android OS, and most of the announcements concerned an impressive new suite of features for Google Lens, Google Assistant, and the company’s camera, navigation, and news apps.

Here are some of the most noteworthy announcements from the keynote:

• New advances in AI-driven healthcare, including improved diagnosis of conditions such as diabetic retinopathy and heart disease, as well as data-driven predictive AI that can forecast admittance rates 1-2 days sooner than traditional methods.

• Improvements to Google Assistant, the company’s voice assistant: Users will be able to choose from six new voices for Assistant. Later this year, the company will offer the voice of R&B star John Legend. The company also debuted Google Duplex, technology that allows Google Assistant to carry out human-seeming conversations on behalf of an Android user. In the company’s demo, a Duplex-powered Assistant called a human receptionist at a hair salon to schedule an appointment, employing natural-sounding voice patterns including uptalk and various “uhms” in pauses. To make the assistant family-friendly, Google Assistant will reward politeness by responding well to “please.”

• A Smart Compose function for Gmail which, with the press of the “tab” key, will allow users to autocomplete an email using intelligent predictive phrases.

• New features for the company’s camera and photo apps as well as Google Lens, Google’s highly touted AR interface. Android will now recognize people in photos on the phone and suggest sending photos to friends. The company also announced new photo editing functions, such as smart selective color to isolate subjects and an impressive AI-driven colorize feature for black and white photos. Google Lens users can enjoy advanced new camera and AR features, such as the ability to generate a PDF document from real-world text the camera is pointed at. Lens will also have the ability “to copy and paste from the real world directly to your phone” by using the camera to read real-world sources such as books, paper, or menus. In its most advanced new AR feature, Google Lens can analyze a user’s surroundings and identify objects in near real-time, for example naming a dog breed or showing where to buy a couch or handbag.

• A new Google News app for Android, iOS, and the web offering what the company describes as a revolutionary and game-changing new aggregator. The new app will analyze news articles, podcasts, and comments across the web to bring users an up-to-date “newscast” news briefing with the option to delve deeper into stories and explore them from different sources.

• ML Kit, a mobile-centric AI-based developer tool allowing for on-device machine learning capabilities with cloud accessibility.

• New planned features for the company’s Maps app which “reimagine walking navigation,” including advanced AR capabilities such as interactive directions shown live on the phone’s screen, visually overlaid recommendations, and an AR guide to lead users along a route, such as the small animated fox shown in the company’s demo. The company is also debuting “VPS,” or “visual positioning system,” which can position a user within a cityscape simply by visually identifying features and locations nearby.

For more information on these and many more exciting announcements, watch the keynote in its entire three-hour glory below:
