Leading Thinker Urges Risk Analysis of AI Safety

Author of Life 3.0: Being Human in the Age of Artificial Intelligence and founder of the Future of Life Institute, Swedish-American physicist Max Tegmark spoke with VentureBeat following a presentation at the Digital Workforce Summit in New York, analyzing AI’s risks and potential benefits, the specter of lethal autonomous weapons (LAWs), and China’s rise to AI superpower.

Preventing Superintelligent Catastrophe

Tegmark, Elon Musk, the late Stephen Hawking and others have long warned of AI’s existential risks. Derided by the media and fellow technocrats such as Mark Zuckerberg as doom merchants, Tegmark says the AI safety camp “have been falsely blamed for pessimism.” Rather than proffering “scaremongering” though, Tegmark advocates for “safety engineering.”

Tegmark urges cooperation to balance the power of AI “and the growing wisdom with which we manage it.” By “thinking about what could go wrong to make sure it goes right” and fighting complacency toward risk, AI can foster an “inspiring, high-tech future.” Tegmark likens AI to harnessing fire, while rebutting accusations of Ludditism:

“I ask them if they think fire is a threat and if they’re for fire or against fire. The difference between fire and AI is that […] AI, and especially superintelligence, is way more powerful technology. Technology isn’t bad and technology isn’t good […] the more powerful it is, the more good we can do and the more bad we can do.”

Stanislav Petrov, the Soviet officer who refused to approve a retaliatory nuclear strike against the U.S. following an alert by a supposedly fail-safe system. Technological blunders can destroy humanity absent human conscience. Via NYT / Statement Film

Analyzing mankind’s previous success containing powerful technologies, Tegmark asserts that new innovations’ dangers are tempered by wisdom gained in “screw[ing] up a lot,” such as how humanity learned to curtail fire’s destructive power. However, Tegmark notes, the past century’s deadly technologies and those yet to come in ours give humanity perilously little room to learn from mistakes:

“[W]ith more powerful technology like nuclear weapons or superhuman AI, we don’t want to have to learn from mistakes […] you wouldn’t want to have an accidental nuclear war between the U.S. and Russia tomorrow and then thousands of mushroom clouds later say, ‘Let’s learn from this mistake.’”

Limiting Lethal Autonomous Weapons

Tegmark finds ironic reassurance that superpowers won’t deploy Lethal Autonomous Weapons (LAWs) by likening the technology to another deadly analogue: bioweapons. Biology, despite deadly risks, is used almost exclusively for the benefit of mankind.

“[T]here have been people killed by chemical weapons,” says Tegmark, “but much fewer people have been killed by them than have been killed by bad human drivers or medical errors in hospitals — you know, the kinds of things that AI can solve.”

Alluding to the “clear red line beyond which people think uses of biology are disgusting and […] don’t want to work on them,” Tegmark believes that—like bioweapons—LAWs’ inherent horrors will limit their development. Establishing legal and ethical frameworks to inculcate revulsion to LAWs will “take a lot of hard work” and isn’t yet “a foregone conclusion,” according to Tegmark, who sees promise for the future in the backlash against Project Maven:

“[…] a lot of AI researchers, including the ones who signed the letter at Google, say that they want to draw lines, and there’s a very vigorous debate right now. It’s not clear yet which way it’s going to go, but I’m optimistic that we can make it go in the same good direction that biology went.”

Along with ethical strictures, Tegmark foresees international pressure limiting LAWs.

“If you look at biological weapons, they’re actually pretty cheap to build, but the fact of the matter is we haven’t really had any spectacular bioweapons attacks […] what’s really key is the stigma by itself,” Tegmark said. Regulating LAW proliferation is actually in the superpowers’ best interest, lest mass production make LAWs cheap and abundant enough for less scrupulous actors to acquire.

“Once [LAWs] get mass-produced just like regular firearms,” he says, “it’s just a matter of time until North Korea has them, ISIS has them, and Boko Haram has them, and they’re flooding the black markets all over the world […] it’s not in their interest for a new technology to come along which is so cheap that all of the local terrorist groups and rogue state enemies come and get it.”

While limited use is inevitable, much like occasional instances of bioterrorism, Tegmark argues that “homebrew” LAWs used by “a once-in-a-blue-moon Unabomber type […] will remain just a nuisance in the grand scheme of things.”

China’s Secret to AI Supremacy: STEM Education

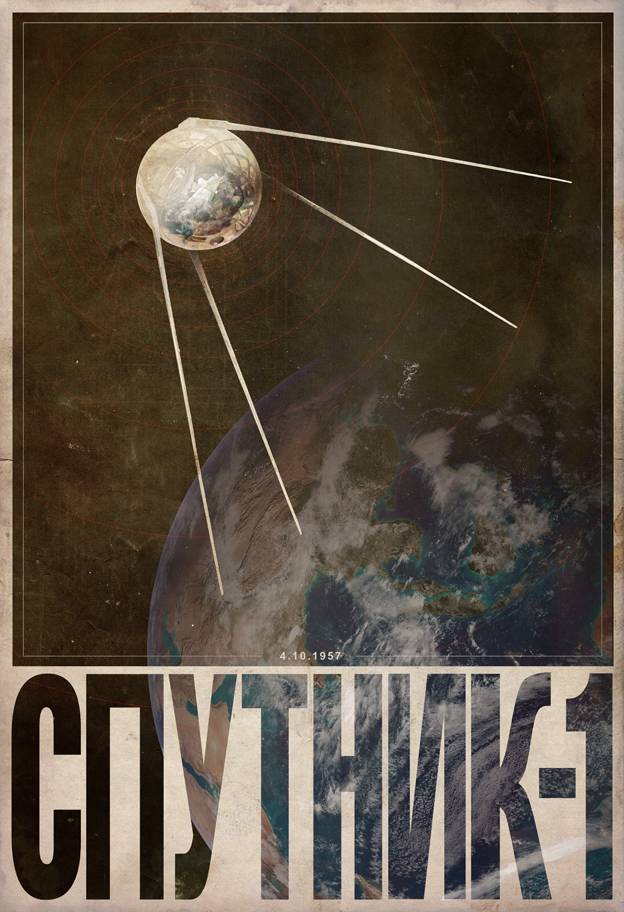

Tegmark calls AlphaGo the modern equivalent of Sputnik 1, which sparked the last century’s “space race.”

China will match the U.S. in AI within five years, according to estimates including Kleiner Perkins’, a shift that Tegmark attributes to America’s waning STEM investment.

Tegmark ascribes America’s tech supremacy to past generations’ foresight: “during the space race, the government invested very heavily in basic STEM education, which created a whole generation of very motivated young Americans who created technology companies.”

Tegmark sees 2016’s AlphaGo as China’s “Sputnik moment,” one that sparked an AI “space race,” and warns that America is “investing the least per capita in basic computer science education, whereas China, for example, is investing very heavily.”

He also criticizes the U.S. government for letting STEM investment stall, when such investment could help the country remain competitive:

“If you look at what the U.S. government gives to the National Science Foundation, it’s very lackadaisical compared to what the Chinese are investing. […] We live in a country right now where we still don’t even have a national science advisor appointed over a year into the presidency.”
