Researchers let algorithms learn to play word-based or text-oriented games without instructions.

AI Masters Games without Assistance of Rules, Human Guidance

Not being a gamer, or a programmer for that matter, I have little personal insight into how an algorithm learns to play a game. Even the simplest games are hard for people my age to play without a lot of instruction. For programmers, getting an AI program to learn a text-based game, one that depends on language rather than on data and math, and then to win it has often been extremely difficult.

In 2018, Mauro Comi wrote an article for towardsdatascience.com that helped explain how that process works. Most algorithms need explicitly programmed instructions to operate effectively. Recently, however, researchers have shown that an algorithm can learn to handle text-based interactions by itself if it is allowed to play on its own. Comi wrote:

“Artificial Intelligence and Gaming, contrary to popular belief, do not get along well together. Is this a controversial opinion? Yes, it is, but I’ll explain it. There is a difference between Artificial intelligence and Artificial behavior. We do not want the agents in our games to outsmart players. We want them to be as smart as it’s necessary to provide fun and engagement. We don’t want to push the limit of our ML bot, as we usually do in different Industries. The opponent needs to be imperfect, imitating a human-like behavior.

“Games are not only entertainment, though. Training an agent to outperform human players, and to optimize its score, can teach us how to optimize different processes in a variety of different and exciting subfields. It’s what Google’s DeepMind did with its popular AlphaGo, beating the strongest Go player in history and scoring a goal considered impossible at the time.”

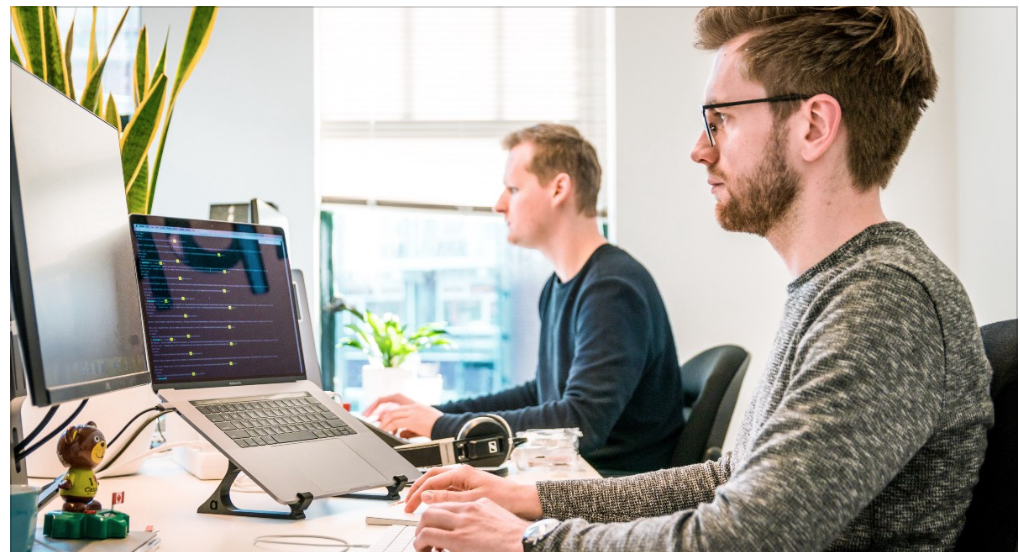

On the left, the AI does not know anything about the game. On the right, the AI is trained and learns how to play.

The excerpt above is only the beginning of Comi's article on programming game-playing AI, and we have linked the full story below.

More recently, an article by Kyle Wiggers on venturebeat.com went further, explaining one approach to training an AI algorithm. Wiggers wrote:

“Can AI learn to play text-based games like a human? That’s the question applied scientists at Uber’s AI research division set out to answer in a recent study. Their exploration and imitation-learning-based system — which builds upon an earlier framework called Go-Explore — taps policies to solve a game by following paths (or trajectories) with high rewards.

“Text-based computer games describe their world to the player through natural language and expect the player to interact with the game using text. These games are of interest as they can be seen as a testbed for language understanding, problem-solving, and language generation by artificial agents,” wrote the coauthors of a paper describing the work. “Moreover, they provide a learning environment in which these skills can be acquired through interactions with an environment rather than using fixed corpora … [That’s why] existing methods for solving text-based games are limited to games that are either very simple or have an action space restricted to a predetermined set of admissible actions.”

In a series of experiments, the researchers set Go-Explore loose on two families of games in which winning requires multi-word commands and rewards provide little feedback along the way. The first was CoinCollector, a class of text-based games where the objective is to find and collect a coin in one of a set of rooms; the second was CookingWorld, a collection of 4,440 games with 222 difficulty levels and 20 games per level, each with different entities and maps. While CoinCollector parses only five commands in total, CookingWorld accepts 18 verbs and 51 entities with a predefined grammar and a total vocabulary of 20,000 words, and it requires many actions (at least 30 for harder games) to find a reward.
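To make the Go-Explore idea a little more concrete, here is a minimal Python sketch of its exploration phase on a toy, CoinCollector-style game. Everything in it is an assumption for illustration, not Uber's code: the `ToyCoinGame` class, the five command strings, the choice of (room, score) as the archive "cell," and the uniform cell selection are all hypothetical. The loop follows the general Go-Explore pattern: keep an archive of states already reached, pick one, return to it by replaying the stored trajectory (which assumes a deterministic game), explore with random commands, and record any new states or shorter routes. The imitation-learning stage the researchers describe is left out.

```python
import random

# Hypothetical command set for a toy CoinCollector-style game (assumption).
COMMANDS = ["go north", "go south", "go east", "go west", "take coin"]


class ToyCoinGame:
    """Hypothetical toy game: a chain of rooms, with the coin in the last one."""

    def __init__(self, n_rooms=6):
        self.n_rooms = n_rooms
        self.reset()

    def reset(self):
        self.room = 0
        return self.room

    def step(self, command):
        """Apply a text command and return (room, reward, done)."""
        if command == "go east" and self.room < self.n_rooms - 1:
            self.room += 1
        elif command == "go west" and self.room > 0:
            self.room -= 1
        elif command == "take coin" and self.room == self.n_rooms - 1:
            return self.room, 1, True  # coin collected: reward and game over
        return self.room, 0, False  # every other command changes nothing


def go_explore(env, iterations=1000, explore_steps=5, seed=0):
    """Sketch of Go-Explore's exploration phase (assumed cell = (room, score))."""
    rng = random.Random(seed)
    start = env.reset()
    # Archive maps each cell to the trajectory that reached it and its score.
    archive = {(start, 0): ([], 0)}
    best = None  # shortest winning command sequence found so far

    for _ in range(iterations):
        # 1. Select a cell (uniformly here; the real system uses heuristics).
        cell = rng.choice(list(archive))
        stored_traj, score = archive[cell]

        # 2. Return to that cell by replaying its trajectory from a fresh game.
        env.reset()
        for cmd in stored_traj:
            env.step(cmd)

        # 3. Explore from the cell with random commands.
        traj = list(stored_traj)
        for _ in range(explore_steps):
            cmd = rng.choice(COMMANDS)
            room, reward, done = env.step(cmd)
            traj.append(cmd)
            score += reward
            if done:
                if best is None or len(traj) < len(best):
                    best = list(traj)
                break
            # 4. Keep new cells, or shorter routes to cells already seen.
            new_cell = (room, score)
            if new_cell not in archive or len(traj) < len(archive[new_cell][0]):
                archive[new_cell] = (list(traj), score)

    return best, archive


best, archive = go_explore(ToyCoinGame())
print(best)
```

Replaying the returned `best` list of commands in a fresh game should collect the coin. Storing whole trajectories and replaying them is what lets this kind of search "return" to a promising state without first learning a policy, which is also why it depends on the game behaving deterministically during exploration.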

The stories help explain how researchers train AI to compete in word-based games, such as Trivial Pursuit. Spoiler alert: if you play against the AI, you will probably lose.

read more at towardsdatascience.com

and at venturebeat.com
