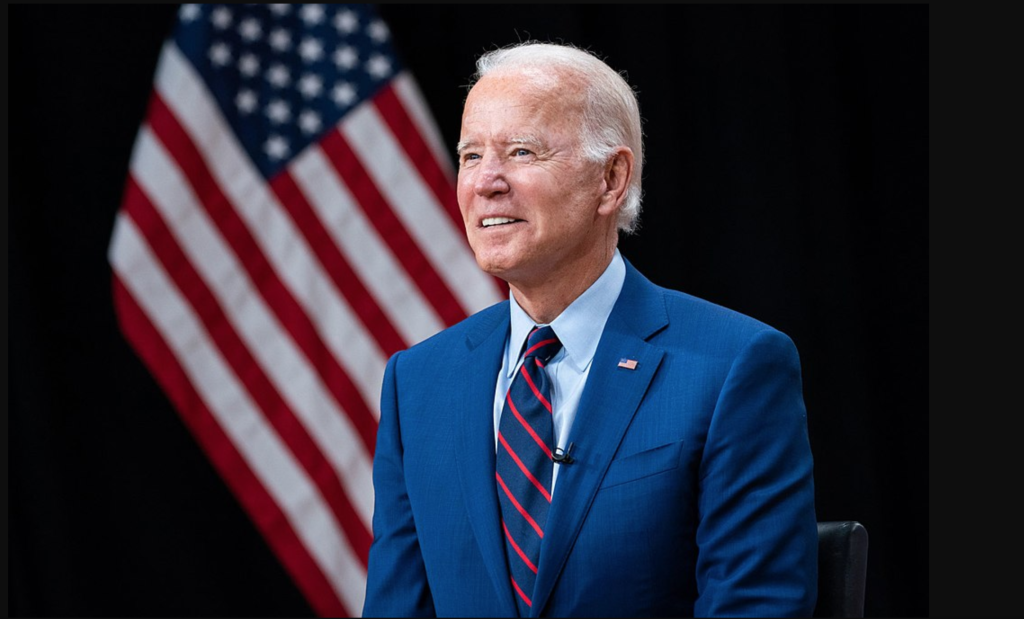

President Joe Biden’s voice was cloned, and the recording was delivered by phone to New Hampshire Democrats, telling them not to vote. (Source: Wikipedia)

Deepfake Biden Phone Call to Democrats Being Investigated as Officials Worry About More in 2024

A manipulated audio clip of U.S. President Joe Biden, created using deepfake technology, has raised concerns among disinformation experts. In the clip, a voice altered to sound like Biden asked New Hampshire voters not to vote in the Democratic primary, urging them to “save your vote for the November election.”

The incident underscores growing concern about audio deepfakes in politics. Such fakes are easy to create, hard to trace, and can be used to manipulate voters.

Robert Weissman, president of the consumer advocacy group Public Citizen, warned that “the political deepfake moment is here” and urged lawmakers to put protections against fake audio and video recordings in place to prevent “electoral chaos.” The use of deepfakes in politics is not new: audio deepfakes, for instance, circulated on social media before Slovakia’s parliamentary elections last year.

Deepfake technology is increasingly being adopted in U.S. political campaigns to engage with voters. The trend coincides with significant investment in voice-cloning startups such as ElevenLabs, which recently raised funds at a valuation of $1.1 billion.

The origin of the Biden deepfake audio is still unclear and under investigation. Tracking its source is difficult because it was distributed by phone, which leaves less of a digital trail. The incident confirms fears that deepfakes could be used not only to influence public opinion but also to deter people from voting. Even minor confusion caused by such misinformation could significantly affect election results.

The U.S. Federal Election Commission has taken steps toward addressing the issue of political deepfakes, and some states have proposed laws to regulate them. Meanwhile, election officials are preparing for potential deepfake-related challenges, running training exercises that simulate scenarios involving deepfakes. However, deepfake detection tools are still in their early stages and their accuracy remains uncertain.

Read more at time.com
