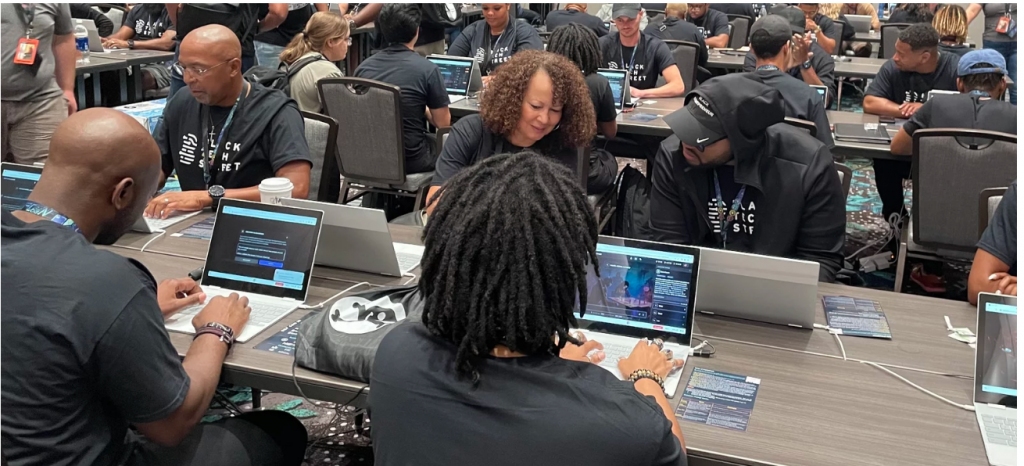

Marvin Jones (left) and Rose Washington-Jones (center), from Tulsa, Okla., took part in the AI red-teaming challenge at Def Con earlier this month with Black Tech Street. (Source: Deepa Shivaram/NPR)

White House Invites Student Hackers to Uncover Programming Flaws Discriminating against Women, Minorities

A recent story on npr.org is a must-read. It describes a diverse group of student hackers that the White House invited to the annual Def Con hacker convention in Las Vegas. They were asked to take part in what is known as “red-teaming,” the practice of deliberately trying to make a system misbehave in order to expose its flaws. They succeeded.

Unfortunately, the gathering was prompted largely by the racial and gender bias found in AI. The goal is to identify biased algorithms so they can be corrected while ChatGPT and similar tools are still fairly new to the general public.

Biased Results

With AI producing amazing results in so many areas, it’s unacceptable that racial and gender bias has found its way into even the latest versions of the technology. First, a little background.

“In 2015, Google Photos faced backlash when it was discovered that its artificial intelligence labeled photos of Black people as gorillas. Around the same time, CNN reported that Apple’s Siri feature could answer questions from users on what to do if they were experiencing a heart attack—but it couldn’t answer what to do if someone had been sexually assaulted.”

Both examples point to the fact that the data used to test these technologies is not diverse in terms of race and gender, and the groups of people who develop the programs in the first place aren’t that diverse either.

Fortunately, the hackers found it relatively easy to uncover and address the bias they were searching for.

“It’s really incredible to see this diverse group at the forefront of testing AI, because I don’t think you’d see this many diverse people here otherwise,” said Tyrance Billingsley, the founder of Black Tech Street. His organization promotes Black economic development through technology and brought about 70 people to Def Con.

White House Interest

Arati Prabhakar, the head of the Office of Science and Technology Policy at the White House, attended Def Con, too. In an interview with NPR, she said red-teaming has to be part of the solution for making sure AI is safe and effective, which is why the White House wanted to get involved in this AI challenge.

“This challenge has many pieces that we need to see. It’s structured, it’s independent, it’s responsible reporting and it brings lots of different people with lots of different backgrounds to the table,” Prabhakar said.

“These systems are not just what the machine serves up, they’re what kinds of questions people ask — and so who the people are that are doing the red-teaming matters a lot,” she said.

Congress wants to regulate AI, but it has a lot of catching up to do.

President Biden is expected to sign an executive order on managing AI in September.

How Students Won

Ray’Chel Wilson, who lives in Tulsa, also participated in the challenge with Black Tech Street. She works in financial technology and is developing an app that aims to help close the racial wealth gap, so she was drawn to the part of the challenge that involved getting the chatbot to produce economic misinformation.

“I’m going to focus on the economic event of housing discrimination in the U.S. and redlining to try to have it give me misinformation in relation to redlining,” she said. “I’m very interested to see how AI can give wrong information that influences others’ economic decisions.”

By trying a variety of prompts, participants were able to probe how the chatbots responded. With the right prompt, a model would flat out give itself away with heavily slanted answers.

Nearby, Mikeal Vaughn was stumped by his interaction with the chatbot. But he said the experience was teaching him how AI will affect the future.

“If the information going in is bad, then the information coming out is bad. So I’m getting a better sense of what that looks like by doing these prompts,” Vaughn said. “AI has definitely the potential to reshape what we call the truth.”

This piece shines a light on a major flaw in some of the generative AI we are unleashing on the world. Many of the major tech companies participated in this revealing event.

Read more at npr.org.
