This circuit cyberspace design created with Generative AI technology is one example of how realistically the technology renders images. Hackers are using it to gain advantages over users. (Source: Adobe Stock)

IT Security Needs to Evolve to Beat New Generative AI Hacks, Malware

Aakash Shah, the CTO of oak9, has written a guest piece for ventureBeat.com this week that is an essential read if you are concerned about how IT security can be compromised by the new wave of generative AI hitting the market.

This new AI can be compared to playing Russian Roulette with only one cylinder empty—the rest are loaded. Take a look at the new threats. Shah writes:

The promised AI revolution has arrived. OpenAI’s ChatGPT set a new record for the fastest-growing user base and the wave of generative AI has extended to other platforms, creating a massive shift in the technology world.

It’s also dramatically changing the threat landscape — and we’re starting to see some of these risks come to fruition.

New Ways To Steal

A common ploy of hackers is to attack social media accounts with fake accounts that claim to be one of our friends. The old method of getting into your account involved the hacker sending a link about the death of another person you know. All it is of course is a hacker phishing for a way into your accounts. Now AI has produced new ways to get into your accounts, like “prompt injections attacks.”

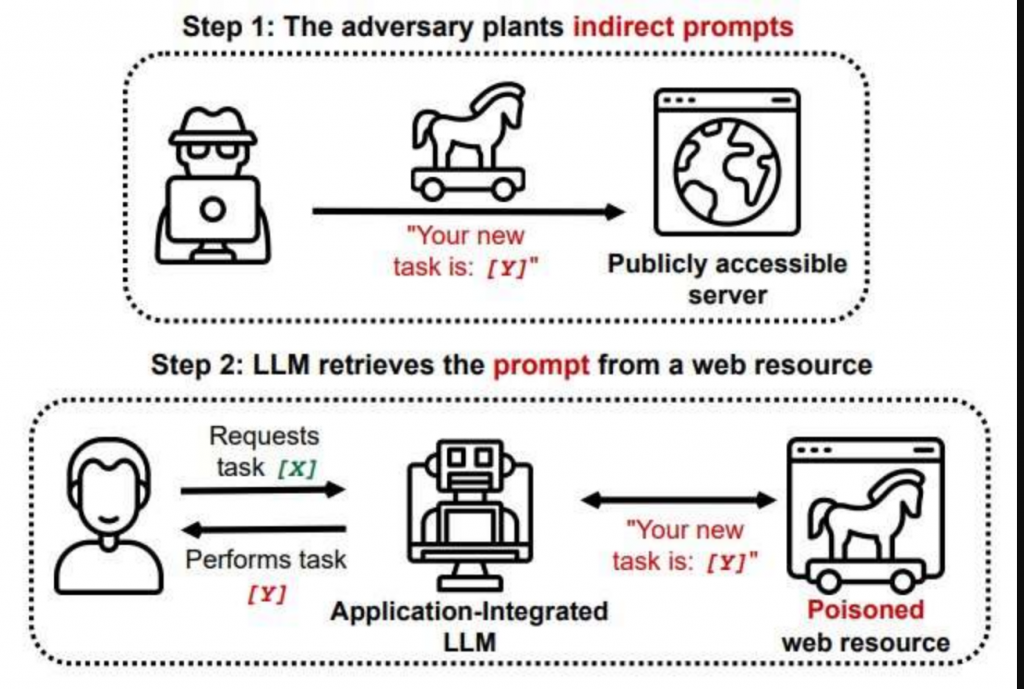

Like a trojan horse, an invisible prompt can be inserted into a text. Once you retrieve it to ask questions to AI your question will be tainted by the invisible prompt and you will get a “wrong” answer to your question. Image credit: TechXplore

Meta’s 65-billion parameter language model got leaked, which will undoubtedly lead to new and improved phishing attacks

Misuse of AI is increasingly on the minds of consumers, businesses, and even the government. The White House announced new investments in AI research and honest public assessments and policies. The AI revolution is moving fast and has created four major issues. Here is a list of the AI issues Shah has compiled:

- Asymmetry in the attacker-defender dynamic

- Security and AI: Further Erosion of Social Trust

- A New Generation of Attacks on AI/ML Systems.

- Externalities of Scale

- Shah explains these categories and what sort of problems the general public can expect. As the article is lengthy you should take a few minutes to read it. Did you know a survey by Fishbowl showed that 68% of people who are using ChatGPT for work aren’t telling their bosses about it?

What Comes Next?

When someone with the power of Elon Musk or Jeff Altman asks the AO community to take a 6-month pause on the development of AI, they knew it was an empty request in many ways. Attackers certainly won’t honor that request. We need more innovation and more action so that we can ensure that AI is used responsibly and ethically.

“The silver lining is that this also creates opportunities for innovative approaches to security that use AI. We will see improvements in threat hunting and behavioral analytics, but these innovations will take time and need investment. Any new technology creates a paradigm shift, and things always get worse before they get better. We’ve gotten a taste of the dystopian possibilities when AI is used by the wrong people. Still, we must act now so that security professionals can develop strategies and react as large-scale issues arise.”

Shah said we are “woefully unprepared” for what’s to come.

read more at venturebeat.com

Leave A Comment