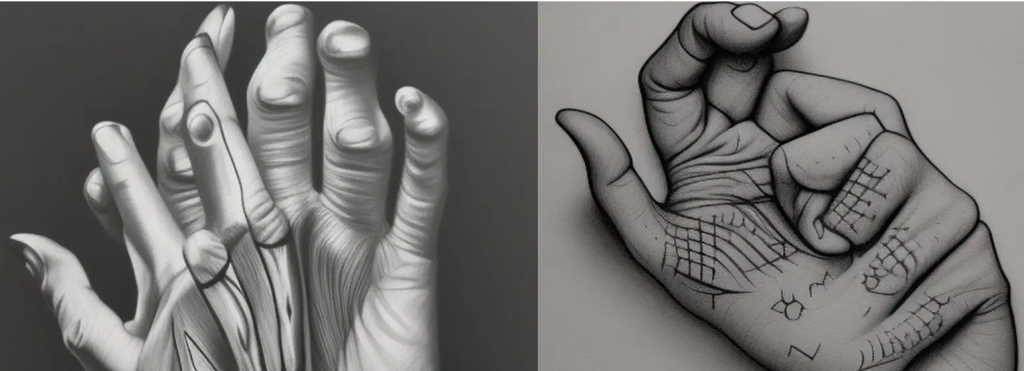

The Chatsonic program created these hand images.

When It Comes to Rendering Hands, AI Art Programs Struggle to Be Real

Artists who draw human figures will tell you that figures are harder to render than abstract objects. It turns out that even algorithms have a difficult time producing human fingers. Here is what kotaku.com had to say about this AI problem:

No matter where you sit on the enormous and complex debate regarding AI models and their use of datasets to generate new images, artworks, writing and even movies, there’s one thing everyone can agree on: they’re damned creepy at drawing human hands. But why? Especially when AIs are so capable of recreating the seemingly far greater complexity of human faces. To find the answers, we decided to speak to a leading authority on this subject: an AI.

The article's author, John Walker, did just that. He brought ChatGPT and Chatsonic into the conversation about getting fingers right:

ChatGPT is by far the most well-known AI language model just now, causing huge waves across the world with its ability to hold natural conversations, answer complex questions, and generate extraordinary poetry, writing, and even the most complicated of human discourse: game journalism. However, as yet it’s incapable of rendering pictures, music, or video, so therefore a more impartial source on these areas.

ChatGPT Finger Fouls

Walker asked ChatGPT, “Why is AI so bad at rendering human fingers?”

“Rendering realistic human fingers is challenging for AI because they are highly articulated and have complex shapes and textures,” the AI explained, adding, “capturing the subtleties of how light interacts with skin, nails, and wrinkles requires advanced modeling and rendering techniques.”

Walker goes on to explain possible reasons why AI gets even the number of fingers wrong when asked to create a hand.

ChatGPT And Signing

ChatGPT then expanded on its explanation.

“There are also AI models that specialize in generating specific types of hand images, such as hand gestures or sign language,” it stated. “For example, the Sign Language Transformer (SLT) is an AI model that has been trained to translate sign language videos into natural language text, and it includes a hand pose estimator that can accurately predict the position and orientation of fingers in the hand.”

Walker’s article also asks Chatsonic a similar set of questions.

Rival AI Chatsonic offers a far more broad suite of AI abilities—albeit far less likely to provide accurate or coherent responses (during one conversation, I found it espousing to me the mental health benefits of removing one’s own head)—I thought I’d let it respond to the topic. I began by asking it to create a few images of human hands, something ChatGPT cannot do (although of course OpenAI, the group behind ChatGPT, also owns DALL-E).

The hands were as deeply creepy as expected. Walker goes on to share why giving him the finger was so hard for AI.

Read more at kotaku.com
