‘A sea otter in the style of Girl with a Pearl Earring by Johannes Vermeer’ and ‘An ibis in the wild, painted in the style of John Audubon’ are two images generated by the improved OpenAI program. (Source: DALL-E 2)

OpenAI Researchers Still Testing DALL-E 2 Prior to Public Release

San Francisco-based OpenAI has announced that its image-generating AI, DALL-E, has received major upgrades for better performance, according to a story first reported by MIT Technology Review. The upgrades are notable enough that artnews.com has covered them as well.

The upgraded tool, called DALL-E 2, converts text prompts into images just as its predecessor did. However, the new version is reportedly far more advanced: it creates images that match the text prompt more accurately, and it can even be tweaked to incorporate different styles.

People in fields once thought safe from AI, such as the creative arts, may now find themselves competing with DALL-E 2 for illustration jobs, much as a group of bridge players in Paris didn’t expect to be beaten at their own card game by an AI.

The MIT Technology Review article points out that DALL-E 2 doesn’t just take directions for creating an image; it can also edit existing images. The researchers are now asking whether it’s appropriate to expand the definition of AI to include creativity.

If you happened to use the earlier version of the tool, it may have seemed like a fun party trick: input a few simple words, such as “avocado + armchair,” and the tool would produce a strange image that might include glitches and odd visuals but still matched the prompt.

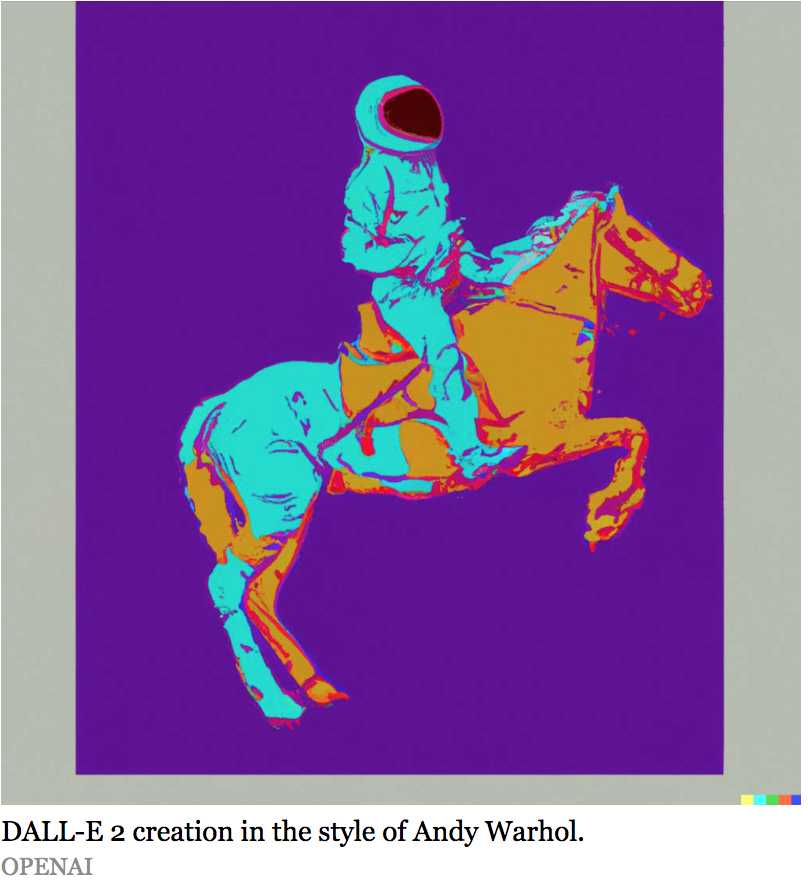

As an on-demand artist, DALL-E 2 isn’t perfect yet, either. The algorithm has trouble with appendages such as feet or hooves, as the image below reveals.

DALL-E 2 is expected to keep developing as it gathers more information. Much like a human artist, it is predicted to teach itself to become a better artistic service as it learns from its users.

“One way you can think about this neural network is transcendent beauty as a service,” Ilya Sutskever, cofounder and chief scientist at OpenAI, told MIT Technology Review. “Every now and then it generates something that just makes me gasp.”

OpenAI has not yet made DALL-E 2 easily accessible to the public because it is still testing the technology. The researchers are trying to ensure that it is not used to create violent images or deepfakes, among other concerns.

Read more at artnews.com