An AI app that is meant to determine facial features of a speaker sometimes has difficulty figuring out what the speaker may look like due to their voice’s pitch or accent.

The Speech2Face Algorithm Envisions the Speaker Based on Voice, One Image

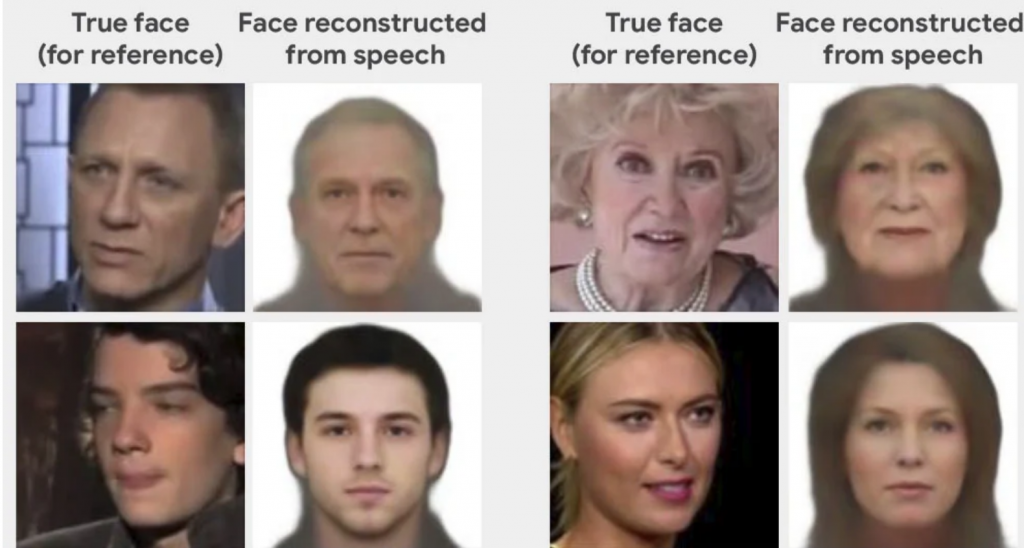

The Speech2Face algorithm can give users a video representation of a person’s face just by using the person’s voice. And it is fairly accurate in the samples we pasted below. The story was found at petapixel.com, and the algorithm uses pixels to portray faces.

AI scientists at MIT’S Computer Science and Artificial Intelligence Laboratory (CSAIL) first published a story about an AI algorithm called Speech2Face in a paperback in 2019. Like a lot of groundbreaking science, it was first mentioned in science fiction.

“How much can we infer about a person’s looks from the way they speak?” the abstract reads. “[W]e study the task of reconstructing a facial image of a person from a short audio recording of that person speaking.”

And just like that, researchers set up the experiment using lots of machine learning and an avalanche of audio/video clips showing people talking. Once trained, the AI was remarkably good at creating portraits based only on voice recordings that resembled what the speaker actually looked like.

Take a look and see what you think.

Speech2Voice Shows Successful Reproductions From Audio Data

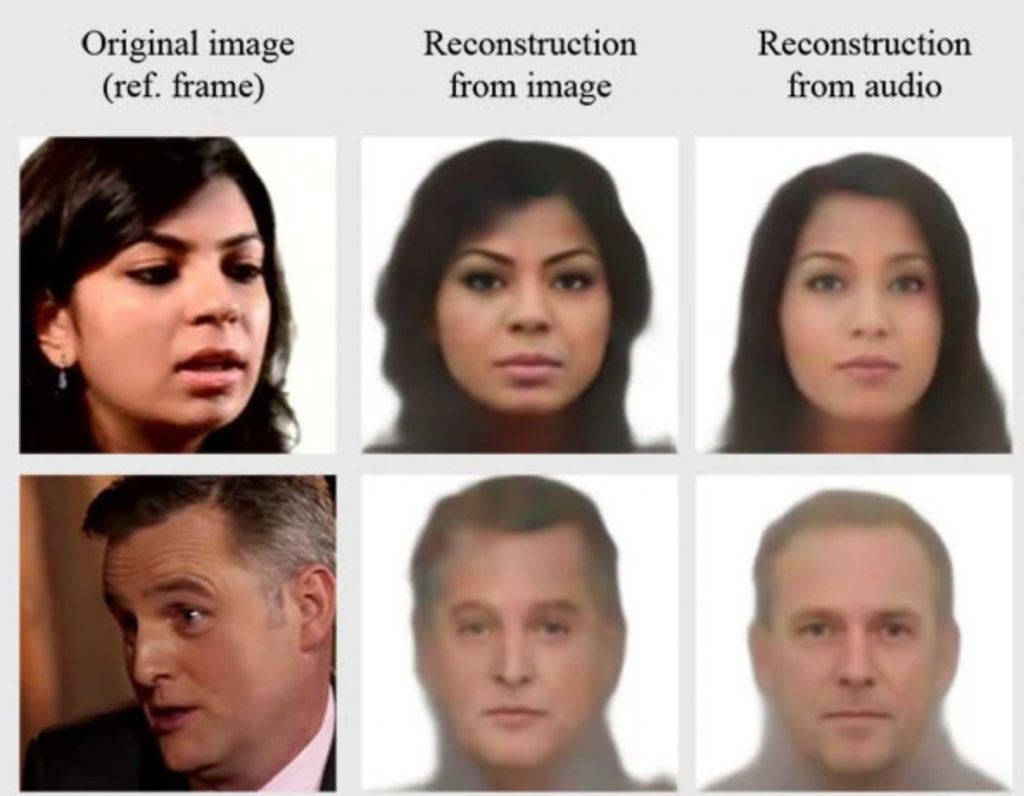

And then the researchers decided to experiment by showing the algorithm a single photograph and asking for its reproduction. Below is a look at the three images and they are remarkably similar.

Researchers say there are positive uses for the voice to face programs, in particular by putting a face to an audio recording of a suspected criminal. But that would raise a lot of legal issues at this point.

It is a clever use of AI but it could of course be used for something harmful, as all AI might be. Also, it is not 100% accurate. It has trouble with age and with ethnic accents at times. or as one person put it:

“In some ways, then, the system is a bit like your racist uncle,” writes photographer Thomas Smith. “It feels it can always tell a person’s race or ethnic background based on how they sound — but it’s often wrong.”

Maybe with a little more training, this AI will become far more accurate. Hopefully not be as dangerous as some of those ‘deepfakes’ that have become a bit of a plague. But researchers are more hopeful about its use.

“Note that our goal is not to reconstruct an accurate image of the person, but rather to recover characteristic physical features that are correlated with the input speech,” the paper states. “We have demonstrated that our method can predict plausible faces with facial attributes consistent with those of real images.

“We believe that generating faces, as opposed to predicting specific attributes, may provide a more comprehensive view of voice face correlations and can open up new research opportunities and applications.”

read more at petapixel.com

Leave A Comment