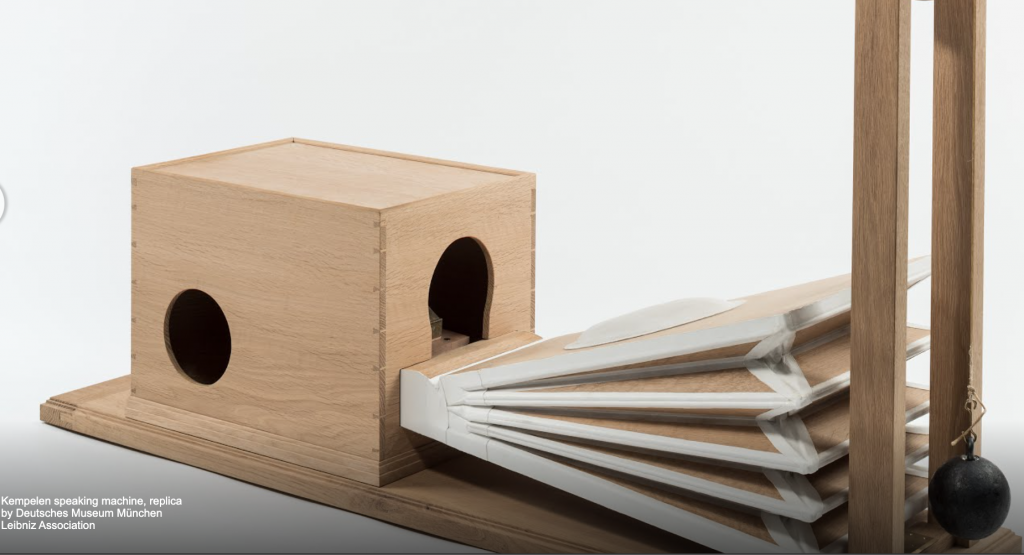

A model replica of the speaking machine by Wolfgang von Kempelen. (Source: Google Arts & Culture/Deutsches Museum)

Companies Attempt to Master the Art of Voice Cloning with Refined Algorithms

Recording the human voice involves many parameters, and copying a voice with an AI-driven system involves a whole other set. Humans have been building talking machines for a long time, and according to an article at npr.org, deepfake voices have come a long way.

“The voice is not easy to grasp,” says Klaus Scherer, emeritus professor of the psychology of emotion at the University of Geneva. “To analyze the voice really requires quite a lot of knowledge about acoustics, vocal mechanisms and physiological aspects. So it is necessarily interdisciplinary, and quite demanding in terms of what you need to master in order to do anything of consequence.”

It’s been more than 200 years since the invention of the first speaking machine by Wolfgang von Kempelen around 1800. The contraption used bellows, pipes, and a rubber mouth and nose to simulate a few utterances, like “mama” and “papa.” Now deepfake voices simulate those of actors like Samuel L. Jackson on Alexa.

Writer Chloe Veltman was even able to listen to a clone of her voice for the story. She found its accuracy eerie.

“My jaw is on the floor,” says the original voice behind Chloney – that’s me, Chloe – as I listen to what my digital voice double can do. “Let’s hope she doesn’t put me out of a job anytime soon.”

The CEO of SpeechMorphing, Fathy Yassa, explained that the process requires a great deal of machine learning: the algorithm listens to a human speak for about 10 to 15 minutes, then attempts to reproduce the voice millions of times until it gets it right.

Industry Growth

The global speech and voice recognition industry is worth tens of billions of dollars and is growing fast. The technology has given actor Val Kilmer, who lost his voice to throat cancer a few years ago, the chance to reclaim something approaching his former vocal powers.

It’s enabled film directors, audiobook creators, and game designers to develop characters without the need to have live voice talent on hand. For the film Roadrunner, an AI was trained on Anthony Bourdain’s extensive archive of media appearances to create a digital vocal double of the late chef and TV personality. This angered people because the cloned voice spoke lines Bourdain never actually said aloud while alive. Even former President Barack Obama’s voice was used in a deepfake video. (Note: the video below includes profanity.)

It is easy to see the negative potential of this technology. Having an algorithm make actors say something funny or controversial is one thing. Having algorithms reproduce the voices of politicians saying something dangerous is quite another.

“We’re entering an era in which our enemies can make it look like anyone is saying anything at any point in time,” says the Obama deepfake in the video, produced in collaboration with BuzzFeed in 2018. “Even if they would never say those things.”

The article includes several examples of the author’s voice in different settings and languages. If you have ever listened to a computer algorithm try to read a novel to you while you are falling asleep, you know we still have quite a way to go. Synthetic voices have not yet reached the point where you really can’t tell the original human voice from the AI-generated one. They noticeably lack emotion, and they lack the sound of breath intakes. But designers are working on that too.

Voice cloning is getting closer every year.

read more at npr.org
