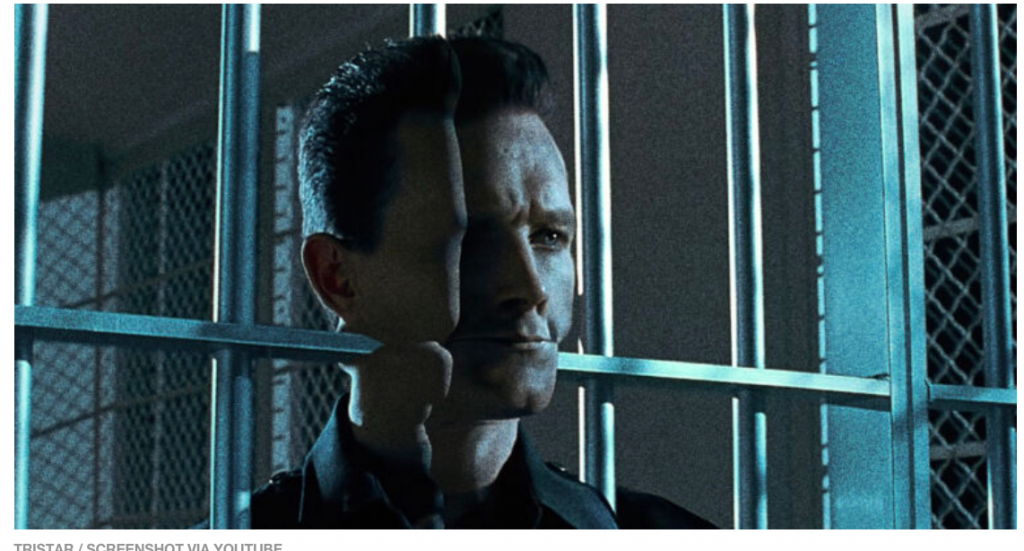

The Terminator movie series featured autonomous killer robots with human forms that could move through metal. (Source: YouTube)

Government Panel Approves of ‘Options’ for Building Autonomous Robot Killers

Dan Robitski wrote a great piece on futurism.com about Congress and its moral dilemma over whether to build a robot army.

Tasked with deciding whether the United States military should be able to develop autonomous killer robots capable of using deadly force, a congressional advisory panel said the government should keep its options open.

Using logic similar to that of a parent trying to convince a toddler to try vegetables, members of the National Security Commission on Artificial Intelligence concluded that Congress should at least consider giving killer robots or artificial intelligence systems a chance, Reuters reports. Otherwise, by the panel's logic, the military might never learn whether such systems perform well enough to kill fewer innocent people. The panel reached this conclusion despite growing international pressure to ban killer robots outright. The American Association for the Advancement of Science, for its part, resoundingly urged against developing autonomous killing machines.

Another Arms Race

In fact, the U.S. military has already started developing autonomous tanks, which it strategically terms “lethality automated systems.” The militaries of China, Russia, and the U.K. have also developed robots, drones, or algorithms capable of taking human life. That suggests this so-called moral imperative to explore killer tech is really more of an imperative not to fall behind in an arms race.

“[The commission’s] focus on the need to compete with similar investments made by China and Russia… only serves to encourage arms races,” Mary Wareham, coordinator of the Campaign to Stop Killer Robots, told Reuters.

Yes, there is plenty in the article to raise the hairs on the back of your neck, without even mentioning the hordes of killer drones already on the market. It’s going to take more than AI to control this problem. It’s going to take some foresight, moral character and a truckload of wisdom to get this right.

Read more at futurism.com.
