DALL-E, CLIP Create Images from Text Requests Using the Power of GPT-3

Get ready for the next step in creativity driven AI. OpenAI has created a program that can visually create what users request. This incredible news comes from a story on venturebeat.com.

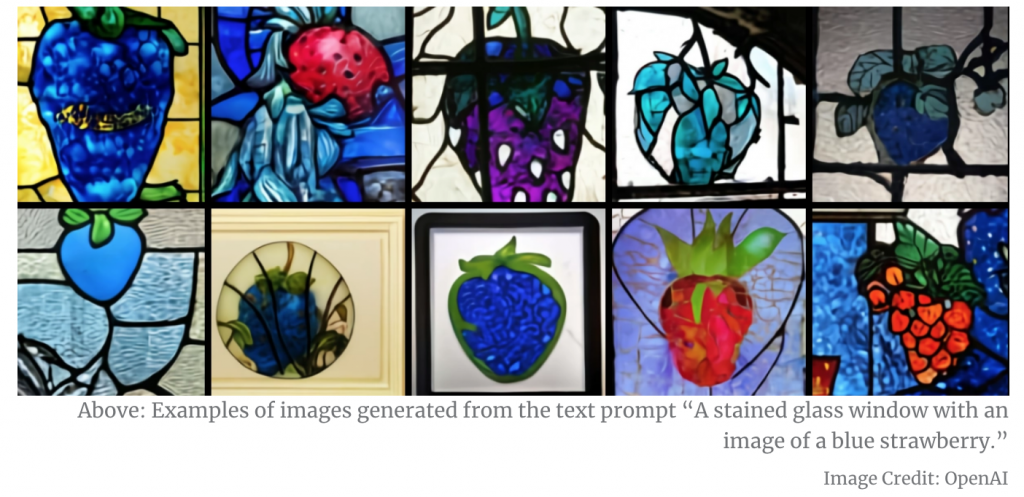

OpenAI released two multimodal AI systems that combine computer vision and NLP: DALL-E, a system that generates images from text, and CLIP, a network trained on 400 million pairs of images and text.

The photo below was generated by DALL-E from the text prompt “an illustration of a baby daikon radish in a tutu walking a dog.” DALL-E uses a 12-billion parameter version of GPT-3, and like GPT-3 is a Transformer language model. The name is meant to evoke the artist Salvador Dali and the robot WALL-E.

Images generated by DALL-E depict a baby Daikon radish in a tutu walking a dog. (Source: OpenAI)

And that’s what we mean by incredible. Text-to-visual creativity has long been the desire of programmers and now it is a reality.

Tests OpenAI appears to demonstrate that DALL-E has the ability to manipulate and rearrange objects in generated imagery and also create things that don’t exist, like a cube with the texture of a porcupine or a cube of clouds. Based on text prompts, images generated by DALL-E can appear as if they were taken from the real world or can depict works of art. Visit the OpenAI website to try a controlled demo of DALL-E.

Khari Johnson, the author of the article, writes that CLIP, a multimodal model trained on 400 million pairs of images and text collected from the internet, uses zero-shot learning capabilities akin to GPT-2 and GPT-3 language models.

“We find that CLIP, similar to the GPT family, learns to perform a wide set of tasks during pretraining, including object character recognition (OCR), geo-localization, action recognition, and many others. We measure this by benchmarking the zero-shot transfer performance of CLIP on over 30 existing datasets and find it can be competitive with prior task-specific supervised models,” 12 OpenAI coauthors write in a paper about the model.

Although testing found CLIP was proficient at a number of tasks, it fell short in specialization tasks, like satellite imagery classification or lymph node tumor detection.

“This preliminary analysis is intended to illustrate some of the challenges that general purpose computer vision models pose and to give a glimpse into their biases and impacts. We hope that this work motivates future research on the characterization of the capabilities, shortcomings, and biases of such models, and we are excited to engage with the research community on such questions,” the paper reads.

OpenAI chief scientist Ilya Sutskever was a co-author of the paper detailing CLIP and may have alluded to the coming release of CLIP when he recently told deeplearning.ai that multimodal models would be a major machine learning trend in 2021. Google AI chief Jeff Dean made a similar prediction.

read more at venturebeat.com

Leave A Comment