A still from a SoundSpaces demonstration video showing how AI agents learn to navigate 3D spaces.

New Technologies Bring Human Sensory Abilities to Robotic Assistants

Researchers are reporting exciting progress in giving AI virtual assistants the sensory abilities to hear, see and interact physically with their surroundings. Daphne Leprince-Ringuet covers the work on ZDNet.com, explaining in layman’s terms how AI can be trained in a digital-only setting. On-screen, the algorithms can run, jump and fetch in convincing ways. But taking those algorithms and making them operate a mechanical body is a huge leap, like the difference between hitting a baseball off a batting tee and hitting a curveball thrown by a big-league pitcher.

For now, the goal is more modest: an AI that helps users find their phones by listening for where they are ringing.

“Where’s my phone?” is, for many of us, a daily interjection, often followed by desperate calling and frantic sofa searches. Now some new breakthroughs made by Facebook AI researchers suggest that home robots might be able to do the hard work for us, reacting to simple commands such as “bring me my ringing phone.”

Today’s virtual assistants are utterly incapable of identifying a specific sound and then using it as a target to navigate toward across a space.

While you could order a robot to “find my phone 25 feet southwest of you and bring it over,” there is little an assistant can do if it’s not told exactly where it should go.

Embodied AI

To address this gap, Facebook’s researchers built a new open-source tool called SoundSpaces, designed for so-called “embodied AI,” a field of artificial intelligence concerned with fitting physical bodies, such as robots, with software that is then trained to act in real-life environments.

In this case, SoundSpaces lets developers train virtual embodied AI systems in 3D environments that represent indoor spaces, such as a two-story house or an office floor, with highly realistic acoustics that can simulate any sound source.

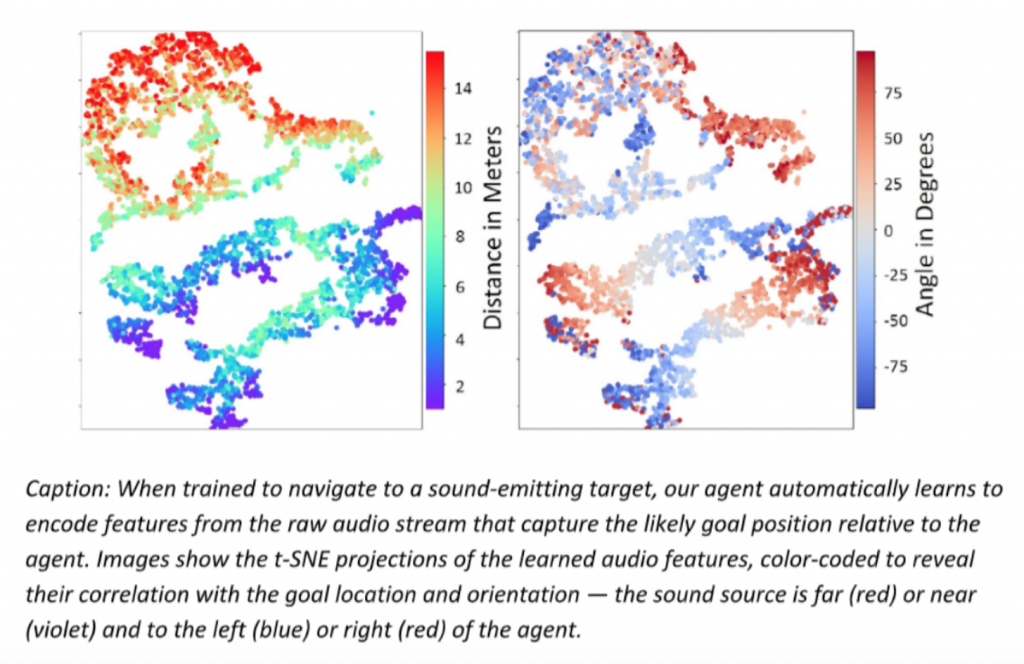

Incorporating audio sensing into training enables AI systems not only to identify different sounds but also to estimate where a sound is coming from and then treat it as a target to navigate toward. In other words, they can hear you, see you and probably play hide-and-seek with you.
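How can a machine tell where a sound comes from? In SoundSpaces the agents learn this through training on simulated audio, and the snippet below does not reproduce that approach. It is a minimal sketch of the classic time-difference-of-arrival idea, assuming a hypothetical robot with just two microphones a known distance apart: a sound reaches the nearer microphone slightly earlier, and that delay reveals its direction. The function names and the 343 m/s speed of sound are illustrative assumptions.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in room-temperature air (assumed)


def best_lag(left, right, max_lag):
    """Return the lag (in samples) at which `right` best matches `left`.

    A positive lag means the same sound appears later in `right`,
    i.e. it reached the left microphone first.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Cross-correlation: how well the two recordings line up at this lag.
        score = 0.0
        for i, x in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += x * right[j]
        if score > best_score:
            best, best_score = lag, score
    return best


def bearing_degrees(left, right, sample_rate, mic_spacing):
    """Estimate the sound's direction in degrees from straight ahead.

    Positive angles point toward the left microphone, negative toward the right.
    """
    # The largest physically possible delay is the spacing divided by the speed of sound.
    max_lag = int(math.ceil(mic_spacing / SPEED_OF_SOUND * sample_rate))
    lag = best_lag(left, right, max_lag)
    delay = lag / sample_rate  # seconds
    # Extra distance travelled to the far microphone, as a fraction of the spacing.
    ratio = max(-1.0, min(1.0, delay * SPEED_OF_SOUND / mic_spacing))
    return math.degrees(math.asin(ratio))
```

For example, a click that arrives 3 samples earlier on the left microphone (16 kHz sampling, microphones 0.2 m apart) yields a bearing of roughly 19 degrees to the left. A learned agent has to cope with echoes, furniture and walls, which is where simple geometry like this breaks down.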

In parallel, the researchers released a second tool, SemanticMapNet, which teaches virtual assistants to explore, observe and remember an unknown space, building a top-down map of their 3D environment that the systems can use to carry out future tasks.

“We had to teach AI to create a top-down map of a space using a first-person point of view, while also building episodic memories and spatio-semantic representations of 3D spaces so it can actually remember where things are,” Kristen Grauman, a research scientist at Facebook AI Research, told ZDNet. “Unlike any previous approach, we had to create novel forms of memory.”
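SemanticMapNet itself is a learned neural network that, broadly, projects what each first-person frame shows onto a top-down memory grid. The toy class below only illustrates the bookkeeping behind that idea: converting “I see a phone two metres ahead” into a fixed spot on a map that can be queried later. It assumes hypothetical inputs (the agent’s position, its compass heading with 0° as north and increasing clockwise, and how far ahead and to the right each object was seen), and the class and method names are made up for illustration.

```python
import math
from collections import defaultdict


class TopDownMap:
    """Toy top-down semantic map: grid cells that remember which labels were seen there."""

    def __init__(self, cell_size=0.5):
        self.cell_size = cell_size      # metres per grid cell (assumed)
        self.cells = defaultdict(set)   # (col, row) -> set of labels

    def add_observation(self, agent_x, agent_y, heading_deg, forward, right, label):
        """Convert a first-person sighting into world coordinates and store it.

        `forward` and `right` are metres relative to the agent's own viewpoint.
        """
        h = math.radians(heading_deg)
        # Heading 0 faces +y (north); the agent's right-hand side is then +x (east).
        world_x = agent_x + forward * math.sin(h) + right * math.cos(h)
        world_y = agent_y + forward * math.cos(h) - right * math.sin(h)
        cell = (math.floor(world_x / self.cell_size), math.floor(world_y / self.cell_size))
        self.cells[cell].add(label)

    def where_is(self, label):
        """Return the centres (in metres) of every cell where `label` was seen."""
        return sorted(
            ((col + 0.5) * self.cell_size, (row + 0.5) * self.cell_size)
            for (col, row), labels in self.cells.items()
            if label in labels
        )


m = TopDownMap(cell_size=0.5)
m.add_observation(0.0, 0.0, 90.0, forward=2.2, right=0.1, label="phone")  # facing east
m.add_observation(2.0, 3.0, 180.0, forward=1.2, right=0.3, label="sofa")  # facing south
print(m.where_is("phone"))  # [(2.25, -0.25)]
```

The point of the map is memory: the agent can later answer “where was the phone?” from the grid without having to search the room again.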

Facebook’s AI lab, FAIR, had previously released AI Habitat, a simulation platform for training AI agents by letting them explore virtual environments such as a furnished apartment or a cubicle-filled office, according to a story on MIT’s technologyreview.com. An AI trained this way could then be ported into a robot that would, in principle, be able to navigate the real world.

“The algorithms build on FAIR’s work in January of this year, when an agent was trained in Habitat to navigate unfamiliar environments without a map. Using just a depth-sensing camera, GPS, and compass data, it learned to enter a space much as a human would, and find the shortest possible path to its destination without wrong turns, backtracking, or exploration.”
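To make “the shortest possible path” concrete, the sketch below computes the ideal route length on a toy floor plan with breadth-first search. It is a hypothetical illustration, not the Habitat agent’s method: it assumes a fully known map, which is exactly what that agent did not have. Researchers typically use such a map-based shortest path only as a yardstick for judging how efficient a learned agent’s route was. The grid encoding (`#` for walls, `.` for open floor) is an assumption.

```python
from collections import deque


def shortest_path_length(grid, start, goal):
    """Breadth-first search over a grid floor plan.

    grid: list of strings, '#' = wall, '.' = open floor (assumed encoding).
    start, goal: (row, col) tuples.
    Returns the number of steps on the shortest route, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        # Explore the four neighbouring cells (no diagonal moves).
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None


plan = [
    "......",
    "#####.",
    "......",
]
print(shortest_path_length(plan, (0, 0), (2, 0)))  # 12
```

Because breadth-first search expands cells in order of distance, the first time it reaches the goal is guaranteed to be along a shortest route. Here the wall forces a 12-step detour even though the goal is only two rows away.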

However, bringing those abilities into the real world, a step known as “sim2real” transfer, is still a long way off, according to FAIR’s researchers. We won’t see the Jetsons’ Rosie the Robot any time soon.
