Social Media Cos Struggle to Make AI Unbiased, Stop Bullying

Both Facebook and Instagram have recently run into thorny problems with their AI systems. In Facebook's case, an AI system was caught discriminating against users by race. For Instagram, the problem has been figuring out the best way to keep users from bullying one another.

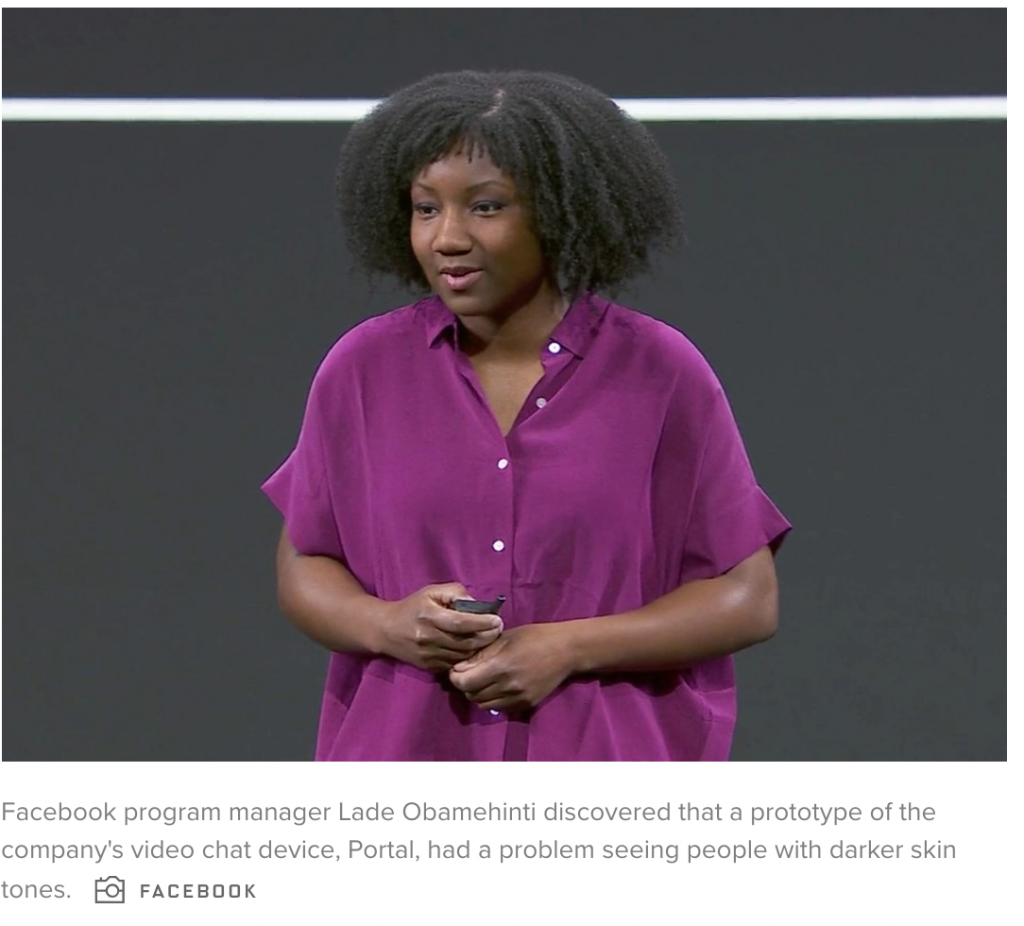

When Facebook program manager Lade Obamehinti tested a prototype of the AI algorithm for the company's Portal video chat device, which uses computer vision to identify and zoom in on whoever is speaking, she discovered that it would not respond to her. The device ignored Obamehinti, who is Black, when she spoke, and focused instead on a white man who was working with her.

“AI creates unpredictable consequences of its own,” said Joaquin Candela, Facebook’s director of AI.

Obamehinti described the problem at last week's Facebook developers' conference, a day after CEO Mark Zuckerberg said Facebook's many products would become more private. In a Wired.com story, Facebook's chief technology officer Mike Schroepfer also discussed the challenge of using AI to police and improve the company's products without creating new biases and problems.

A Time magazine article describes how a Black woman programmer has encountered racial bias in facial recognition software since 2015.

As new AI systems roll out, particularly at Facebook's scale, companies need reliable ways to fix the unintended consequences of these advances, especially bias that programmers build into the algorithms.

Many AI researchers have recently raised the alarm about biased AI systems as those systems take on more critical and personal roles. Racial bias is a major issue at Facebook and other companies, but so is "fake news," which is also keeping them up late and working hard to eliminate it.

Candela spoke last week about how Facebook's use of AI to fight misinformation has also required engineers to make sure the technology doesn't create inequities. He acknowledged that AI advances alone won't fix Facebook's problems; the company's engineers and leaders also have to do the right thing.

"While tools are definitely necessary, they're not sufficient, because fairness is a process," he said.

Instagram, which is used by 70% of American teenagers according to the Pew Research Center, is tackling a problem driven by its own users: according to a New York Times story, it is using AI to detect bullying in photos. This year, the company is testing new features to help prevent bullying, including the ability to hide "like" counts on posts. According to the British anti-bullying organization Ditch the Label, 42% of cyberbullying victims aged 12 to 20 are bullied on Instagram.

According to the Times story, last year Instagram gathered teenagers into focus groups to understand the forms bullying takes, from threats to insults to attractiveness ratings. The service hopes to train AI to catch bullying in all of these forms and stop it.
