DeepMind Leverages Neural Net in Training AlphaStar to Win

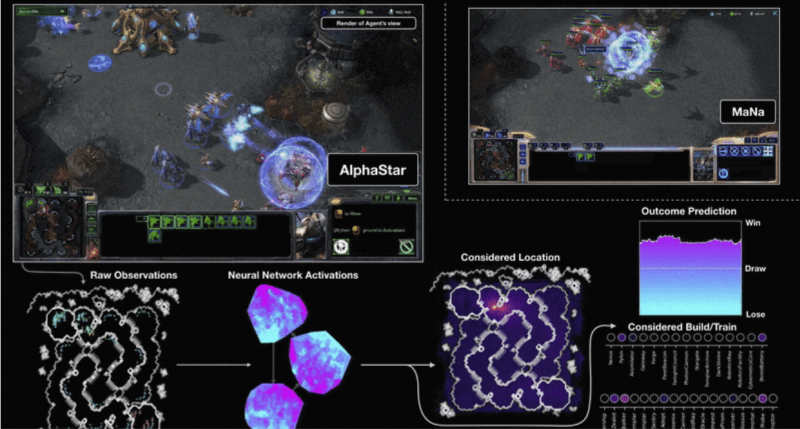

The success of Google’s DeepMind AI engine AlphaStar, created to play the video game StarCraft II, marks yet another major advance in how artificial intelligence is learning to play complex games. This latest example of AI beating human experts follows AlphaGo’s 2016 victory over Go champion Lee Sedol. In results revealed in January, AlphaStar decisively routed top professional StarCraft II players, a performance that impressed even its designers.

DeepMind plans to release a paper soon describing the AlphaStar architecture, its design considerations and its implications, along with what the developers think this latest advance means.

The DeepMind blog explains that defeating a top professional player shows AlphaStar can master StarCraft, one of the most challenging real-time strategy (RTS) games. A story on Vox.com called the results of the games “stunning” and noted, “DeepMind is leading its field at applying those techniques to surpass humans in surprising new ways.”

In a series of test matches held on 19 December 2018, AlphaStar beat Team Liquid’s Grzegorz “MaNa” Komincz, one of the world’s best professional StarCraft players, 5-0, following a successful benchmark match against his teammate Dario “TLO” Wünsch. The matches took place under professional match conditions on a competitive ladder map, without any game restrictions.

The win shows that an AI system can master StarCraft II when “trained directly from raw game data by supervised learning and reinforcement learning.” Earlier AI systems had succeeded in video games such as Atari titles, Mario and Quake III, but StarCraft had resisted them: the best previous StarCraft results depended on hand-crafting major elements of the system and imposing significant restrictions on the game rules.

DeepMind’s blog spelled out why winning such a complex game represents an impressive advance:

- Game theory: StarCraft is a game where, just like rock-paper-scissors, there is no single best strategy. As such, an AI training process needs to continually explore and expand the frontiers of strategic knowledge.

- Imperfect information: Unlike games like chess or Go where players see everything, crucial information is hidden from a StarCraft player and must be actively discovered by “scouting.”

- Long-term planning: Like many real-world problems, cause and effect is not instantaneous. Games can also take up to an hour to complete, meaning actions taken early in the game may not pay off for a long time.

- Real-time: Unlike traditional board games where players alternate turns between subsequent moves, StarCraft players must perform actions continually as the game clock progresses.

- Large action space: Hundreds of different units and buildings must be controlled at once, in real time, resulting in a combinatorial space of possibilities. On top of this, actions are hierarchical and can be modified and augmented. DeepMind’s parameterization of the game has an average of approximately 10^26 legal actions at every time step.
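The rock-paper-scissors comparison in the game-theory point can be made concrete in a few lines of Python. This is an illustrative sketch only, not DeepMind’s training method, and the function names (`payoff`, `best_response`, `best_response_cycle`, `expected_payoff`) are hypothetical. It shows that every pure move has a counter, so repeatedly switching to the best response against the last move simply cycles forever, while a uniform mix of all three moves breaks even against anything.

```python
# Illustrative sketch: why rock-paper-scissors has no single best strategy.
# Hypothetical helper names; not AlphaStar's actual training code.

MOVES = ("rock", "paper", "scissors")
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}  # key beats value


def payoff(a, b):
    """Payoff for playing a against b: +1 win, 0 draw, -1 loss."""
    if a == b:
        return 0
    return 1 if BEATS[a] == b else -1


def best_response(opponent):
    """Pure move that maximizes payoff against a fixed pure opponent move."""
    return max(MOVES, key=lambda m: payoff(m, opponent))


def best_response_cycle(start, steps):
    """Repeatedly replace the current move with its best response."""
    history = [start]
    for _ in range(steps):
        history.append(best_response(history[-1]))
    return history


def expected_payoff(mix, opponent):
    """Expected payoff of a mixed strategy {move: probability} vs a pure move."""
    return sum(p * payoff(m, opponent) for m, p in mix.items())


if __name__ == "__main__":
    # Chasing the counter-move never settles on one strategy.
    print(best_response_cycle("rock", 3))  # ['rock', 'paper', 'scissors', 'rock']
    # The uniform mix cannot be exploited by any single pure move.
    uniform = {m: 1 / 3 for m in MOVES}
    for move in MOVES:
        print(move, expected_payoff(uniform, move))
```

The cycling best responses are the toy version of the blog’s point that a training process must keep exploring rather than converge on one fixed plan.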

The strategic abilities required to win the game show that DeepMind has outdone itself, according to a story on TowardsDataScience.com. The article concludes:

“AlphaStar represents a major breakthrough in AI and one that opens the door to all sorts of new challenges. The principles of AlphaStar can be applied to many problems that require long term strategic planning with imperfect information. From economic policy planning to weather predictions, we are likely to see AI agents inspired by AlphaStar tackle these challenges in the near future.”

https://deepmind.com/blog/alphastar-mastering-real-time-strategy-game-starcraft-ii/
