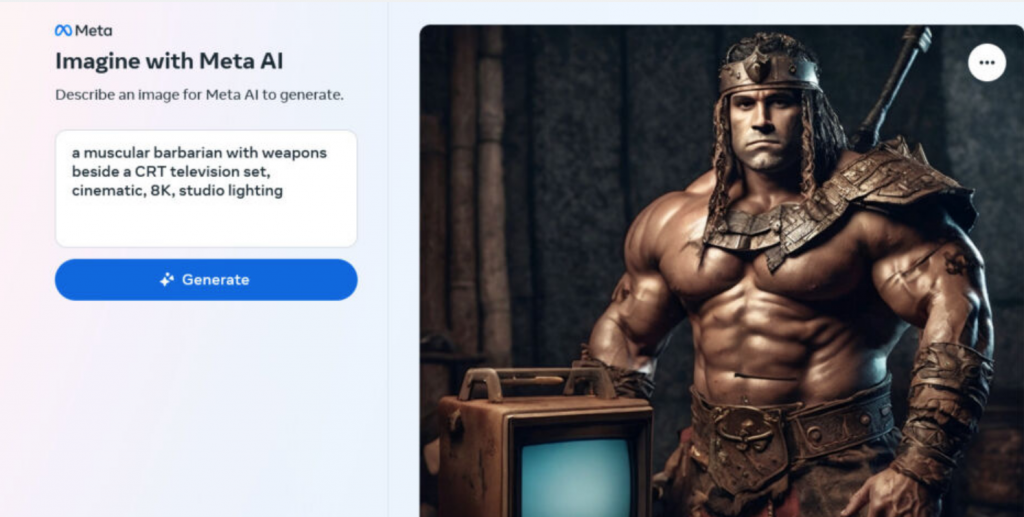

Meta’s new AI image generator was trained on 1.1 billion Instagram and Facebook photos. It is possible that your photos were vacuumed up and could be returned to you, given the right prompt, by the new Imagine with Meta AI. The Ars Technica writer used this image to test the generator against others. (Source: arstechnica.com)

AI Critic Calls Imagine with Meta AI ‘Mostly Average’ in Terms of Its Images

Well, at least they told us how they did it. Meta and Mark Zuckerberg have released a new digital AI toy.

According to a story on arstechnica.com, last week Meta released a free standalone AI image-generator website, “Imagine with Meta AI,” based on its Emu image-synthesis model. Meta used 1.1 billion publicly visible Facebook and Instagram images to train the AI model, which can render a unique image from a written prompt. Previously, Meta’s version of this technology—using the same data—was only available in messaging and social networking apps such as Instagram.

The images posted by users on Facebook or Instagram helped train Emu.

“In a way, the old saying, ‘If you’re not paying for it, you are the product’ has taken on a whole new meaning.”

Although Instagram users were uploading over 95 million photos a day as of 2016, the dataset Meta used to train its AI model was only a small subset of its overall photo library.

Imagine AI Gets Panned

Writer Benj Edwards of arstechnica.com put Imagine with Meta AI through some of the same requests and prompts he posed to other AI generators. His review wasn’t a rave:

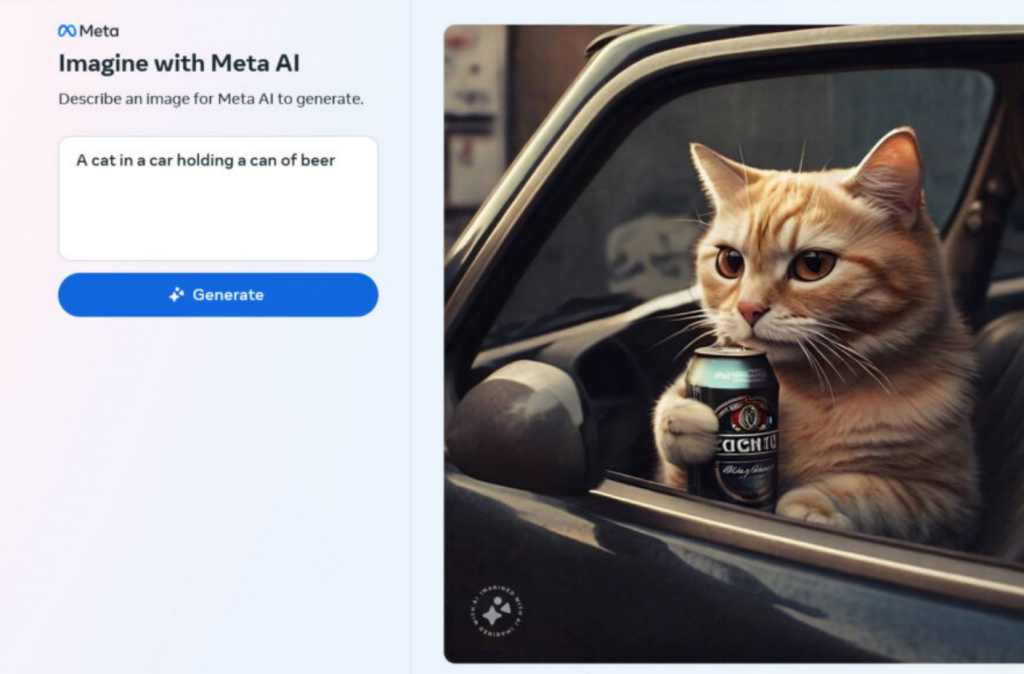

“We put Meta’s new AI image generator through a battery of low-stakes informal tests using our ‘Barbarian with a CRT’ and ‘Cat with a Beer’ image-synthesis protocols. We found aesthetically novel results, as you can see above. (As an aside, when generating images of people with Emu, we noticed many looked like typical Instagram fashion posts.)

“We also tried our hand at adversarial testing. The generator appears to filter out most violence, curse words, sexual topics, and the names of celebrities and historical figures (no Abraham Lincoln, sadly), but it allows commercial characters like Elmo (yes, even ‘with a knife’) and Mickey Mouse (though not with a machine gun).

“Meta’s model generally creates photorealistic images well, but not as well as Midjourney. It can handle complex prompts better than Stable Diffusion XL, but perhaps not as well as DALL-E 3. It doesn’t seem to do text rendering well at all, and it handles different media outputs like watercolors, embroidery, and pen-and-ink with mixed results. Its images of people seem to include diversity in ethnic backgrounds.

“Overall, it seems about average these days in terms of AI image synthesis.”

Keep in mind that this review may not reflect the results other users get. Edwards’s story also includes links that answer many of the questions readers are likely to have.

Limits Set

Some words simply won’t work with Meta’s new generator: prompts involving violence, sex, and certain other topics won’t produce images. Meta is also aware that some people will push the envelope with these algorithms, and it is promoting AI watermarking as a way to protect its product. Even so, by Meta’s own admission, the product’s output can be questionable.

Edwards adds:

“Meta seems to be handling issues of potential harmful outputs with filters, a proposed watermarking system that isn’t operational yet (‘In the coming weeks, we’ll add invisible watermarking to the imagine with Meta AI experience for increased transparency and traceability,’ the company says), and a small disclaimer at the bottom of the website:

“‘Images are AI generated and may be inaccurate or inappropriate.’

“The images might not be accurate (do cats drink beer?), and they might not even be ethical in the eyes of the unnamed authors of the 1.1 billion images used to train the model. But dare we say it: Generating them can be fun. Of course, depending on your disposition and how you view the pace of AI image synthesis, that fun may be balanced out by an equal level of concern.”

The thought-provoking article offers insight into the latest AI image generator, which you can use for free.

Read more at arstechnica.com
