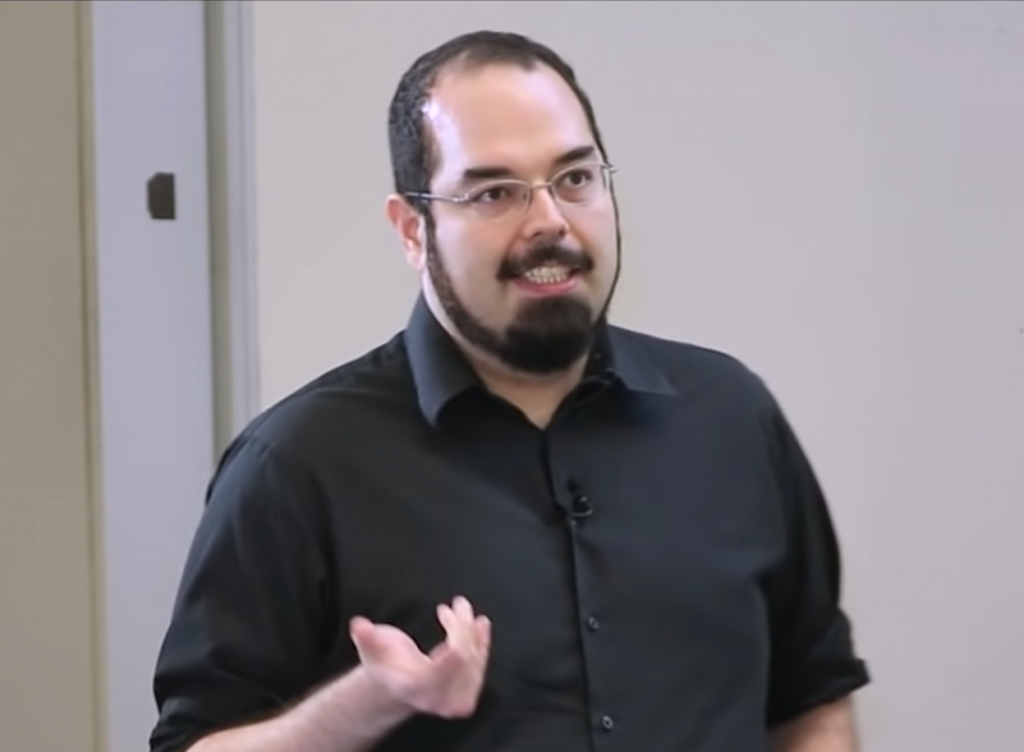

Eliezer Yudkowsky explains alignment in a YouTube video. The AI theorist and writer is best known for popularizing the idea of friendly AI. He is a co-founder and research fellow at the Machine Intelligence Research Institute, a private research nonprofit based in Berkeley, California.

Researchers Struggle over Pursuing AI Safety through Ethics, Alignment

Saving humanity from a runaway algorithm makes for good movies, book plots, and scary student lectures. But it turns out real people are fighting that fight today. According to Kelsey Piper's story on vox.com, there are two main schools of thought on how to protect us from Skynet-like horrors: ethics guidelines and "alignment."

But of course, these two camps can’t agree on the best way to make that happen.

Team Ethics

Teams of researchers in academia and at major AI labs are working on the problem of AI ethics, or the moral concerns raised by AI systems. These efforts tend to focus especially on data privacy and on what is known as AI bias: AI systems trained on data with built-in biases that then produce racist or sexist results, such as denying women credit card limits they would grant a man with identical qualifications.

Fighting gender bias is good, so that's a point for Team Ethics.

Team Alignment

There are also teams of researchers in academia and at some (though fewer) AI labs working on the problem of AI alignment, which means building protections into the design of the systems themselves. The worry is that as AI systems become more powerful, our oversight methods and training approaches will become less and less effective at getting them to do what we actually want. Ultimately, we would have handed humanity's future over to systems with goals and priorities we don't understand and can no longer influence.

And according to Team Alignment, letting AI systems oversee other AI systems might not be a good idea.

Communication may be what's missing between these two camps. From the perspective of people working on AI ethics, experts focused on alignment are ignoring real problems we already face today in favor of obsessing over future problems that may never materialize. Often, the alignment camp doesn't even know what problems the ethics researchers are working on.

“Some people who work on long-term/AGI-style policy tend to ignore, minimize, or just not consider the immediate problems of AI deployment/harms,” Jack Clark, co-founder of the AI safety research lab Anthropic and former policy director at OpenAI, wrote this weekend.

The AI ethics/AI alignment battle doesn't have to exist. After all, climate researchers studying the present-day effects of global warming don't tend to bitterly condemn those studying long-term effects, and researchers projecting worst-case scenarios don't tend to claim that anyone working on heat waves today is wasting their time.

Currently, there are very few restrictions or guardrails on much of the AI research under way in America, or anywhere else in the world for that matter. It's not hard to imagine someone pushing the wrong button just once and, in doing so, handing our world over to an AI overlord.

Read more at vox.com