Some AI firms are no longer releasing pertinent information about their latest algorithms. Instead, they are hiding data, such as how algorithms were trained and the amount of power required to operate a chatbot. (Source: Stanford University Human-Centered Artificial Intelligence)

Stanford Study Exposes AI Companies' Lack of Transparency About Training Sources

Will Knight of wired.com is one of the best AI writers around. This week he wrote a piece on how AI is becoming more powerful while the companies building chatbots and other services are becoming more secretive.

AI companies are keeping secret what makes their algorithms work differently from, or better than, those of their rivals, sacrificing transparency to keep a market edge.

“A study released by researchers at Stanford University this week shows just how deep—and potentially dangerous—the secrecy is around GPT-4 and other cutting-edge AI systems. Some AI researchers I’ve spoken to say that we are in the midst of a fundamental shift in how AI is pursued. They fear it’s one that makes the field less likely to produce scientific advances, provides less accountability, and reduces reliability and safety.

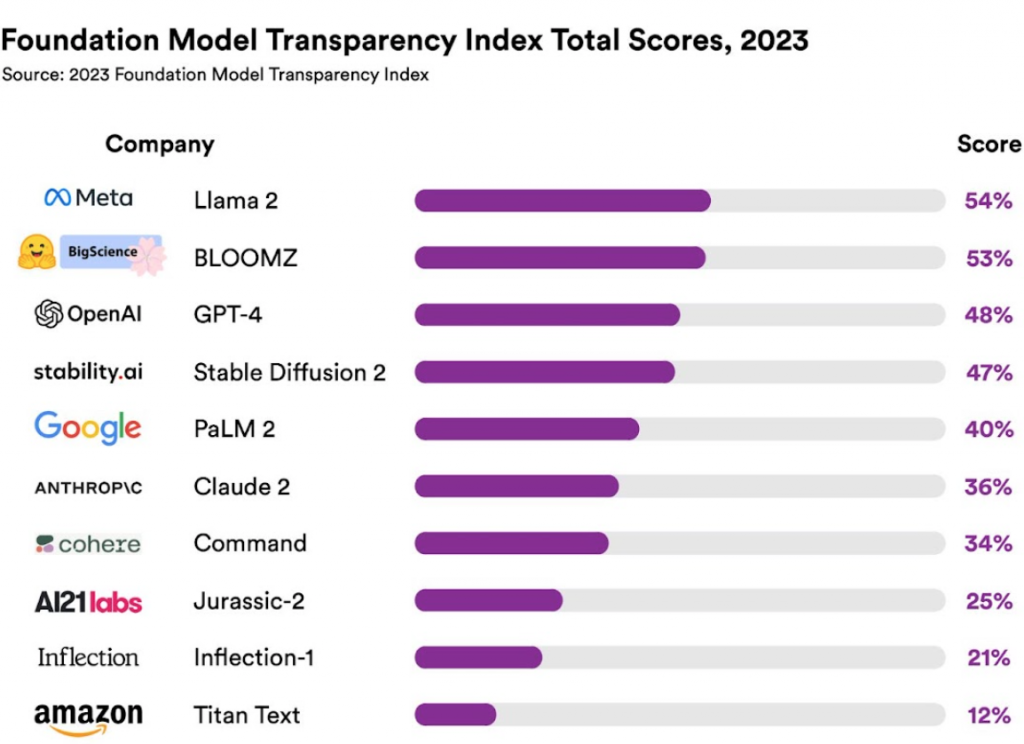

“The Stanford team looked at 10 different AI systems, mostly large language models like those behind ChatGPT and other chatbots. These include widely used commercial models like GPT-4 from OpenAI, the similar PaLM 2 from Google, and Titan Text from Amazon. The report also surveyed startup models, including Jurassic-2 from AI21 Labs, Claude 2 from Anthropic, Command from Cohere, and Inflection-1 from chatbot maker Inflection.”

The study also looked at freely downloadable AI models, including the image-generation model Stable Diffusion 2 and Llama 2.

Criteria Selected

The study came up with 13 criteria to gauge the transparency of each model. The study also looked for disclosures about the hardware used to train and run a model, the software frameworks employed, and a project’s energy consumption.

The researchers found that no model scored more than 54 percent on their transparency scale across all these criteria.

Rishi Bommasani, a PhD student at Stanford who worked on the study, says it reflects the fact that AI is becoming more opaque even as it becomes more influential. This contrasts greatly with the last big boom in AI when openness helped feed big technological advances, including speech and image recognition.

“In the late 2010s, companies were more transparent about their research and published a lot more,” Bommasani says. “This is the reason we had the success of deep learning.”

New Language Model

Sharing what you have learned doesn’t have to mean you lose in the marketplace. In the past, transparency only led to greater discoveries.

“This is a pivotal time in the history of AI,” says Jesse Dodge, a research scientist at the Allen Institute for AI, or AI2. “The most influential players building generative AI systems today are increasingly closed, failing to share key details of their data and their processes.”

Given how widely AI models are being deployed—and how dangerous some experts warn they might be—a little more openness could go a long way.

The Allen Institute is trying to remedy this tendency with a new AI language model called OLMo.

“It is being trained using a collection of data sourced from the web, academic publications, code, books, and encyclopedias. That data set, called Dolma, has been released under AI2’s ImpACT license. When OLMo is ready, AI2 plans to release the working AI system and also the code behind it too, allowing others to build upon the project.”

read more at wired.com
