Political ads appear to be one of the easiest ways to misuse generative AI. The government lacks oversight of social media platforms. Twitter says it has a “synthetic media” policy under which it may label or remove “synthetic, manipulated, or out-of-context media that may deceive or confuse people and lead to harm.” The website verifythis.com identified the fake images in the video. (Source: verifythis.com)

Generative AI Could Threaten Election Decisions by Manipulating the Truth

Our country has taken a giant leap of faith in allowing AI to become an integral part of life, but some aren’t sure whether it is a leap forward or a leap backward for society. Reports say chatbots like ChatGPT are being directed to spread misinformation, just in time for the 2024 U.S. elections, according to a story on cnn.com.

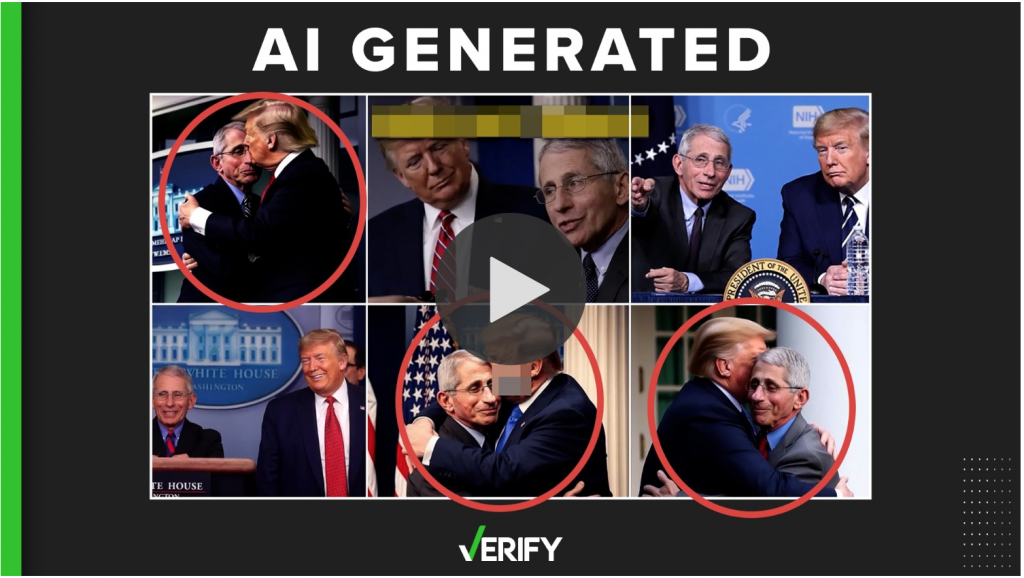

The concern is that people or organizations will use AI to mislead, or outright lie to, the average voter. It started with a political ad for Ron DeSantis, the Governor of Florida, which was posted to Twitter. His campaign used AI-generated images showing former President Donald Trump hugging Dr. Anthony Fauci. The images, used to criticize Trump for not firing the nation’s top infectious disease specialist, were tricky to spot: they were shown alongside real images of the pair and with a text overlay saying, “real life Trump.”

“As the images began spreading, fact-checking organizations and sharp-eyed users quickly flagged them as fake. But Twitter, which has slashed much of its staff in recent months under new ownership, did not remove the video. Instead, it eventually added a community note — a contributor-led feature to highlight misinformation on the social media platform — to the post, alerting the site’s users that in the video, ‘3 still shots showing Trump embracing Fauci are AI-generated images.’ ”

Lack of Media Guardrails

Meta and TikTok have joined a group of tech industry partners, coordinated by the non-profit Partnership on AI, that is developing a framework for responsible use of synthetic media. Twitter, meanwhile, has rolled back much of its content moderation in the months since billionaire Elon Musk took over the platform, relying instead on its “Community Notes” feature to let users critique the accuracy of other people’s posts and add context.

Several prominent social networks have pulled back on their enforcement of some election-related misinformation and undergone significant layoffs over the past six months, which in some cases hit election integrity, safety, and responsible AI teams.

Current and former U.S. officials have also raised alarms that a federal judge’s decision earlier this month to limit how some U.S. agencies communicate with social media companies could have a “chilling effect” on how the federal government and states address election-related disinformation. (An appeals court temporarily blocked the order.)

Meanwhile, AI is evolving at a rapid pace. Despite calls from industry players and others, U.S. lawmakers and regulators have yet to implement real guardrails for AI technologies.

“I’m not confident in even their ability to deal with the old types of threats,” said David Evan Harris, an AI researcher and ethics adviser to the Psychology of Technology Institute, who previously worked on responsible AI at Facebook-parent Meta. “And now there are new threats.”

Separate Realities

You might recall when Kellyanne Conway of the Trump Administration coined the phrase “alternative facts.” The idea was destructive and, unfortunately, very effective at blurring the lines for the media covering the news that day.

AI experts say the platforms’ detection systems for computer-generated content may not be able to match the technology’s advancements. Some of the companies with new generative AI tools lack the ability to accurately detect AI-generated content.

Some experts are urging social platforms to put policies in place alerting viewers to AI-generated or manipulated content. They’re also asking regulators and legislators to “establish guardrails around AI and hold tech companies accountable for the spread of false claims.”

“We know that we’re going into a very scary situation where it’s going to be very unclear what has happened and what has not actually happened,” said Margaret Mitchell, chief ethics scientist at AI company Hugging Face. “It completely destroys the foundation of reality when it’s a question of whether or not the content you’re seeing is real.”

Not everything is as it seems in the political theater we call social media.

Read more at cnn.com.
