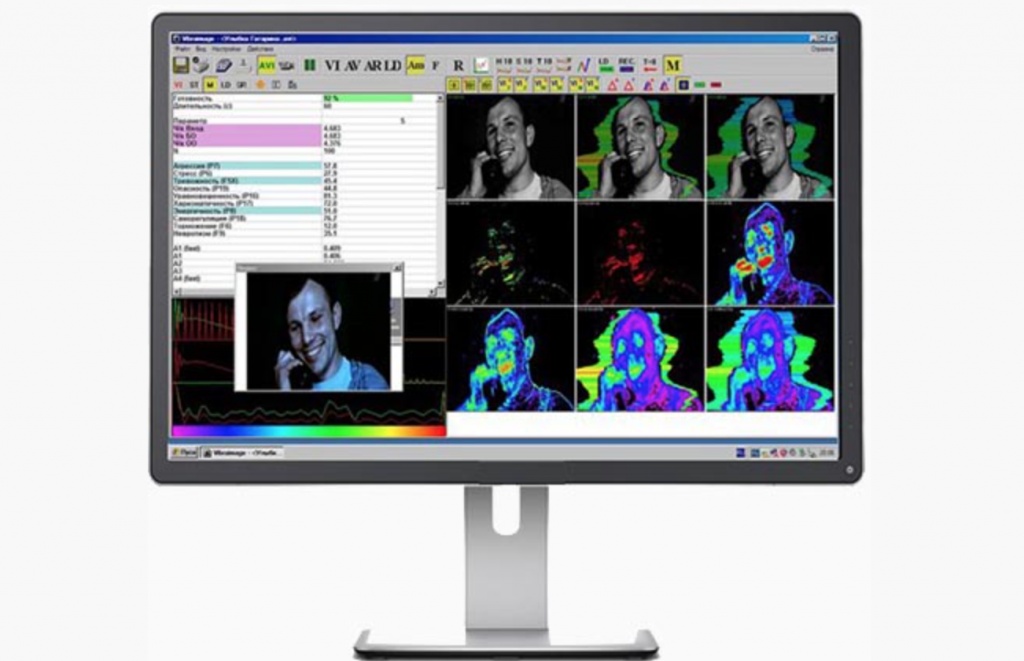

VibraImage relies on color imaging, thermal imaging or x-ray imaging. It measures micromovement (micromotion, locomotion, vibration) of a person by standard digital, web or television cameras and image processing. (Source: Elsys Corp.)

AI Program Said to Be Able to Pick Up Bad Vibrations from People’s Head Movements

Seeflection.com has been sharing stories about AI being used to review court sentences or recommend medical advice. AI is being given more and more responsibility in critical areas, and it has grown into nearly every facet of our modern world. That includes areas sci-fi writers have warned us about for years, such as using AI to assess your worth to society or to scan you for disease.

Now a system is using head vibrations to deduce things about you. Can you say phrenology 2.0? Shades of the movie Minority Report.

Jim Wright wrote a startling article on thenextweb.com about this latest use of AI. In the piece, Wright gives several reasons why the technology, called VibraImage, is flawed and why its widespread use is problematic.

Writing in Science, Technology and Society, Wright says there is very little reliable, empirical evidence that VibraImage and systems like it are actually effective at what they claim to do.

It’s Not New Tech

VibraImage has been developed by Russian biometrist Viktor Minkin through his company ELSYS Corp since 2001. Other emotion detection systems try to calculate people’s emotional states by analyzing their facial expressions. By contrast, VibraImage analyzes video footage of the involuntary micro-movements, or “vibrations,” of a person’s head, which are caused by muscles and the circulatory system. The analysis of facial expressions to identify emotions has come under growing criticism in recent years. Could VibraImage provide a more accurate approach?

Digital video surveillance systems can’t just identify who someone is. They can also work out how someone is feeling and what kind of personality they have. They can even tell how they might behave in the future. And the key to unlocking this information about a person is the movement of their head.

That is the claim made by the company behind the VibraImage artificial intelligence (AI) system. (The term “AI” is used in a broad sense to refer to digital systems that use algorithms and tools such as automated biometrics and computer vision.) You may never have heard of it, but digital tools based on VibraImage are being used across a broad range of applications in Russia, China, Japan, and South Korea.

“Among other things, these applications include identifying ‘suspect’ individuals among crowds of people. They are also used to grade the mental and emotional states of employees. The technology has already been deployed at two Olympic Games, a FIFA World Cup and a G7 Summit.”

In Japan, clients of such systems include one of the world’s leading facial recognition providers (NEC), one of the largest security services companies (ALSOK), as well as Fujitsu and Toshiba. In South Korea, among other uses, it is being developed as a contactless lie detection system for use in police interrogations. In China, it has already been officially certified for police use to identify suspicious individuals at airports, border crossings, and elsewhere.

This is not the AI world most of us want to be a part of. And since we already know that algorithms can be, and have been, written with bias built in, it is only a matter of time before the same occurs with programs like VibraImage. Other companies worldwide are using the same tech for the same questionable reasons.

Is It Too Much?

Most of us have been aware of these systems for a while, and some cities have even taken steps to limit their use in policing. But not many cities have done so, and that should be a concern.

Wright says few scientific articles on VibraImage have been published in academic journals with rigorous peer review processes – and many are written by those with an interest in the success of the technology. This research often relies on experiments that already assume VibraImage is effective.

How exactly certain head movements are linked to specific emotional-mental states is not explained. One study from Kagawa University in Japan found almost no correlation between the results of a VibraImage assessment and those of existing psychological tests.

The author calls the tech ‘suspect AI.’ A spokesman for VibraImage stated:

“VibraImage is not an AI technology, but ‘is based on understandable physics and cybernetics and physiology principles and transparent equations for emotions calculations.’ It may use AI processing in behavior detection or emotion recognition when they have ‘technical necessity for it.’”

read more at thenextweb.com
