Robotic arms can be moved through brain-to-computer interfaces by paralyzed users.

Brain Signals Control Robotic Arm with Implants to Computer Interface

What a marvelous little tool we have in our heads. The human brain is a sender and receiver of signals that move our bodies and react to outside influences almost instantaneously. Unless an accident has turned your life upside down and left you without the use of your limbs, it’s hard to imagine how difficult it would be to train your brain to move a mechanical device. Nathan Copeland knows. He was paralyzed in a car accident in 2004.

In an article on wired.com, we found that an entirely new universe is opening up for Copeland. Max G. Levy writes about a new connection being made between Copeland’s brain and a robotic arm.

A team at the University of Pittsburgh needed a volunteer to test whether a person could learn to control a robotic arm simply by thinking about it. This kind of research into brain-computer interfaces has been used to explore everything from restoring motion to people with paralysis to developing a new generation of prosthetic limbs to turning thoughts into text. Companies like Kernel and Elon Musk’s Neuralink are popularizing the idea that small electrodes implanted in the brain can read electrical activity and write data onto a computer. (No, you won’t be downloading and replaying memories anytime soon.)

Copeland was excited. “The inclusion criteria for studies like this is very small,” he recalls. You have to have the right injury, the right condition, and even live near the right medical hub. “I thought from the beginning: I can do it, I’m able to—so how can I not help push the science forward?”

The article takes us through the process of teaching Copeland how to control the arm. What was missing, though, was the return sensation that the hand and brain use to confirm whether an item is fully or only partially grasped.

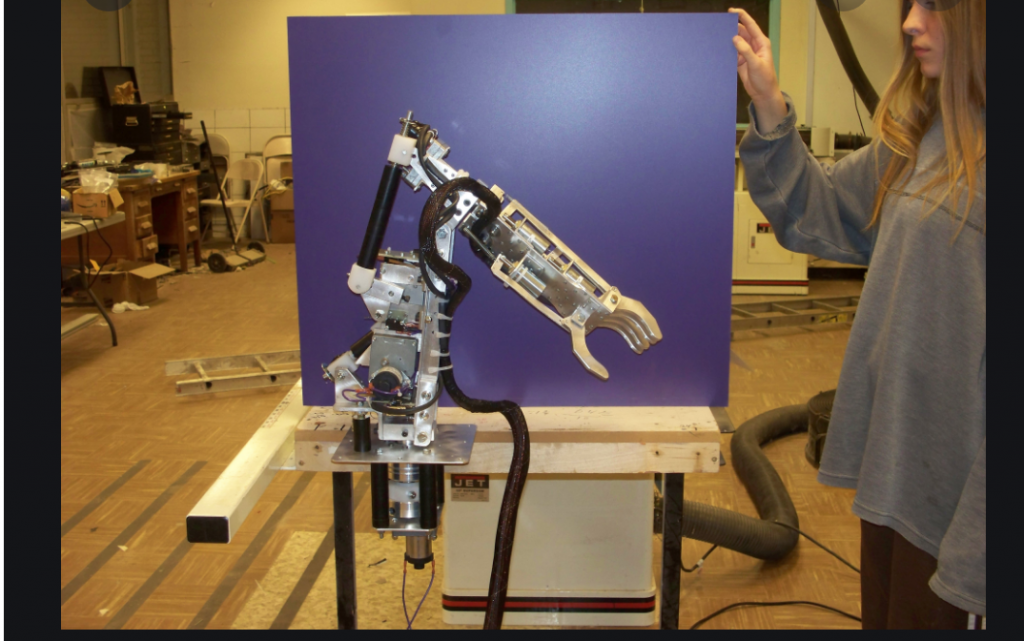

He soon underwent surgery in which doctors tacked lentil-sized electrode arrays onto his motor cortex and somatosensory cortex. These would read the electrical patterns of his brain activity, showing his intentions to move his wrist and fingers. Through something called a brain-computer interface (BCI), these impulses would be translated to control a robotic limb that sat atop a vertical stand beside him in the lab. Copeland began making the commute from his home in Dunbar, Pennsylvania, to Pittsburgh three times a week for lab tests. After three sessions he could make the robot move spheres and grasp cubes—all just by thinking.

TOUCH IS IMPORTANT

Touch is important for restoring mobility, says study author Jennifer Collinger, a biomedical engineer at the University of Pittsburgh. That is because, to take maximum advantage of future BCI prosthetics or BCI-stimulated limbs, a user would need real-time tactile feedback from whatever their hand (or the robotic hand) is manipulating. The way prosthetics work now, people can shortcut around a lack of touch by seeing whether stuff is being gripped by the robotic fingers, but eyeballing is less helpful when the object is slippery, moving, or just out of sight.

In everyday life, says Collinger, “you don’t necessarily rely on vision for a lot of the things that you do. When you’re interacting with objects, you rely on your sense of touch.”

Closing the loop between sending and receiving information was possible in part because Copeland’s brain was not damaged in the original accident, which allowed sensations to be returned to it. The article is in-depth and filled with interesting information on what may be an answer for millions of disabled individuals, one that could help them regain fuller motion in their lives.

Read more at wired.com
