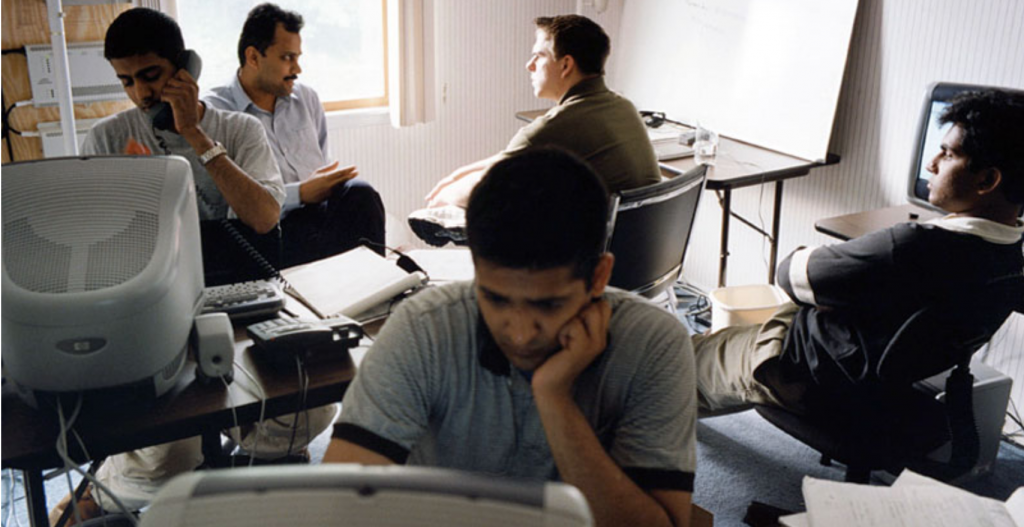

Employees of Qsupport in the new Qsupport branch in Iselin, New Jersey. The company’s headquarters is in Bangalore, India. The images are for a photo documentary series called Computer Caste. (Source: Wim Klerkx, Netherlands)

Many AI Companies Pay Foreign Content Moderators, Image Taggers Low Wages

For decades, technology experts have been warning that AI will take away jobs as robots and automation replace people. While that hasn’t happened at the scale predicted, a different issue has emerged: the creation of low-paying jobs to support algorithms, which require data tags on millions of examples.

According to a story on OneZero on the Medium platform, hourly workers are being paid as little as $8 an hour to tag data. A 50-page research paper from scholars at Princeton, Cornell and the University of Montreal reports that data-labeling companies hire workers from sub-Saharan Africa and Southeast Asia, pay them less than the U.S. minimum wage, and earn millions. Early on, some workers on Amazon Mechanical Turk, an online gig platform, were paid in Amazon gift cards if they didn’t have a U.S. bank account.

One of the most impactful datasets in the history of artificial intelligence, ImageNet, relied on Mechanical Turk workers who were paid $2 per hour, according to the paper.

Lower skilled tech workers, in general, are underpaid in the United States, according to a 2017 study by salary insights platform Paysa. “More than one-third of tech and engineering workers — nearly two million people — are underpaid by 10 percent or more,” a bizjournals.com story reported. More than 78 percent of all technology and engineering workers (3.7 million) were affected.

The ultimate irony of foreign workers tagging data and images is that the data is mostly U.S.-derived and the photos are primarily of white people.

“Images of grooms are classified with lower accuracy when they come from Ethiopia and Pakistan, compared to images of grooms from the United States,” the paper says. “Many of these workers are contributing to AI systems that are likely to be biased against underrepresented populations in the locales they are deployed in, and may not be directly benefiting their local communities.”

The researchers suggest integrating data labelers into the AI development process, and paying them equitably, so “their insight and expertise improve the accuracy of the data collection process.”

The paper points to Masakhane, an organization dedicated to the preservation of African languages through artificial intelligence, as an example of equitable AI development. Masakhane doesn’t create data for AI researchers, but instead fosters a community of people who label, research, and build algorithms for the African continent.

One of the biggest criticisms of AI companies is the lack of diversity in databases, which leads to gender and race discrimination in hiring apps, facial recognition programs and police database IDs. Paying people a fair wage seems a small price to pay to end discrimination that hurts people and leads to lawsuits.

read more at onezero.medium.com
