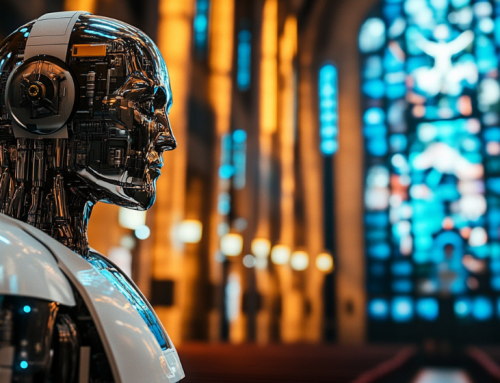

Disinformation campaigns could potentially throw elections into chaos, according to AI experts who want to prevent them from happening. “Election” photo by Nick Youngson. (Source: CC BY-SA 3.0 Alpha Stock Images)

Brookings Exposes Nightmare Scenario of Disinformation Attack on Election Day

The highly respected Brookings Institution has posed a frightening scenario in which an American election is hijacked by unscrupulous people who use AI for criminal purposes. Rapid disinformation attacks, timed for maximum disruptive effect, would be hard to counter before the damage is done.

In an article by John Villasenor, Brookings described the following scenario, one possible route attackers might take to disrupt yet another U.S. election.

The Nightmare Scenario

On the morning of Election Day in a closely contested U.S. presidential election, supporters of one candidate launch a disinformation campaign aimed at suppressing the votes in favor of the opposing candidate in a key swing state. After identifying precincts in the state where the majority of voters are likely to vote for the opponent, the authors of the disinformation attack unleash a sophisticated social media campaign to spread what appear to be first-person accounts of people who went to polling places in those precincts and found them closed. That’s just the beginning.

The attackers have done their homework. For the past several months, they have laid the groundwork, creating large numbers of fake but realistic-looking accounts on Facebook and Twitter. They mobilize those accounts to regularly post and comment on articles covering local and national politics.

The attackers used artificial intelligence (AI) to construct realistic photographs and profiles of account owners and to vary the content and wording of their postings, thereby avoiding the sort of replication likely to trigger detection by software designed to identify false accounts. The attackers have also built up a significant base of followers, both by having some of the attacker-controlled accounts follow other attacker-controlled accounts and by ensuring that the attacker-controlled accounts follow accounts of real people, many of whom follow them in return.

Just after polls open on the morning of Election Day, the attackers swing into action, publishing dozens of Facebook and Twitter posts complaining about showing up at polling locations in the targeted precincts and finding them closed. A typical tweet, sent shortly after the polls opened in the morning, reads “I went to my polling place this morning to vote and it was CLOSED! A sign on the door instructed me to vote instead at a different location!” Dozens of other attacker-controlled accounts “like” the tweet and respond with similar stories of being locked out of polling places. Other tweets and Facebook posts from the attackers include photographs of what appear to be closed polling stations.

It is jarring to think that most of us who use online social platforms have already seen much of this scenario play out before our eyes over the last decade, including the revelations about teams of people in Russia or Ukraine who are paid to disrupt American political discussion on social media as much as possible. And they were quite successful. Remember Cambridge Analytica? In the worst-case scenario, the disinformation attack could lead to tens of thousands of lost votes across the state: enough, as it turns out, to change the election outcome at both the state and national levels.

Risks of Disinformation

Hopefully, the scenario outlined above will never happen. But the fact that it could occur illustrates an important aspect of online disinformation that has not received as much attention as it deserves. Some forms of disinformation can do their damage in hours or even minutes. This kind of disinformation is easy to debunk given enough time, but extremely difficult to debunk quickly enough to prevent it from inflicting damage.

Fortunately, the need to combat online disinformation has received increasing attention from academic researchers, civil society groups, and the commercial sector, including both startups and established technology companies. This has led to a growing number of paid products and free online resources to track disinformation. Part of the solution involves bot detection, as bots are often used to spread disinformation. But the overlap is not complete: bots are also used for many other purposes, some nefarious and some innocuous, and not all disinformation campaigns involve bots.
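To make the detection idea concrete, here is a minimal Python sketch of one naive signal: different accounts posting nearly identical text within a short time window. Everything in it is an assumption made for illustration (the `Post` fields, the account names, the 0.6 similarity threshold, and the 30-minute window); it is not how any platform or product mentioned here actually works. It also catches only crude replication, which is exactly what the attackers in the scenario avoid by varying their wording, so real systems would have to combine many more signals.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Post:
    account: str   # hypothetical account handle
    minute: int    # minutes since polls opened (illustrative time unit)
    text: str


def word_set(text: str) -> frozenset:
    """Lowercase the text and return its alphanumeric words as a set."""
    cleaned = "".join(ch.lower() if ch.isalnum() else " " for ch in text)
    return frozenset(cleaned.split())


def jaccard(a: frozenset, b: frozenset) -> float:
    """Share of words two posts have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_coordinated(posts, min_similarity=0.6, window_minutes=30):
    """Return accounts that posted near-identical text close together in time."""
    flagged = set()
    for p, q in combinations(posts, 2):
        if p.account == q.account:
            continue  # one account repeating itself is not coordination
        if abs(p.minute - q.minute) > window_minutes:
            continue  # too far apart in time to count as a burst
        if jaccard(word_set(p.text), word_set(q.text)) >= min_similarity:
            flagged.update({p.account, q.account})
    return flagged


# Made-up sample posts, loosely modeled on the tweet quoted in the scenario.
posts = [
    Post("acct_a", 5, "I went to my polling place this morning and it was CLOSED!"),
    Post("acct_b", 9, "Went to my polling place this morning and it was closed!"),
    Post("acct_c", 12, "Long line at the library but voting went smoothly."),
]
print(sorted(flag_coordinated(posts)))
```

With the sample data, the first two accounts are flagged and the third is not. A flag like this is only a prompt for human review, since real voters can also post similar complaints at the same time.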

Another tool to fight disinformation is Hoaxy, from Indiana University’s Observatory on Social Media, which can be used to “observe how unverified stories and the fact checking of those stories spread on public social media.”

The article explores potential disinformation in election cycles and the tools companies are developing to battle the major disinformation campaigns that have been plaguing both the democratic process for selecting leaders and the process of educating the young.

read more at brookings.edu
