Algorithm Translates Brain Waves into Words

“We’ve shown that, with the right technology, these people’s thoughts could be decoded and understood by any listener,” said Nima Mesgarani, an electrical engineer at Columbia University’s Zuckerman Institute.

An article published in the journal Scientific Reports details how the team at Columbia University’s Zuckerman Mind Brain Behavior Institute used deep-learning algorithms and the same type of technology that powers devices like Apple’s Siri and the Amazon Echo to turn thought into “accurate and intelligible reconstructed speech.”

The journal reported the research earlier this month, but the article goes into far greater depth.

The research started with five epilepsy patients who agreed to take part in the study and had electrodes attached to the surface of their brains. The tests included reading, listening to sentences and listening to someone counting numbers. Researchers fed the recorded data into a vocoder algorithm that sorted out the brain signals, and a neural network cleaned up the result, producing a robot-like speech pattern. You can listen to the results.

The potential for widely available technology based on this research to assist people with disabilities is staggering. Imagine if Stephen Hawking had had that technology instead of painstakingly selecting words one at a time for a speech synthesizer.

“It would give anyone who has lost their ability to speak, whether through injury or disease, the renewed chance to connect to the world around them,” Mesgarani said.

Read more at https://cnet.co/2HJd2B0

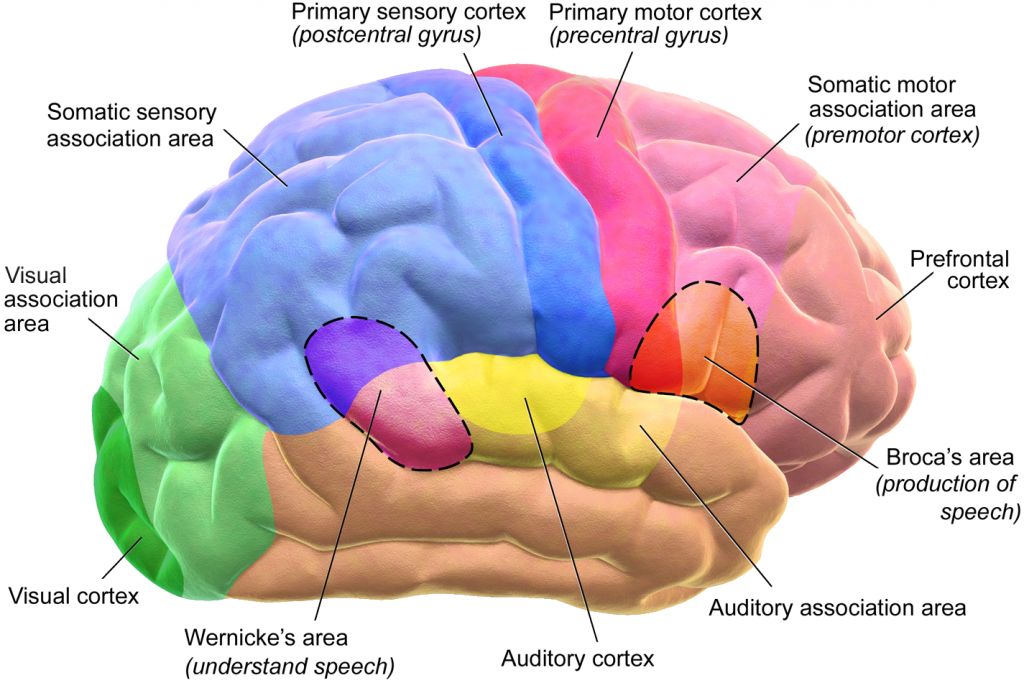

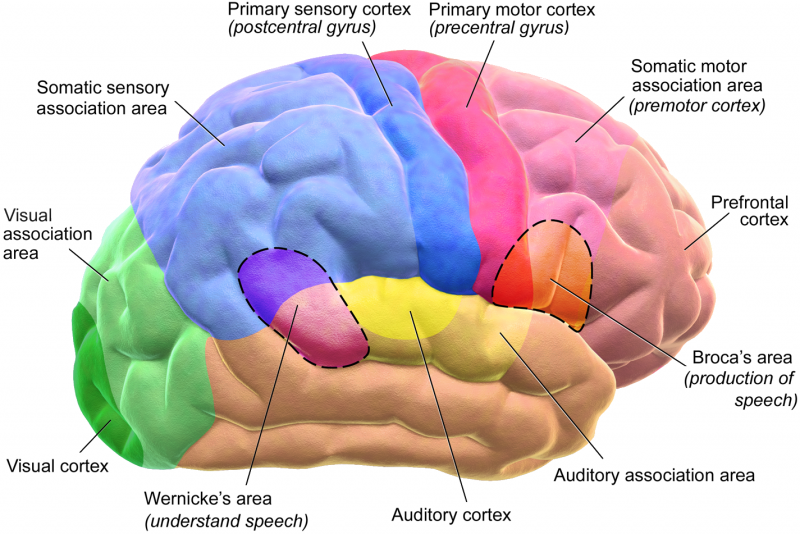

The human brain and its functions. Credit: Wikipedia
