Orlando Police Test Amazon’s ‘Rekognition’ Tools

Last week, NPR broke the news that Orlando police began testing Amazon’s Rekognition visual classification AI for use in real-time facial recognition through public surveillance cameras. While police departments already surveil Americans en masse on public roadways through computer vision systems designed to read license plates, real-time facial recognition AI technology raises troubling concerns over privacy, legality and liberty.

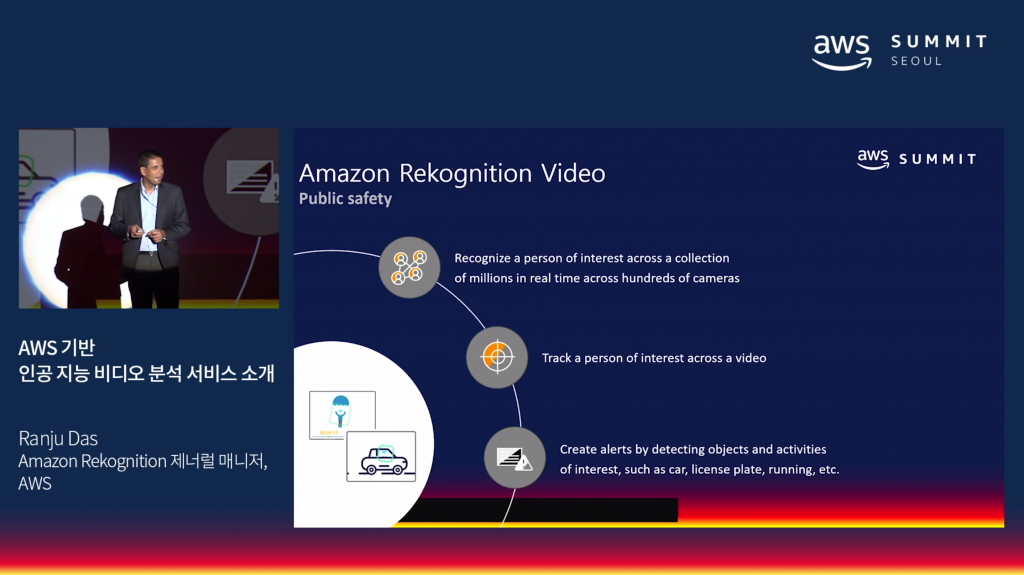

NPR’s story referenced a video from earlier in May posted by Amazon of an AWS developer conference in Seoul, South Korea where Amazon’s Rekognition director Ranju Das spoke at length on a variety of Rekognition use cases, including potential law enforcement and public security uses such as identifying and tracking “persons of interest.” Das described the City of Orlando as a “launch partner of ours” who “have cameras all over the city.” Das presented test footage of the recognition software in action on a residential street, adding that “we analyze the video in real-time, search[ing] against the collection of faces they have.” See Das’ discussion of Rekognition, including its use in Orlando, here.

Head of Amazon’s Rekognition division, Ranju Das, speaking in April about public safety applications of the company’s visual recognition AI. Via YouTube/Amazon.

In a correction posted on 24 May, Amazon released a statement that Das “got confused and misspoke” during the presentation, clarifying that “The City of Orlando is testing Amazon’s Rekognition Video and Amazon Kinesis Video Streams internally to find ways to increase public safety and operational efficiency, but it’s not correct that they’ve installed cameras all over the city or are using in production.”

While it’s unclear in the video if the footage was of an actual public road, a pre-staged test, or simply simulated footage edited for demonstration purposes, the presentation hints at the AI’s capabilities to identify different pedestrians, cars and even pets in the video frames—capabilities that the City of Orlando is indeed testing, whether “all over the city” or not.

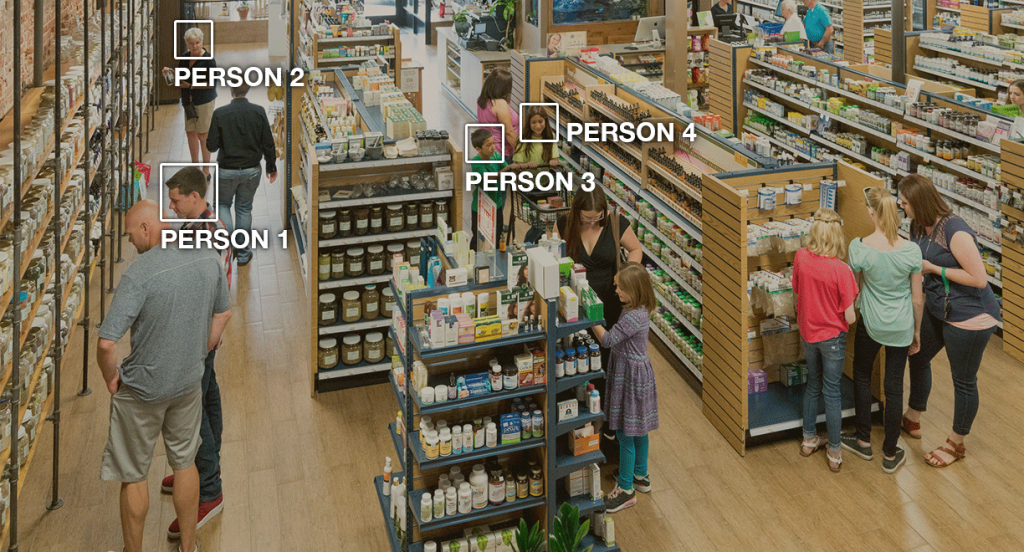

Even Rekognition’s own website, however, touts Orlando’s active participation in testing the technology. The website describes many of Rekognition’s abilities and clearly markets some of its capabilities for security applications, stating that “[y]ou can detect, analyze, and compare faces for a variety of user verification, cataloging, people counting and public safety use cases,” noting that Rekognition “can track people through a video even when their faces are not visible, or as they go in and out of the scene. You can also identify their movements in the frame to tell things like whether someone was entering or exiting a building.”

An image from Rekognition’s website demonstrating one potential use for identifying people on CCTV security. Image via Amazon.

Amazon quotes John Mina, the Chief of Police at Orlando Police Department, who describes the company’s programs:

“The City of Orlando is excited to work with Amazon to pilot the latest in public safety software through a unique, first-of-its-kind public-private partnership. Through the pilot, Orlando will utilize Amazon’s Rekognition Video and Amazon Kinesis Video Streams technology in a way that will use existing City resources to provide real-time detection and notification of persons-of-interest, further increasing public safety and operational efficiency opportunities for the City of Orlando and other cities across the nation.”

According to written statements issued by the City of Orlando in the wake of NPR’s reporting, the Orlando Police Department admits that it is actively employing Rekognition AI; however, the city claims that the technology is limited to a “pilot program” to test the AI and “keep the residents and visitors of Orlando safe.” Furthermore, the city clarified the scope of the test, stating that “[Orlando] is only using facial imagining for a handful of Orlando police officers who volunteered and agreed to participate in the test pilot” and that testing on surveillance cameras is “limited to only 8 City-owned cameras.”

The city also stated that it is not storing facial data of members of the public, and stressed that “[t]he Orlando Police Department is not using the technology in an investigative capacity or in any public spaces at this time,” adding that “[a]ny use of the system will be in accordance with current and applicable law.”

However, questions remain: given the delicate wording of Orlando’s releases on the issue, it’s unclear whether the pilot technology had been used in public prior to the statements. Regardless of the extent of Orlando’s Rekognition pilot, even if the city were employing the technology in wide public usage for policing, it would likely be operating within the scope of current laws. No current laws outright ban the police from using real-time facial recognition AI and, as with many of the digital privacy issues surfacing over the past decade, little or no legal precedent exists. Federal courts have yet to face cases on the constitutionality of facial recognition AI.

The NPR report cited Matt Cagle of the ACLU of Northern California. The ACLU has requested records on Amazon’s communication with the Orlando Police Department as well as the Washington County Sheriff’s Office in Oregon, which also uses Rekognition AI to identify potential suspects in crime scene stills. Cagle describes the ACLU as “blowing the whistle” on the invasive technology, claiming that “Amazon is handing governments a surveillance system primed for abuse.”

Echoing NSA whistleblower Edward Snowden’s description of the federal surveillance state as “turnkey tyranny,” Cagle warns of the potential for misuse of the technology, saying that “[a]ctivating a real-time facial recognition system, that can track people, if the technology is there, could be as simple as flipping a switch in some communities.”

Above: a report by the Wall Street Journal on China’s own surveillance state in which the government actively observes, identifies, and tracks the public without consent or legal restriction.

The controversy over Orlando’s use (or “pilot program,” as officials claim) of real-time pedestrian identification directly conjures visions of surveillance dystopias in literature and film such as 1984 and Minority Report. However, governments today are already wielding some of the same AI-empowered surveillance systems once safely relegated to science fiction. Orlando’s use of Rekognition AI, a case likely being watched with eager eyes by other state, local and federal agencies, raises discomforting associations with existing governments wielding dystopian powers of oversight against their own populations. China, the most notorious and powerful of the globe’s security states, employs millions of cameras to identify and track every move of the nation’s more than 1.3 billion citizens.

Amazon absolves itself of all blame in the matter. The company stated that it required all of its customers to act in accordance with existing laws and “be responsible,” a laughable proposition given the impossibility of enforcing such a policy, especially in the face of lucrative government contracts. Additionally, Amazon countered criticisms of its technology’s uses by claiming that the benefits of such technologies outweighed the risk of unethical or illicit uses, mirroring Google’s defenses of its own competing computer vision AI in a controversial DoD drone program.

According to Amazon: “[o]ur quality of life would be much worse today if we outlawed new technology because some people could choose to abuse the technology. Imagine if customers couldn’t buy a computer because it was possible to use that computer for illegal purposes?”
