Highlights: AI for Accessibility, Cortana Works with Alexa

Last week’s Microsoft Build 2018 developer conference revealed several new directions for the company, including further investment in AI. For instance, Microsoft pledged $25 million to its AI for Accessibility program, which will work with developers, universities and other organizations in the disability sector to find ways to help people with disabilities.

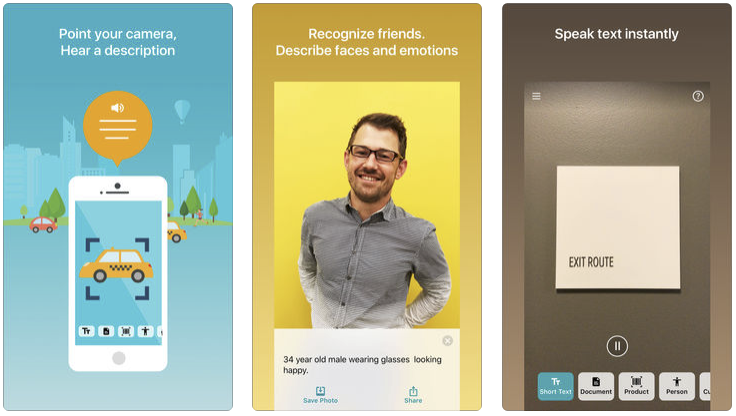

Seeing AI is an example of a Microsoft app currently used by people with low vision.

https://www.microsoft.com/en-us/seeing-ai/

Other major announcements were the fruition of a partnership between Microsoft’s Cortana digital assistant and Amazon’s Alexa, and open source access to the cloud-based Azure IoT Edge Runtime.

Microsoft’s partnership with Amazon will let users hail an Uber with Alexa on a Windows 10 device or check their schedule with Cortana on an Amazon Echo, making for a more seamless experience across devices. So far the company hasn’t set a date for activating the crossover.

Microsoft also added support to the Azure Kubernetes Service (AKS), made Azure Maps generally available and partnered with DJI and Qualcomm on its Azure, AI and IoT products. The company also announced plans to release Project Kinect for Azure, a package of sensors that includes a depth camera.

With more than 100 new features, the Microsoft Azure Bot Service will enable the 300,000 developers who use it to access more conversational AI tools and services in the cloud.

Read more at venturebeat.com
