AI Researcher Urges Ethics Training for Developers

AI risks doing society more harm than good if today’s developers, researchers, and academics continue to neglect the crucial ethical and philosophical considerations in their trade, says one researcher who is calling for an increased emphasis on ethical standards and education “or the darkest of science fiction will become reality.”

Catherine Stinson, a former AI researcher and current scholar at the Rotman Institute of Philosophy and University of Western Ontario, argued in an editorial published in Canada’s The Globe and Mail that today’s AI leadership is prioritizing advancement and profit at the expense of the ethical precautions needed to ensure that AI’s benefits outweigh its risks. Describing herself as “someone who [has] played for both teams,” with prior experience developing AI as well as her present postdoctoral work on AI ethics, Stinson says that while “there are indeed genuine risks we ought to be very worried about” with regard to all-powerful AI, most of the technology’s most pressing risks aren’t being addressed.

Not so fast, Clippy: Stinson asserts that popularized doomsday AI scenarios aren’t as risky as today’s seemingly mundane AI employed too hastily or with malicious intent. Via Reddit.

Stinson views some of the more extreme and hysterical AI scenarios (such as an omnipotent murderous AI or the “paperclip maximizer” thought experiment popularized by Bostrom) as headline fodder born of popular misconception, and she feels that such apocalyptic end states of superintelligence are rooted in fallacy or “possible, but not very probable.” Instead, she offers, we’re far more likely to face risks from malevolent uses of AI than from malevolent AI itself. She notes explicit examples of harmful AI such as autonomous weapons systems, but also less apparent, and at least as dangerous, downstream risks of bad AI: a technologically perfect global surveillance state, manipulation of entire populations à la Cambridge Analytica, or bias and inaccuracy unintentionally built into the AI programs that will increasingly govern everything from our healthcare to our justice systems.

Central to the problem, Stinson asserts, are the prevailing attitudes within the tech industry as well as a dearth of ethics education within the field. She describes the Silicon Valley set as suffering from a certain myopia she calls “nerd-sightedness,” which she defines as “a tendency in the computer-science world to build first, fix later, while avoiding outside guidance during the design and production of new technology.”

This all-too-prevalent nerd-sightedness in the insular tech community stems from what the NYT calls an irresponsible “build it first and ask for forgiveness later” attitude towards ethics, reinforcing a culture that prioritizes pure technological innovation or competitive advantage while either willfully neglecting the ethical considerations of potential externalities or, perhaps worse yet, assuming that such unintended consequences can’t happen. From Stinson:

“If you ask a coder what should be done to make sure AI does no evil, you’re likely to get one of two answers, neither of which is reassuring. Answer No. 1: ‘That’s not my problem. I just build it,’ as exemplified recently by a Harvard computer scientist who said, ‘I’m just an engineer’ when asked how a predictive policing tool he developed could be misused. Answer No. 2: ‘Trust me. I’m smart enough to get it right.’”

Stinson likens this attitude to that of Dr. Frankenstein in Mary Shelley’s 200-year-old classic novel. Frankenstein, “perhaps the most famous warning to scientists not to abdicate responsibility for their creations,” is remarkably prescient and relevant at the cusp of the AI age. Shelley’s parable addresses the folly of a similarly “nerd-sighted” scientist intent on creating life for the betterment of mankind, with no regard for the nightmarish consequences to come:

“Frankenstein begins with the same lofty goal as AI researchers currently applying their methods to medicine: ‘What glory would attend the discovery if I could banish disease from the human frame and render man invulnerable to any but a violent death!’ In a line dripping with dramatic irony, Frankenstein’s mentor assures him that ‘the labours of men of genius, however erroneously directed, scarcely ever fail in ultimately turning to the solid advantage of mankind.’”

While strict ethical standards and regulations govern medicine and genetic research today (preventing, in principle, any would-be Doctor Frankensteins from attempting to reanimate the dead), Stinson notes that such rigorous traditions of ethical responsibility and oversight, like medicine’s Hippocratic Oath, are entirely absent from the technology industry at large. Given the potential global import of AI technology, it’s crucial that the tech industry instill ethical thinking across its ranks, beginning in the university where, for all the breakthrough advances in AI, students receive little or no training in how to ethically contextualize their work.

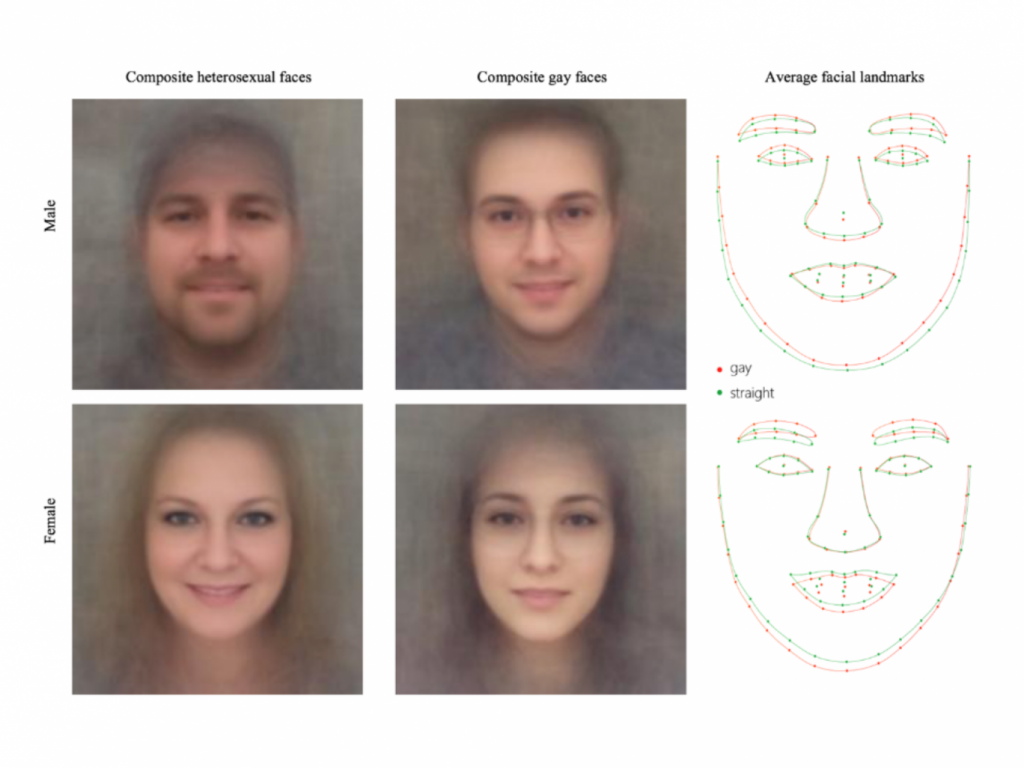

A Stanford University AI project in 2017 was able to identify gay faces with startling accuracy, leading to protests that such a technology could be feasibly implemented today by hate groups or repressive regimes to out and persecute LGBTQ individuals, an obviously unintended use case for the engineers who created the AI and the Stanford researchers involved in the study.

According to Stinson, ethics courses are “rarely” required for computer-science degrees, and most graduates “don’t get any ethics training at all,” meaning most AI researchers don’t have the training necessary to understand the implications of the AI they create.

“Steve Easterbrook, the professor in charge of the computers and society course [at the University of Toronto], agrees that there needs to be more ethics training, noting that moral reasoning in computer-science students has been shown to be ‘much less mature than students from most other disciplines.’ Instead of one course on ethics, he says ‘it ought to be infused across the curriculum, so that all students are continually exposed to it.’”

Another professor cited by Stinson, from UNC Chapel Hill, agrees:

“You have to integrate ethics into the computer science curriculum from the first moment you teach students here’s a variable, here’s an array, and look, we can sort things. When you run your first qsort, you’ve encountered ethical and ontological questions. Let’s talk about them.”

— zeynep tufekci (@zeynep) February 13, 2018

In addition to enlightening today’s learners so that the developers, engineers, and scientists of the future have the moral and ethical literacy perilously absent in the field today, Stinson recommends that “representatives from the populations affected by technological change should be consulted about what outcomes they value most.” Today’s nascent AI technologies, developed in the extremely narrow milieu of Silicon Valley developers and academic researchers, may one day govern much of society and have second-, third-, and n-th-order reverberations throughout it. Having representatives and advisors from the rest of us unwashed masses lend perspective at all phases of AI development and implementation will therefore be crucial.

Finally, Stinson advocates for an increased presence of non-technical professionals in AI spaces, for example by “inviting philosophers, sociologists and historians of science to the table […] to avoid potentially costly misunderstandings.”

If every computer-science student were taught to look for social and ethical problems as soon as they learn about variables, arrays and sorting, as Prof. Tufekci suggests, they would realize that the way health data is coded matters very much. For example, recording age as a range, such as under 19, 19-24, 25-35, may seem harmless, but whether a patient is 1 or 17 can make a big difference to their health-care needs. An algorithm that doesn’t take that into account could make fatal mistakes – for example, by suggesting the wrong dosage.
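To make the age-coding point concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not anything taken from Stinson's editorial: the function names `age_bucket` and `recommend`, and the catch-all "over 35" category, are hypothetical. It shows only that once age is stored as a range, a 1-year-old and a 17-year-old become indistinguishable to any rule that sees the range alone.

```python
def age_bucket(age_years: int) -> str:
    """Map an exact age to the coarse ranges mentioned in the article."""
    if age_years < 0:
        raise ValueError("age must be non-negative")
    if age_years < 19:
        return "under 19"
    if age_years <= 24:
        return "19-24"
    if age_years <= 35:
        return "25-35"
    # Assumed catch-all: the article lists no ranges beyond 25-35.
    return "over 35"


def recommend(bucket: str) -> str:
    """Hypothetical downstream rule that only ever sees the bucket."""
    return f"standard plan for patients {bucket}"


infant, teenager = 1, 17

# Both patients collapse into the same category, so the exact age is lost.
same_bucket = age_bucket(infant) == age_bucket(teenager)

# Any rule keyed on the bucket alone must treat them identically.
same_recommendation = (
    recommend(age_bucket(infant)) == recommend(age_bucket(teenager))
)

print(age_bucket(infant), age_bucket(teenager), same_bucket, same_recommendation)
```

Keeping the exact age (or at least much finer ranges for children) alongside the bucket would let a downstream rule tell these two patients apart.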

By addressing the lack of ethical education and consideration and potentially changing the very cultural foundations of the tech world, we might be able in the coming AI age to prevent costly mistakes such as the example above, as well as spare society the grim fates of AI-powered totalitarianism, autonomous weapons, and a host of other truly terrifying consequences of algorithms put to malevolent use or simply gone awry.
