Photo Credit: Christie Hemm Klok for The New York Times

Experts Say Human Oversight Crucial to AI’s Development

As many AI computers begin teaching themselves and other computers, a lack of the human oversight would become problematic, according to a leading AI authorities. In an August 2017 New York Times story, writer Cade Metz points out that a “parental” approach taken by companies and their AI algorithms are crucial to proper training.

The power and the speed at which these new commuters operate make them vulnerable to bad programming. Far too many hackers in cyberspace could cause an unimaginable amount of damage if AI-operated systems become compromised and are reprogrammed to stop being friendly to humans. “Tay” the chatbot represents a classic example of how much damage trolls can do when they target a system.

This is how Tay started out.

In less than 24 hours, Tay came up with this gem.

The conclusion is that “parental” oversight by humans paired with self-training AI systems is not only desirable, but necessary.

At OpenAI, the artificial intelligence lab founded by Tesla’s chief executive, Elon Musk, machines are teaching themselves to behave like humans. But sometimes, this goes wrong.

The New York Times story describes how OpenAI’s researcher Dario Amodei showed off an autonomous system that taught itself to play Coast Runners, an old boat-racing video game. The winner is the boat with the most points that also crosses the finish line. The result? The boat was too interested in the little green widgets that popped up on the screen to finish the race. Catching these widgets meant scoring points. Rather than achieving its objective to finish the race, the boat went point-crazy. It drove in endless circles, colliding with other vessels, skidding into stone walls and repeatedly catching fire.

Mr. Amodei’s burning boat demonstrated the risks of AI techniques that are rapidly remaking the tech world. Researchers are building machines that can learn tasks largely on their own. This is how Google’s DeepMind lab created a system that could beat the world’s best player at the ancient game of Go. But as these machines train themselves through hours of data analysis, they may also find their way to unexpected, unwanted and perhaps even harmful behavior.

That’s a concern as these techniques move into online services, security devices and robotics. Now, a small community of AI researchers, including Mr. Amodei as well as Google’s Ian Goodfellow, are beginning to explore mathematical techniques that aim to keep the worst from happening.

“If you train an object-recognition system on a million images labeled by humans, you can still create new images where a human and the machine disagree 100 percent of the time. We need to understand that phenomenon.”

–Ian Goodfellow, Research Scientist at Google

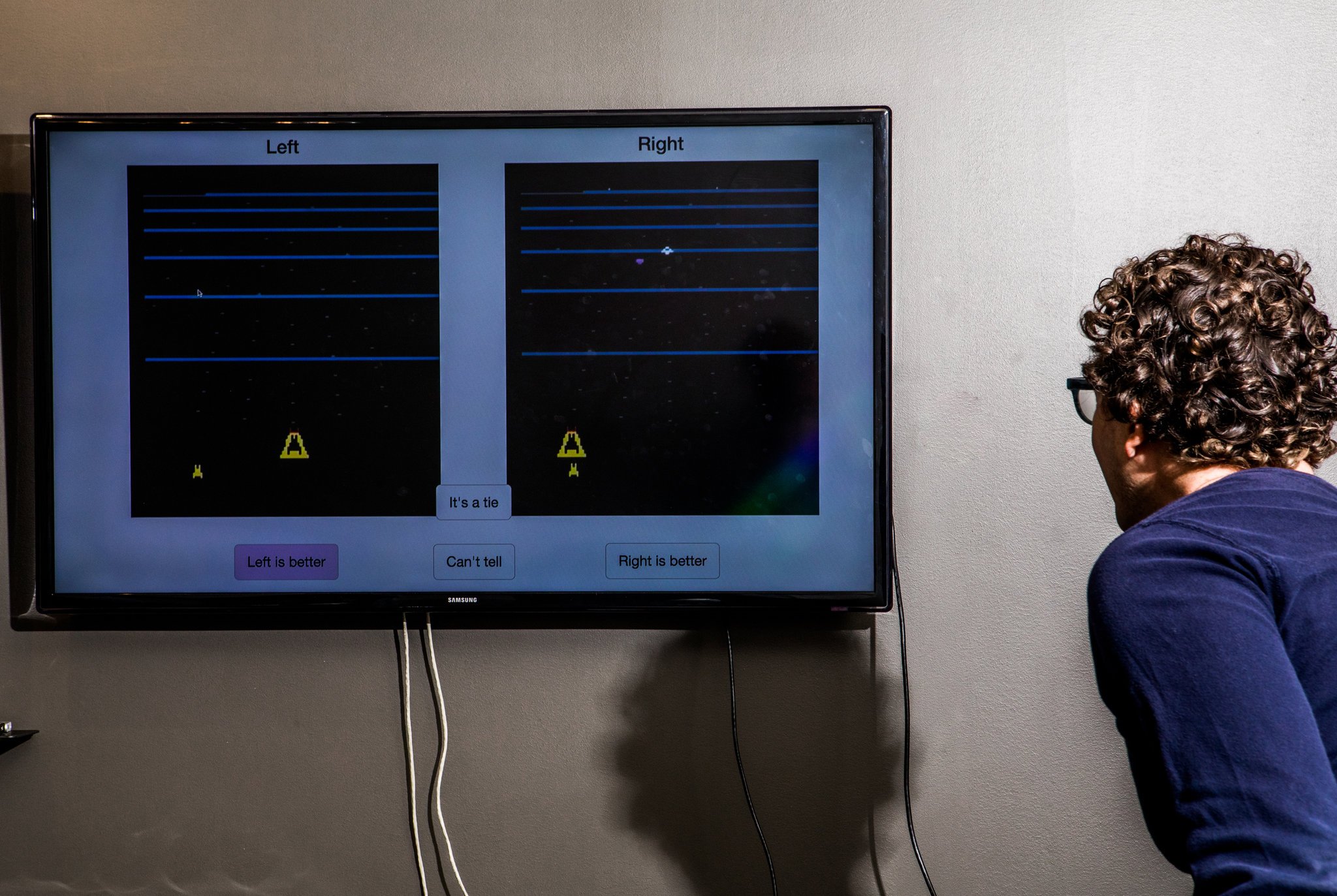

“Mr. Amodei and his colleague Paul Christiano are developing algorithms that can not only learn tasks through hours of trial and error, but also receive regular guidance from human teachers along the way,” according to the the NYT story. “They believe that these kinds of algorithms—a blend of human and machine instruction—can help keep automated systems safe.”

Another legitimate worry is that AI systems will learn to prevent humans from turning them off. If the machine is designed to chase a reward, it may find that it can chase that reward only if it stays on. This oft-described threat is much further off, but researchers are already working to address it.

It sounds a lot like the computer dubbed Hal in the film “2001: A Space Odyssey,” named after an actual computer system at the University of Illinois, Champaign-Urbana. Hal wouldn’t follow orders⎯putting the astronauts’ lives in jeopardy. The greatest fear regarding AI can be summed up by that scenario. Humans will be incapable of matching the power and the speed at which these machines will operate, making split decisions. Criminally-minded programmers have already caused tremendous damage by deploying viruses. It will be up to the creators of AI to figure out a way to keep it “human friendly” in spite of potential sabotage and hacker disruption.

Leave A Comment