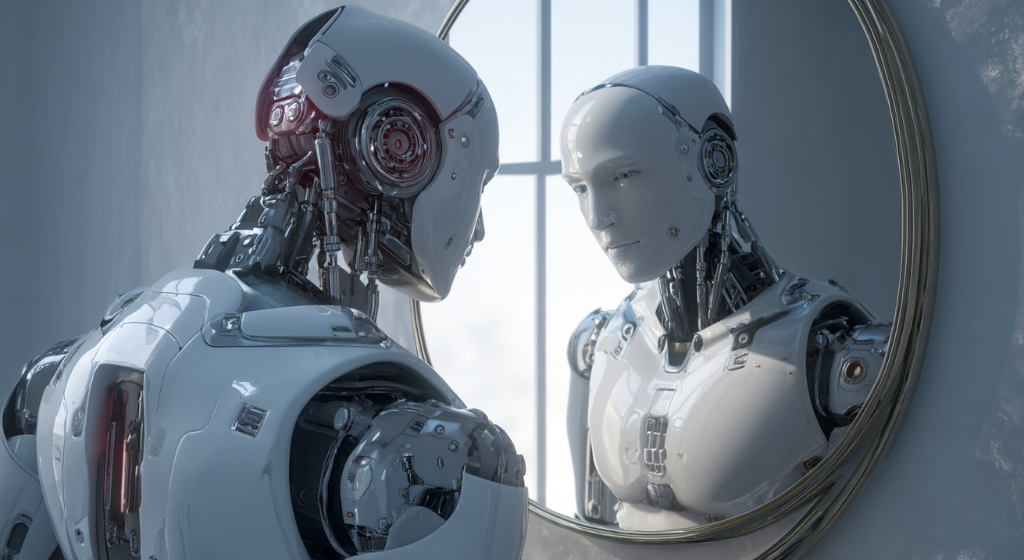

MIT’s new Neural Jacobian Fields system teaches robots bodily self-awareness using only vision, eliminating the need for embedded sensors and enabling real-time, self-supervised control across diverse robot types. (Source: Image by RR)

Robots Use Visual Feedback to Develop Internal Body Maps

MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has unveiled a groundbreaking robotic control system that lets machines develop a form of bodily self-awareness using vision alone. Dubbed Neural Jacobian Fields (NJF), the technology eliminates the need for embedded sensors or hand-coded control models: robots work out how their own bodies move in response to motor commands purely by watching themselves. As reported by MIT News (news.mit.edu), the development marks a major advance toward robots that are more flexible, more accessible, and better able to operate in unstructured real-world environments.

The system mirrors how humans learn to control their bodies: a robot performs random movements while cameras record how each motor command affects different parts of its body. Over time, NJF builds an internal model not just of the robot’s 3D shape but of how each point on that body moves in response to motor commands, a mapping known as a Jacobian field. The neural model builds on Neural Radiance Fields (NeRF), allowing it to infer both geometry and this motor sensitivity from visual data alone. Once trained, the robot needs only a single camera for real-time closed-loop control, which makes it faster and more adaptable than approaches built on traditional physics-based simulation.
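The idea behind the "Jacobian" in NJF can be illustrated with a deliberately tiny example. The sketch below is not MIT’s implementation: instead of a neural field trained on multi-view video, it assumes a simulated two-link planar arm whose fingertip position stands in for what a camera would observe. It estimates the local Jacobian (how far the point moves per unit change in each motor command) by least squares from small random motor perturbations, then uses that estimate for closed-loop control. The arm model, link lengths, gains, and all function names (`observe_point`, `estimate_jacobian`, `reach`) are hypothetical.

```python
import math
import random


def observe_point(u):
    """Hypothetical stand-in for the camera: 2D position of one tracked point
    (the tip of a 2-link planar arm) under motor command u = (u0, u1)."""
    l1, l2 = 1.0, 0.8  # assumed link lengths
    x = l1 * math.cos(u[0]) + l2 * math.cos(u[0] + u[1])
    y = l1 * math.sin(u[0]) + l2 * math.sin(u[0] + u[1])
    return (x, y)


def inv2(m):
    """Inverse of a 2x2 matrix stored as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul2(m, n):
    """Product of two 2x2 matrices."""
    return [[sum(m[i][k] * n[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def matvec2(m, v):
    """Product of a 2x2 matrix and a 2-vector."""
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])


def estimate_jacobian(u, rng, n_samples=20, scale=1e-3):
    """Least-squares fit of J in dp ~= J du, using small random motor
    perturbations ("motor babbling") around command u."""
    p0 = observe_point(u)
    A = [[0.0, 0.0], [0.0, 0.0]]  # accumulates du du^T
    B = [[0.0, 0.0], [0.0, 0.0]]  # accumulates dp du^T
    for _ in range(n_samples):
        du = (rng.uniform(-scale, scale), rng.uniform(-scale, scale))
        p = observe_point((u[0] + du[0], u[1] + du[1]))
        dp = (p[0] - p0[0], p[1] - p0[1])
        for i in range(2):
            for j in range(2):
                A[i][j] += du[i] * du[j]
                B[i][j] += dp[i] * du[j]
    # J = B A^-1 minimizes the sum of |dp - J du|^2 over all samples.
    return matmul2(B, inv2(A))


def reach(target, u, rng, steps=50, gain=0.5):
    """Closed loop: observe the point, re-estimate J, step toward the target."""
    for _ in range(steps):
        p = observe_point(u)
        err = (target[0] - p[0], target[1] - p[1])
        du = matvec2(inv2(estimate_jacobian(u, rng)), err)
        u = (u[0] + gain * du[0], u[1] + gain * du[1])
    return u


rng = random.Random(0)
target = (0.9, 1.0)
u_final = reach(target, (0.3, 0.8), rng)
x, y = observe_point(u_final)
print(f"reached ({x:.4f}, {y:.4f}) for target {target}")
```

In this toy the Jacobian is re-estimated at every step because a single estimate is only valid near the current command; a learned model such as NJF instead aims to predict this motor sensitivity directly from what the camera sees.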

NJF’s vision-based control proved effective across a range of platforms, including soft pneumatic hands, rigid robotic arms, and even platforms with no embedded sensors. Because the method does not depend on embedded sensors, it leaves designers free to build unconventional robot bodies, especially in soft robotics, where traditional modeling is often infeasible. The system remains robust even when the training data is noisy or incomplete, and it lets robots adapt autonomously: no prior knowledge, no human supervision, and no external tracking systems are required.

The current version of NJF must be trained separately for each robot using multiple cameras, but the researchers hope to make the system more accessible. A future version may let users capture training footage with a smartphone, so that even hobbyists could train robots without expensive infrastructure. Limitations remain, including a lack of tactile sensing and difficulty generalizing across robot types. Even so, NJF signals a shift away from rigid programming and toward embodied learning, bringing the dream of truly self-aware, self-sufficient robots closer to reality.

Read more at news.mit.edu
