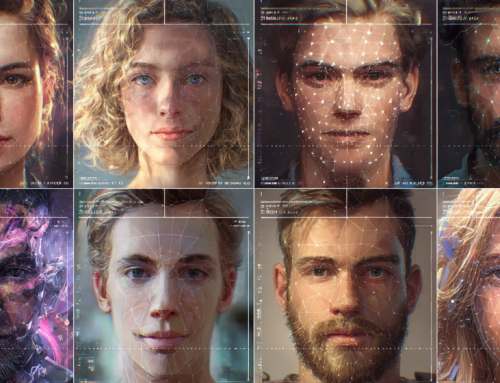

The well-documented inaccuracies of generative AI tools such as ChatGPT conflict with the EU’s General Data Protection Regulation (GDPR), which mandates accurate processing of personal data. They also expose companies like OpenAI to potentially hefty penalties and regulatory orders that could significantly alter how they operate within the EU. (Source: Image by RR)

Privacy Concerns Escalate as ChatGPT Fails to Correct Personal Data Errors

OpenAI is grappling with another privacy complaint in the European Union, filed by the privacy rights nonprofit “noyb.” The complaint targets ChatGPT’s “hallucination” problem, in which the AI chatbot generates incorrect information about individuals; in this case, an erroneous birth date for a public figure. The issue puts OpenAI at odds with the EU’s General Data Protection Regulation (GDPR), which requires that personal data be accurate and gives individuals the right to have incorrect data corrected. As reported by techcrunch.com, the complaint was lodged with the Austrian data protection authority. It argues that OpenAI’s response, which was to claim that correction is technically impossible and to offer to block the data instead, fails to comply with GDPR requirements.

OpenAI’s privacy policy does allow users to request corrections of inaccuracies or complete removal of their personal data from ChatGPT’s outputs. However, the company warns that due to the technical complexities of its AI models, it might not always be able to make the requested corrections. This situation highlights a significant challenge in reconciling the operational characteristics of AI technologies with stringent legal standards like those of the GDPR, which also requires transparency about data sources and storage that OpenAI reportedly struggles to provide.

The broader implications of these GDPR compliance issues are considerable. noyb’s complaint isn’t just about a single data error; it questions the fundamental ability of AI systems like ChatGPT to meet EU legal standards on data accuracy and transparency. The complaint argues that if an AI system can’t reliably produce accurate and transparent data about individuals, it shouldn’t be processing data about them at all. This stance is mirrored by other ongoing investigations across the EU, including a significant one in Italy, where the data protection authority is still reviewing a draft decision on OpenAI’s alleged GDPR violations concerning misinformation and data processing transparency.

As these legal challenges mount, OpenAI faces increasing regulatory pressure across the EU, with multiple investigations that could lead to hefty fines and demands for operational changes. The company has already tried to mitigate these risks by establishing a regional office in Dublin, hoping to centralize the handling of such complaints via Ireland’s Data Protection Commission. This move aims to streamline the oversight process under GDPR mechanisms designed for handling cross-border complaints in the EU. However, with ongoing complaints and investigations in several member states, OpenAI’s strategy and its compliance with EU data protection laws remain under critical examination.

Read more at techcrunch.com