Prof. Kim of Korea points out four issues that must be addressed when introducing AI to a company.

Professor: Incorporating AI Will Improve Efficiencies in Every Industry

Ben Sherry, a writer for inc.com, reports on four important hurdles that anyone hoping to add AI to their company’s operations should be able to clear. Almost everyone is aware of how much AI has already changed many industries, and work once thought to be beyond the reach of AI is now being automated. Chatbots and writing apps are doing jobs that few thought possible just a few years ago.

In 2013, researchers at Oxford published an analysis of the jobs most likely to be threatened by automation and AI. At the top of the list were occupations such as telemarketing, hand sewing, and brokerage clerking. AI-driven robots already do repetitive labor in factories and similar settings.

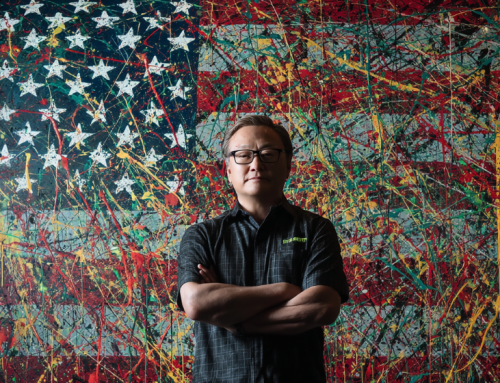

During a keynote presentation at 2022’s Annual Conference on Neural Information Processing Systems, Juho Kim, an associate professor at the Korea Advanced Institute of Science & Technology (KAIST), laid out the four main challenges facing the development of new AI-powered tools. In doing so, he explained how incorporating robust opportunities for feedback will allow developers to create systems that are even friendlier to us humans.

1. Bridging the accuracy gap

According to Kim, one of the most difficult aspects of creating AI is aligning its functionality with how users expect it to work, and bridging the gap between the user’s intention and the system’s output. Kim says most AI developers focus on expanding their systems’ datasets and models, but this can widen the accuracy gap, where the system can function very well but only for people who already know how to use it. One solution? Incorporate human testers. By analyzing feedback from real people, developers can identify errors and unintended biases.

2. Incentivizing users to work with AI

Kim says, “Unfortunately, one of the most common patterns we see in Human-AI interaction is that humans quickly abandon AI systems because they aren’t getting any tangible value out of them.” One way that Kim says developers can solve this issue is by prioritizing “co-learning” when creating their systems. When humans have ample opportunities to provide feedback on an AI system, they can learn how to use it better, and the AI can learn more about what its users actually want from it. Kim says this kind of two-way learning incentivizes people to stick with these systems.

3. Considering social dynamics

A major issue for today’s AI systems is understanding context. As an example, Kim described a system used by his students: Students enter a chatroom to have conversations with each other, and an AI-powered moderator watches their chat and can recommend questions to keep the conversation going. While Kim says it may be tempting to just have the AI moderator participate in the conversation, asking the students questions directly instead of just recommending topics of conversation, this wouldn’t encourage the AI to learn more about the social dynamics of the conversation. Instead, by giving users the ability to either accept or reject the AI’s recommendations, the system can learn more about what kinds of questions should be asked and when.

4. Supporting sustainable engagement

Kim says that AI tools are often used only once or twice by a given user, so it’s key to design experiences that can adapt over time to stay relevant to users’ needs. Kim described an experiment he ran with his class in which they developed an AI system that could edit the appearance of websites to make them more easily readable. According to Kim, the class could have made a system in which the AI just automatically creates what it thinks is the perfect website design, but by giving users the ability to edit and tinker with the layout, the AI can learn more about what that specific user is looking for and provide a more personalized experience going forward.

Along with Ben Sherry’s article, we found a useful piece on theatlantic.com that highlights the incredible growth of AI.

“AI already plays a crucial, if often invisible, role in our digital lives. It powers Google search, structures our experience of Facebook and TikTok, and talks back to us in the name of Alexa or Siri. But this new crop of generative AI technologies seems to possess qualities that are more indelibly human. Call it creative synthesis—the uncanny ability to channel ideas, information, and artistic influences to produce original work. Articles and visual art are just the beginning. Google’s AI offshoot, DeepMind, has developed a program, AlphaFold, that can determine a protein’s shape from its amino-acid sequence. In the past two years, the number of drugs in clinical trials developed using an AI-first approach has increased from zero to almost 20.”

The article explains that AI will “change everything.”

read more at inc.com

also at theatlantic.com
