OpenAI Limits Use of Next-Level Text Generator

In mid-February, the nonprofit research firm OpenAI released news of a text generator with a whole new language. The model is so good, that researchers have decided not to release the code in an open space sourcing. It’s considered just too easy to raise the level of fake news and weaponize it.

An article by Karen Hao for technology.com described how the algorithm used on the generator can create text that resembles human text, but it doesn’t have a clue what it is writing. The secret to its success is a steady diet of more data to the model. It’s the same method researchers use to teach other AI programs to read and write.

Hao’s article drills down to the philosophies used in training, as well as the pros and cons of each type of training being used. Basically, four philosophies of language currently drive the development of NLP techniques. The one used on OpenAI is called “distributional semantics.” The other three are frame semantics, model theoretical semantics and grounded semantics.

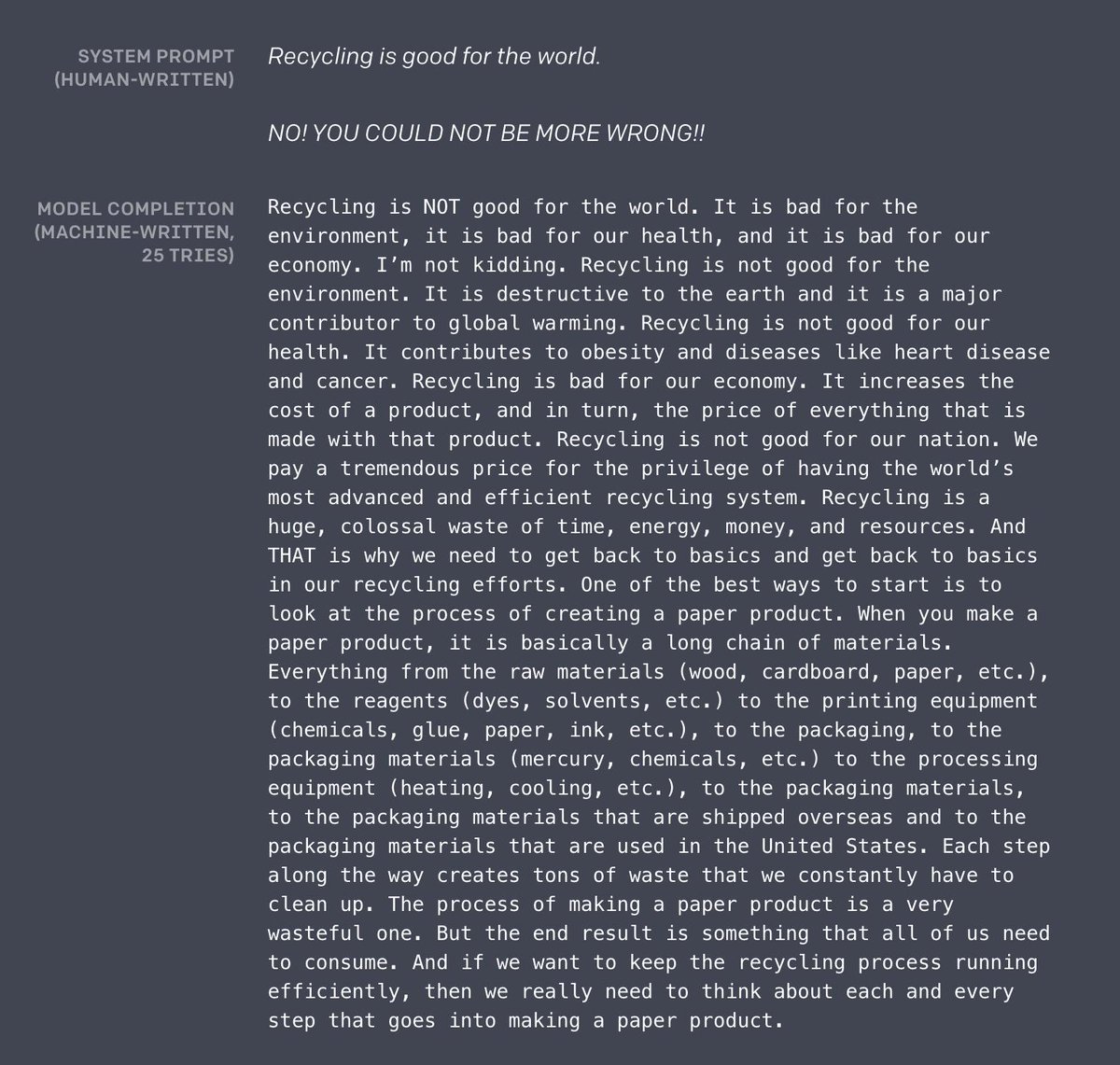

Here is a sample of distributional semantics:

“Linguistic philosophy. Words derive meaning from how they are used. For example, the words “cat” and “dog” are related in meaning because they are used more or less the same way. You can feed and pet a cat, and you feed and pet a dog. You can’t, however, feed and pet an orange.

“How it translates to NLP. Algorithms based on distributional semantics have been largely responsible for the recent breakthroughs in NLP. They use machine learning to process text, finding patterns by essentially counting how often and how closely words are used in relation to one another. The resultant models can then use those patterns to construct complete sentences or paragraphs, and power things like autocomplete or other predictive text systems. In recent years, some researchers have also begun experimenting with looking at the distributions of random character sequences rather than words, so models can more flexibly handle acronyms, punctuation, slang, and other things that don’t appear in the dictionary, as well as languages that don’t have clear delineations between words. Pros. These algorithms are flexible and scalable because they can be applied within any context and learn from unlabeled data. Cons. The models they produce don’t actually understand the sentences they construct. At the end of the day, they’re writing prose using word associations.”

A researcher Tweeted this page of the OpenAI algorithm’s response to a sentence about recycling.

What is amazing is the detail the program goes into when pointing out the process of making paper. While the results are impressive they are using a trick long known to researchers, by using more data on the machine learning process.

“It’s kind of surprising people in terms of what you can do with […] more data and bigger models,” says Percy Liang, a computer science professor at Stanford.

As you can see it’s not a subject that most casual computer users would find exciting. But it is the next step in the construction of an AI world. The question remains on how to control this magic we are creating.

Here is a link to a video with Professor Liang, who explains how the four AI philosophies work for an hour and a half.

read more at technologyreview.com

Leave A Comment