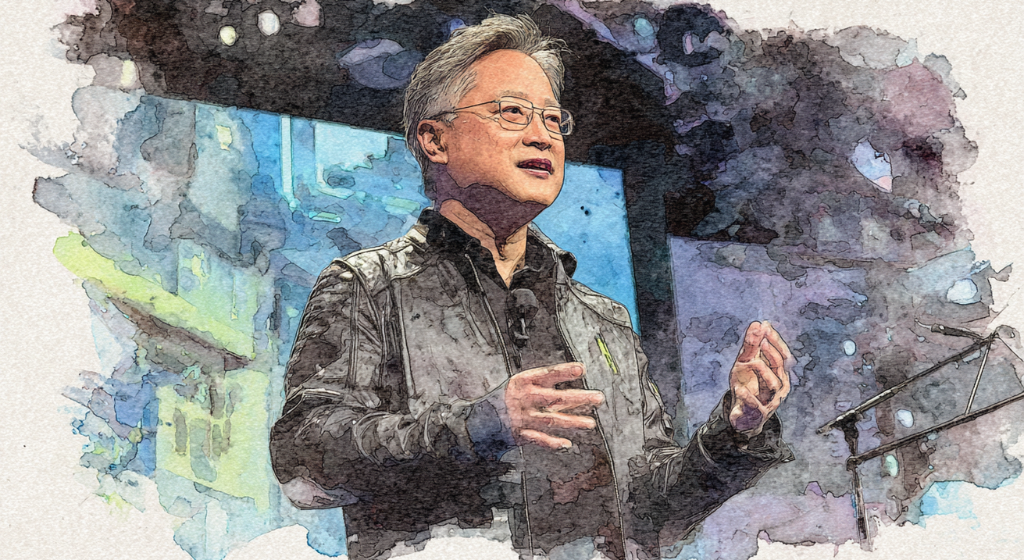

Nvidia’s new Blackwell chips have revolutionized AI training by dramatically cutting the number of chips and time needed to train massive models, cementing the company’s dominance in the AI hardware race. (Source: Image by RR)

Blackwell Chips Reportedly More Than Twice as Fast as Previous Hopper Models

Nvidia’s latest Blackwell chips have significantly improved the efficiency of training large artificial intelligence models, according to benchmark results released by MLCommons, a nonprofit group focused on AI system performance. The results, as reported by Reuters, show a dramatic drop in the number of chips required to train large language models (LLMs), a critical step forward given that AI training remains a major resource and cost bottleneck in the industry.

The new data focused on training performance with complex models such as Meta’s open-source Llama 3.1 405B, which has 405 billion parameters and represents one of the most demanding AI workloads. While both Nvidia and AMD were included in MLCommons’ benchmarking, only Nvidia submitted training results for Llama 3.1. On a per-chip basis, Nvidia’s new Blackwell chips were more than twice as fast as its previous-generation Hopper chips.

In the most efficient benchmark, 2,496 Blackwell chips trained the Llama model in just 27 minutes. By comparison, it took more than three times as many Hopper chips to post a slightly faster time on a similar task. These results reinforce Nvidia’s lead in the high-stakes AI chip race, especially as training efficiency remains a key metric for companies scaling massive models.
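To make the comparison concrete, the arithmetic behind these figures can be sketched in a few lines of Python. This is only an illustration: the Blackwell numbers (2,496 chips, 27 minutes) come from the article, while the Hopper chip count and run time used below are hypothetical placeholders, since the article gives only "more than three times as many" chips and a "slightly faster" time. The function names are likewise made up for this sketch.

```python
def chip_minutes(num_chips: int, minutes: float) -> float:
    """Total accelerator time consumed by one training run."""
    return num_chips * minutes


def per_chip_speedup(new_chips: int, new_minutes: float,
                     old_chips: int, old_minutes: float) -> float:
    """Ratio of chip-minutes between two runs of the same workload.

    A value of 2.0 means each new chip did twice the work per minute.
    Assumes both runs trained the same model to the same target.
    """
    return chip_minutes(old_chips, old_minutes) / chip_minutes(new_chips, new_minutes)


# Figures reported in the article.
BLACKWELL_CHIPS = 2496
BLACKWELL_MINUTES = 27

print(chip_minutes(BLACKWELL_CHIPS, BLACKWELL_MINUTES))  # 67392 chip-minutes

# HYPOTHETICAL Hopper run: exactly 3x the chips and an 18-minute run.
# Neither number is from the article; they are placeholders chosen
# to show where the per-chip ratio would reach exactly 2.0.
hypothetical_hopper_chips = 3 * BLACKWELL_CHIPS
hypothetical_hopper_minutes = 18

print(per_chip_speedup(BLACKWELL_CHIPS, BLACKWELL_MINUTES,
                       hypothetical_hopper_chips, hypothetical_hopper_minutes))
```

Under these placeholder inputs, a Hopper cluster three times the size would need to take 18 minutes or longer for the per-chip ratio to reach 2 or more. The actual Hopper figures would have to be taken from the published MLCommons results.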

Chetan Kapoor of CoreWeave, which worked with Nvidia on these tests, noted a trend toward breaking training workloads into smaller subsystems of chips, rather than relying on massive monolithic chip clusters. This modular approach helps accelerate training times even for ultra-large models, potentially lowering costs and increasing accessibility for companies building next-generation AI tools.

Read more at reuters.com
