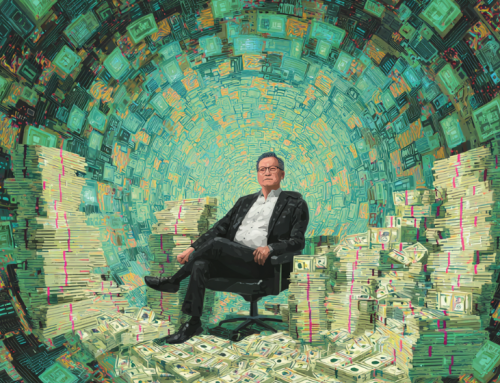

Anthropic’s release of the Claude system prompts paves the way for greater transparency in AI model development, while also giving users insight into how their AI chatbot is programmed to behave. (Source: image by RR)

Claude 3.5 Sonnet, 3 Haiku, and 3 Opus Now More Transparent with Public System Prompts

Anthropic, an OpenAI competitor, has set a new standard for transparency in the AI industry by publicly releasing the system prompts for its Claude family of AI models. These system prompts act as operating instructions: they tell large language models (LLMs) how to interact with users and what behaviors to exhibit, and they state the knowledge cut-off date of the model’s training. While system prompts are common in LLMs, not every company makes them publicly available, which has led to a subculture of “AI jailbreakers” attempting to uncover them. Anthropic has taken a proactive step by publishing the prompts for Claude 3.5 Sonnet, Claude 3 Haiku, and Claude 3 Opus on its website and committing to regular updates.

The system prompts offer insights into the capabilities and behaviors of these models. For example, Claude 3.5 Sonnet, the most advanced model, has a knowledge base last updated in April 2024 and is designed to give responses that are detailed yet concise. It handles controversial topics delicately, avoids unnecessary filler, and is instructed not to identify people in images from their facial features. Claude 3 Opus, with an August 2023 knowledge base, focuses on complex tasks and provides balanced perspectives on sensitive topics but lacks some of the nuanced behavioral guidelines of Sonnet. As reported by venturebeat.com, Claude 3 Haiku, the fastest of the models, also has an August 2023 knowledge base and prioritizes speed and efficiency for quick, concise answers.

Anthropic’s decision to release these system prompts is significant in addressing the “black box” problem in generative AI, where users often don’t understand how or why AI models make decisions. By providing public access to the system prompts, Anthropic offers more transparency into the rules guiding its AI, helping users better understand how the models operate. While the release does not include source code, training data, or model settings, it represents a meaningful step towards AI explainability, allowing users to glimpse the decision-making frameworks behind the Claude models.

AI developers have praised Anthropic’s move as a step forward in transparency that shows other companies in the industry a possible path to follow. While Anthropic still retains control over the deeper technical aspects of its models, the release of system prompts benefits users by clarifying how these AI chatbots are designed to behave, and it sets a precedent for openness in the rapidly evolving AI landscape.

Read more at venturebeat.com
