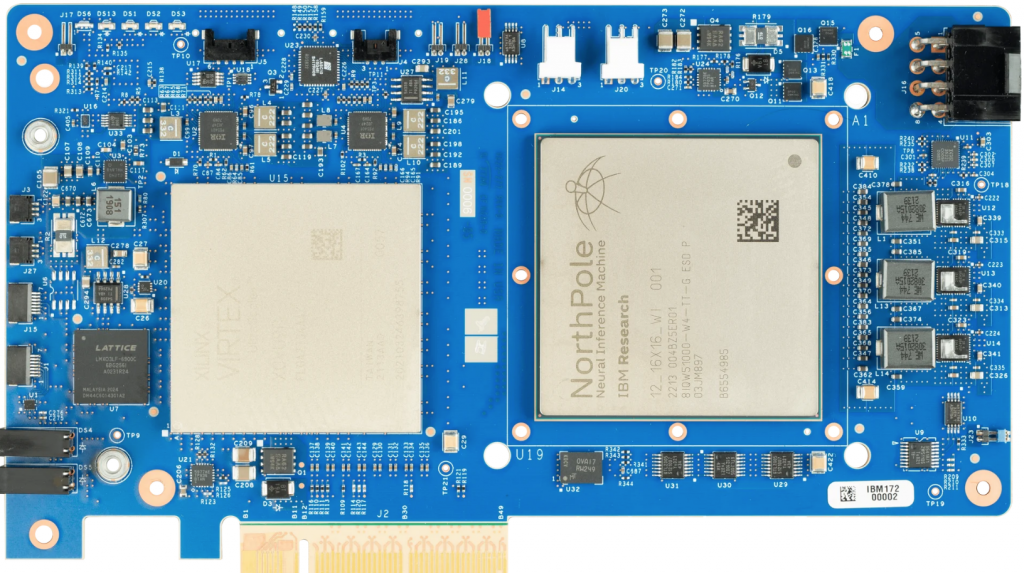

The NorthPole chip on a PCIe card. IBM’s new design is proving highly efficient. (Source: IBM Corp.)

IBM’s NorthPole Chip to Enable AI to Be Energy Efficient, More Effective ML Tool

This week, an article packed with highly technical terms about the building of a new IBM processor brings some exciting high-tech news that even a layman can follow.

The article from arstechnica.com is filled with good information about computer chips, which most of us never think about. It covers the basics of how they are built and what they will be used for. However, today’s computing requires a lot more forethought when designing a new chip.

A new chip prototype from IBM Research’s lab in California, long in the making, has the potential to upend how and where AI is used efficiently.

Energy Demands

As the utility of AI systems has grown dramatically, so has their energy demand. Training new systems is extremely energy intensive, as it generally requires massive data sets and lots of processor time. Executing a trained system tends to be much less involved—smartphones can easily manage it in some cases. But, because you execute them so many times, that energy use also tends to add up.

Fortunately, there are lots of ideas on how to bring the latter energy use back down. IBM and Intel have experimented with processors designed to mimic the behavior of actual neurons. IBM has also tested executing neural network calculations in phase change memory to avoid making repeated trips to RAM.

Now, IBM is back with yet another approach, one that’s a bit of “none of the above.” The company’s new NorthPole processor has taken some of the ideas behind all of these approaches and merged them with a very stripped-down approach to running calculations to create a highly power-efficient chip that can efficiently execute inference-based neural networks.

For things like image classification or audio transcription, the chip can be up to 35 times more efficient than relying on a GPU.

NorthPole Chip

This article is a very interesting read, and maybe an important one for anyone who uses a computer, which means just about everybody. As the article is lengthy, we are bringing you just a few of the chip’s highlights.

“It’s worth clarifying a few things early here. First, NorthPole does nothing to help the energy demand in training a neural network; it’s purely designed for execution. Second, it is not a general AI processor; it’s specifically designed for inference-focused neural networks.”

John Timmer is the author of this piece. He goes into great detail about how the chip is designed and what it was designed for. But more than that, he explains the surprises the new chip had for the designers as it began to exceed their expectations. And if you speak “high-tech chip” language, you will find the data helpful.

Software as Well

Because of all these distinctive design choices, the team behind NorthPole had to develop its own training software that figures out things like the minimum level of precision that’s necessary at each layer to operate successfully.

“Executing neural networks on the chip is also a relatively unusual process. Once the weights and connections of the neural network are placed in buffers on the chip, execution simply requires an external controller—typically a CPU—to upload the data it’s meant to operate on (such as an image) and tell it to start. Everything else runs to completion without the CPU’s involvement, which should also limit the system-level power consumption.

“The NorthPole test chips were built on a 12 nm process, which is well behind the cutting edge. Still, they managed to fit 256 computational units, each with 768 kilobytes of memory, onto a 22 billion transistor chip. When the system was run against an Nvidia V100 Tensor Core GPU that was fabricated using a similar process, they found that NorthPole managed to perform 25 times the calculations for the same amount of power. It could outperform a cutting-edge GPU by about fivefold using the same measure.”
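For readers who like to check the math, here is a minimal Python sketch that works through the figures quoted above. It only uses the numbers from the article; the assumption that one kilobyte means 1,024 bytes, and the variable names, are ours and not IBM's.

```python
# Figures quoted in the article above.
UNITS = 256            # computational units on the test chip
KB_PER_UNIT = 768      # kilobytes of memory attached to each unit
V100_ADVANTAGE = 25    # calculations per unit of power, relative to the V100

# Total memory sitting next to the compute units.
# Assumption: 1 kilobyte = 1,024 bytes, so 1,024 KB = 1 MB.
total_kb = UNITS * KB_PER_UNIT
total_mb = total_kb / 1024

# If the chip does 25 times the calculations for the same power,
# the same workload needs roughly 1/25 of the energy.
energy_fraction = 1 / V100_ADVANTAGE

print(f"Memory across all units: {total_mb:.0f} MB")
print(f"Energy for the same work vs. the V100: {energy_fraction:.0%}")
```

Under these assumptions the memory attached to the computational units adds up to 192 MB, and a job that matches the 25-times figure would need about 4 percent of the energy the older GPU would use. Real-world savings will vary with the workload.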

Tests with the system showed it could perform a range of widely used neural network tasks efficiently, as well. Still, how much IBM’s chip actually saves on energy will depend on how it’s applied to a project.

IBM’s newest chip has proven to be very useful in the proper situation. If you are excited about this new approach to chip design, be sure to click the link below for the rest of the information that has IBM excited as well.

read more at arstechnica.com
