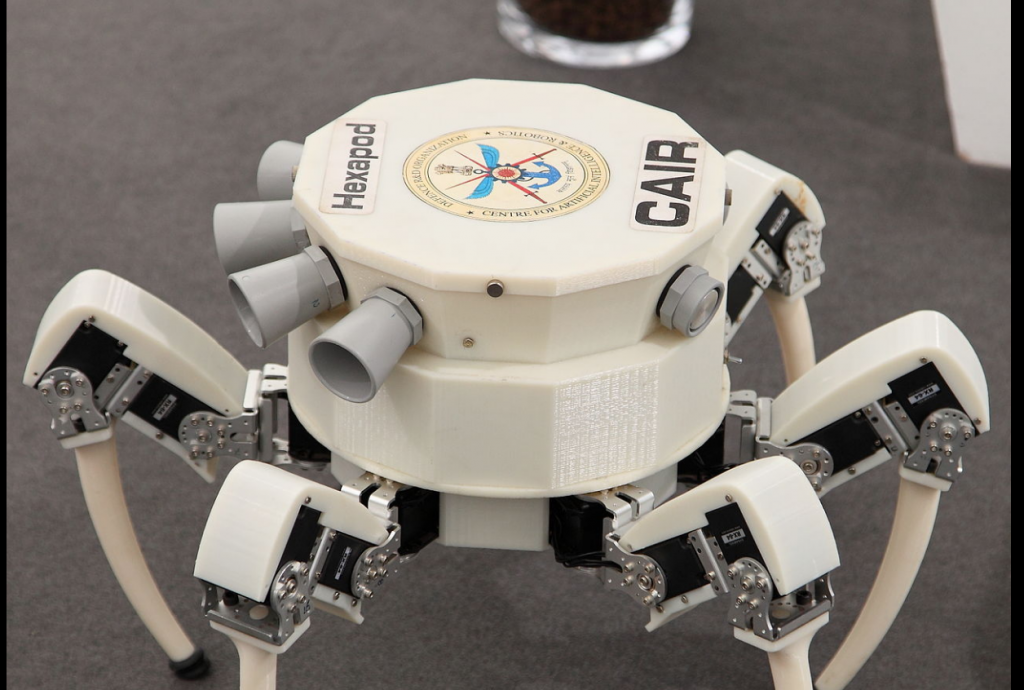

Older AI weapons like this one could become problematic for the Pentagon in the future.

Report Cites Problems in Old Military AI, Potential for Accidents

A report from analysts at the Center for Security and Emerging Technology (CSET) cautions the Defense Department about being dependent on AI of any kind, according to Nationaldefensemagazine.org.

CSET, part of Georgetown University’s Walsh School of Foreign Service, says that the U.S. military needs to consider how decades-old AI technology could lead to accidents with catastrophic consequences, while also ensuring that new technologies have safeguards to prevent similar problems.

Doomsday examples similar to those in sci-fi movies illustrate the importance of getting artificial intelligence right, according to the report, “AI Accidents: An Emerging Threat — What Could Happen and What to Do.”

While AI accidents in sectors outside the Defense Department, such as power grids, could certainly be catastrophic, the military is a particularly high-risk area, said Helen Toner, co-author of the report and CSET’s director of strategy.

“The chance of failure is higher and obviously when you have weaponry involved, that’s always going to up the stakes,” she said during an interview.

CSET’s report describes a potential doomsday storyline involving phantom missile launches.

Without relaying the details of the scenario here, let’s just say it doesn’t end well, due to one of three types of AI failure. According to the report, these fall into three categories: robustness, specification, and assurance.

The Defense Department must also be mindful that while researchers and engineers are developing new systems that could one day be more resilient, many of today’s systems will be around for a long time, the CSET report noted.

“Governments, businesses and militaries are preparing to use today’s flawed, fragile AI technologies in critical systems around the world,” the study said. “Future versions of AI technology may be less accident-prone, but there is no guarantee.”

To better avoid accidents, the federal government should facilitate information sharing about incidents and near misses; invest in AI safety research and development; fund AI standards development and testing capacity; and work across borders to reduce accident risks, the report said.

A past example of a related problem was the Boeing 737 MAX airliner, which had problems with an automated flight-control system called MCAS. It would be wise for our government, and for the rest of us, to be careful about how much older or untested AI we allow into our lives and businesses.

Read more at nationaldefensemagazine.org
