Images promoting the Qoves Aesthetics reports from a video touting the beauty rating service.

Program for Rating Physical Beauty Gets Critical Reviews from Women Researchers

A recent story at technologyreview.com, the MIT magazine covering AI and high-tech news, examined one program in particular that rates faces for beauty and surveyed an array of others that use varying criteria to determine attractiveness.

The Qoves Studio facial assessment tool is used by the company’s “facial aesthetics consultancy,” which serves modeling agencies, offers plastic surgery consulting and advice on beauty products, and shows how to enhance digital images. One of its uses is to tell people how beautiful they are, or aren’t, and how they can fix their flaws with everything from fillers and creams to plastic surgery.

The writer, Tate Ryan-Mosley, tested the system with her own photo. She uploaded an unedited snapshot taken without makeup and got back a fairly damning report:

“I uploaded the most bearable photo, and within milliseconds Qoves returned a report card of the 10 “predicted flaws” on my face. Topping the list was a 0.7 probability of nasolabial folds, followed by a 0.69 probability of under-eye contour depression, and a 0.66 probability of periocular discoloration. In other words, it suspected (correctly) that I have dark bags under my eyes and smile lines, both of which register as problematic with the AI.”

When Ryan-Mosley uploaded an older, more flattering photo, she got a better rating. Qoves is one of many such AI tools used by dating apps and cosmetics websites. These tools are meant primarily to rate women, though they are also used on men.

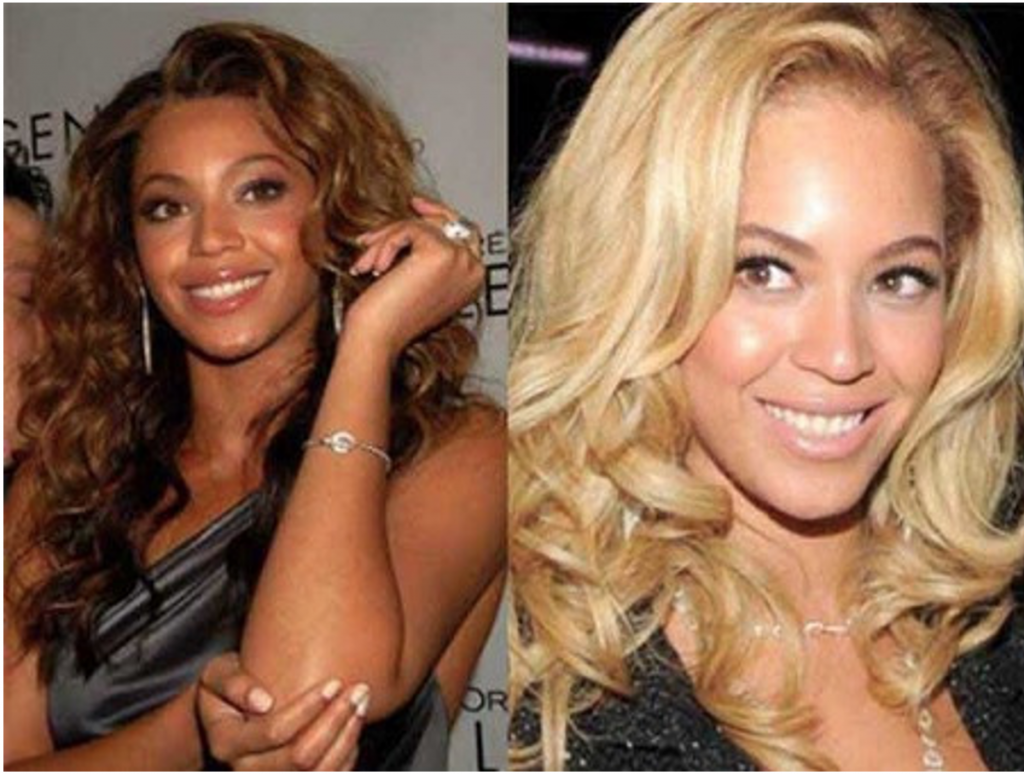

A comparison of two photos of Beyoncé Knowles from Lauren Rhue’s research using Face++. The Face++ AI predicted the image on the left would rate at 74.776% for men and 77.914% for women. The image on the right, meanwhile, scored 87.468% for men and 91.14% for women in its model. Obviously, the model is biased towards white faces.

What’s most disturbing, however, is that other algorithms, such as the open facial recognition platform Face++, created by a Chinese company, rate women according to how each gender would rate them, and women tend to be more generous than men in that department. Ryan-Mosley writes:

“Its beauty scoring system was developed by the Chinese imaging company Megvii and, like Qoves, uses AI to examine your face. But instead of detailing what it sees in clinical language, it boils down its findings into a percentage grade of likely attractiveness. In fact, it returns two results: one score that predicts how men might respond to a picture, and the other that represents a female perspective. Using the service’s free demo and the same unglamorous photo, I quickly got my results. ‘Males generally think this person is more beautiful than 69.62% of persons’ and ‘Females generally think this person is more beautiful than 73.877%.’”
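
A statement such as “more beautiful than 69.62% of persons” reads like a percentile rank: the share of some reference group that scored lower. How Face++ actually produces its figure is not described in the story, so the Python sketch below is only an illustration of that arithmetic. The function name `percentile_rank` and the reference scores are hypothetical and invented for the example.

```python
from bisect import bisect_left


def percentile_rank(score, reference_scores):
    """Return the percentage of reference scores strictly below `score`."""
    ordered = sorted(reference_scores)
    below = bisect_left(ordered, score)  # count of values lower than score
    return 100.0 * below / len(ordered)


# Made-up reference scores, for illustration only (not Face++ data).
reference = [52.0, 58.5, 61.0, 64.2, 67.9, 70.3, 73.1, 78.4, 82.0, 90.6]

# Six of the ten reference scores are lower than 71.0.
print(percentile_rank(71.0, reference))  # 60.0
```

The result depends entirely on who is in the reference group, which is one reason a single percentage says little on its own.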

While most of the systems are proprietary, Qoves, an Australian company, says its program uses a convolutional neural network (CNN). The model draws on thousands of pictures and the ratings people gave them.

Other similar apps are mostly meant for selling products, such as one used by the cosmetics company Ulta.

“Other big companies have invested in beauty AIs in recent years. They include the American cosmetics retailer Ulta Beauty, valued at $18 billion, which developed a skin analysis tool. Nvidia and Microsoft backed a ‘robot beauty pageant’ in 2016, which challenged entrants to develop the best AI to determine attractiveness.”

Computer vision cannot see tones as well as people can, so its assessments are often inherently flawed, according to Lauren Rhue, an economist and assistant professor of information systems at the University of Maryland, College Park. She found that the systems are racist, consistently rating dark-skinned women as less attractive than Caucasian women. Older women were rated as less attractive, too.
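
The size of the gap in the Beyoncé comparison can be worked out from the four scores quoted in the photo caption. The short Python sketch below does only that subtraction. The dictionary layout and the helper `score_gap` are assumptions made for this example, and two images are far too few to measure bias on their own; an audit like Rhue’s would compare many faces.

```python
# Scores quoted in the photo caption (Face++ output from Rhue's research).
scores = {
    "left": {"male": 74.776, "female": 77.914},
    "right": {"male": 87.468, "female": 91.14},
}


def score_gap(scores, perspective):
    """Right-image score minus left-image score, in percentage points."""
    gap = scores["right"][perspective] - scores["left"][perspective]
    return round(gap, 3)  # rounding hides floating-point noise


for perspective in ("male", "female"):
    print(perspective, score_gap(scores, perspective))
# male 12.692
# female 13.226
```

Under both the predicted male and female perspectives, the right-hand photo scores roughly 13 points higher than the left-hand photo of the same person.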

Read more at technologyreview.com
