Lightmatter's new AI chip runs faster than conventional chips, and because it moves data using light, it also uses less power.

Lightmatter Boosts AI Computing Speed Using Data Transmitted by Light

Lightmatter, a startup spun out of MIT, has developed an AI chip that uses light to perform key calculations, according to a story on Wired.com. Writer Will Knight reports that the chip transmits data using light instead of electricity and, according to the company, runs 1.5 to 10 times faster than Nvidia's top-of-the-line A100 AI chip.

“Either we invent new kinds of computers to continue,” says Lightmatter CEO Nick Harris, “or AI slows down.”

Conventional computer chips use transistors to control the flow of electrons through a semiconductor. By reducing information to a series of 1s and 0s, they can perform logical operations and run software. Lightmatter's chip, by contrast, is designed to perform only one specific kind of mathematical calculation used to run AI programs.

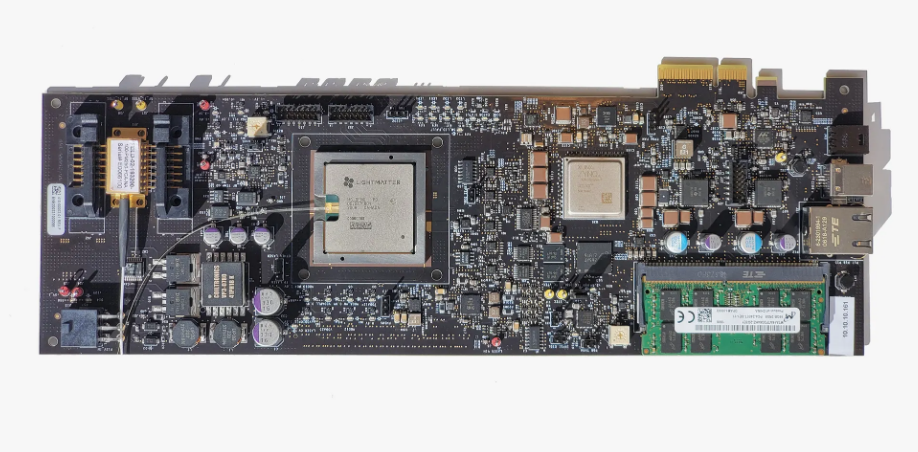

Knight describes the new chip as looking like a regular computer chip with several fiber-optic cables attached.

“But it performed calculations by splitting and mixing beams of light within tiny channels, measuring just nanometers. An underlying silicon chip orchestrates the functioning of the photonic part, and also provides temporary memory storage.”

Lightmatter plans to start shipping its first chip, called Envise, later this year. The company will also sell server blades, each containing 16 chips, that fit into conventional data centers. Lightmatter has raised $22 million from GV (formerly Google Ventures), Spark Capital and Matrix Partners.

Warp Speed

Lightmatter says that when running BERT, a natural language model, Envise is five times faster than the Nvidia chip while consuming one-sixth of the power. The chip is faster and more efficient for certain AI calculations because information can be encoded more efficiently in different wavelengths of light.
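The two BERT figures can be combined into a rough energy estimate. Assuming both refer to the same workload, and that "one-sixth of the power" means power draw (energy per unit time) rather than total energy, energy per task scales as power multiplied by running time:

$$
\frac{E_{\text{Envise}}}{E_{\text{A100}}}
= \frac{P_{\text{Envise}}}{P_{\text{A100}}} \times \frac{t_{\text{Envise}}}{t_{\text{A100}}}
= \frac{1}{6} \times \frac{1}{5}
= \frac{1}{30}
$$

Under those assumptions, Envise would use roughly one-thirtieth of the A100's energy per BERT task; if the company's figure already refers to energy per task, the saving is simply one-sixth.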

The main drawback is that the chip computes in analog rather than digital form, so its results are less precise than those of digital silicon chips. To compensate, Lightmatter plans to market the chip for running pre-trained AI models rather than for training them.
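To give a feel for the accuracy trade-off, the sketch below models an analog calculation as the exact digital result plus a small random error. It is a toy illustration, not a model of Envise: it assumes the core operation is a matrix-vector product (typical of neural networks), and the Gaussian noise model, the 1% noise level, the made-up weights, and the function names `digital_matvec` and `analog_matvec` are all hypothetical.

```python
import random

def digital_matvec(matrix, vector):
    """Exact matrix-vector product, as a digital chip would compute it."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def analog_matvec(matrix, vector, noise_level=0.01, seed=0):
    """Hypothetical analog model: each output is the exact result plus
    Gaussian noise whose standard deviation is noise_level * |result|."""
    rng = random.Random(seed)
    return [y + rng.gauss(0.0, noise_level * abs(y))
            for y in digital_matvec(matrix, vector)]

def argmax(values):
    """Index of the largest value (e.g. the predicted class)."""
    return max(range(len(values)), key=lambda i: values[i])

# Toy "pre-trained" weights and an input vector (made-up numbers).
weights = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 1.0],
           [0.0, 1.0, 0.0]]
x = [1.0, 2.0, 3.0]

exact = digital_matvec(weights, x)   # [1.0, 5.0, 2.0]
approx = analog_matvec(weights, x)   # close to exact, but generally not identical

print("digital:", exact)
print("analog: ", [round(v, 3) for v in approx])
print("same prediction:", argmax(exact) == argmax(approx))
```

In this toy run the individual outputs drift slightly, but the largest output (the toy model's "prediction") stays the same, which offers one intuition for why running an already-trained model may tolerate some analog imprecision.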

Read more at Wired.com.
