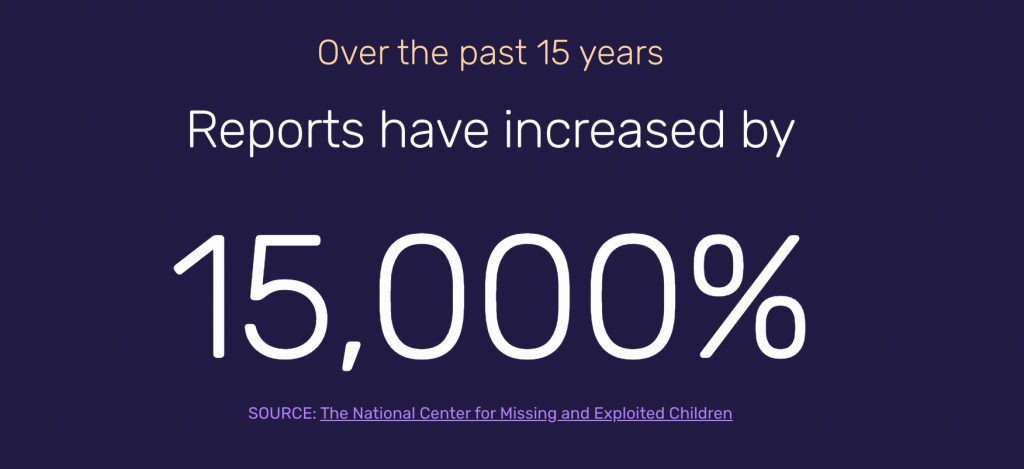

CSAM incidents are increasing exponentially. (Source: Safer)

AI Tools Make Major Advances in Fighting Growing Child Exploitation Threats

According to a story on Analytics Insight, online child exploitation is growing. A report by the UK's Internet Watch Foundation found that online abuse involving Child Sexual Abuse Material (CSAM) rose 50% during the COVID-19 lockdown.

The story reviews eight programs that can help prevent and thwart efforts to exploit or otherwise abuse children. A short summary of each follows:

1. Safer – Developed by the nonprofit Thorn, this tool detects child abuse images with around 99% accuracy. Safer lets tech platforms identify, remove and report child sexual abuse material at scale, a critical step toward eliminating CSAM. During its beta phase alone, Safer enabled the takedown of nearly 100,000 known CSAM files.

2. Child Safe.AI – This AI platform monitors and models child exploitation risk on the web. Already deployed by U.S. law enforcement, it actively collects signals of exploitation threats from online ecosystems and models those signals into estimates of probable risk. It observes millions of conversational, content and photographic signals.

3. Spotlight – Also developed by Thorn, this technology uses predictive analytics to help identify victims of child sexual abuse and child trafficking. By analyzing web traffic and data gathered from sex ads and escort websites, it flags potential victims of human and child trafficking. U.S. federal agencies already use it to solve complex child trafficking cases.

4. AI Technology by the United Nations Interregional Crime and Justice Research Institute (UNICRI) – This initiative uses AI tools and robotics to locate long-missing children, scan illicit sex ads and disrupt human and child trafficking. The technology is still evolving and is not yet widely used.

5. Griffeye – This technology uses computer vision techniques such as facial recognition and image recognition to scan images for nudity and age. U.S. federal agencies already use it to identify and combat CSAM.

6. Google’s AI tool – In 2018, Google introduced an AI tool to help combat online child abuse. Using deep neural networks for image processing, the tool helps reviewers and NGOs sort through large volumes of images by prioritizing the content most likely to be CSAM for review. It also helps reviewers keep pace with offenders by flagging content that has not previously been confirmed as CSAM.

7. ai – This technology uses computer vision models trained on real CSAM to identify and flag new images containing child abuse. It is used in conjunction with hash lists of known material and reduces investigators’ manual workload. It also helps prioritize cases involving child sexual abuse.

8. Cellebrite AI tools – Cellebrite’s AI tools use machine learning algorithms to help investigators streamline the collation, analysis and reporting of evidence of child abuse.

Read more at analyticsinsight.net